The objective of our project is to learn the concepts of a CNN and LSTM model and build a working model of Image caption generator by implementing CNN with LSTM.

In this Python project, we will be implementing the caption generator using CNN (Convolutional Neural Networks) and LSTM (Long short term memory). The image features will be extracted from Xception which is a CNN model trained on the imagenet dataset and then we feed the features into the LSTM model which will be responsible for generating the image captions.

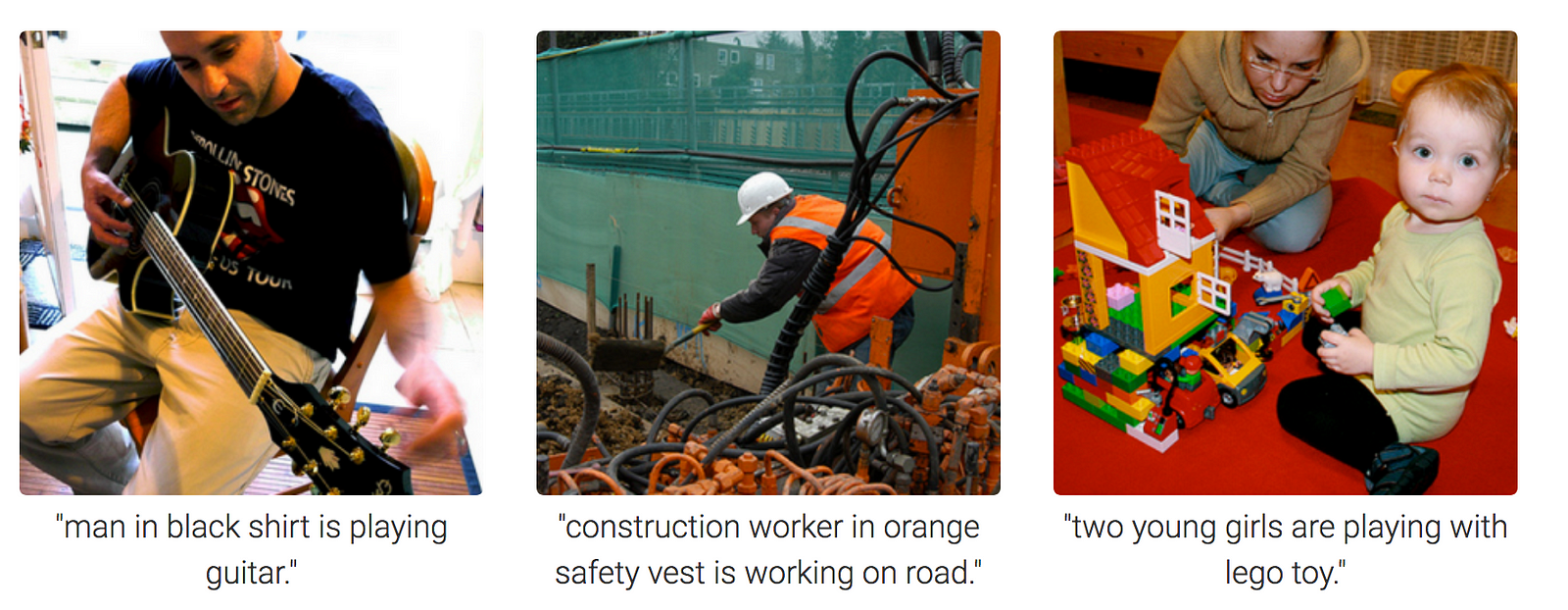

Examples

Image Credits : Towardsdatascience

- Requirements

- Training parameters and results

- Generated Captions on Test Images

- Procedure to Train Model

- Procedure to Test on new images

- Configurations (config.py)

- Frequently encountered problems

- TODO

- References

Recommended System Requirements to train model.

- A good CPU and a GPU with atleast 8GB memory

- Atleast 8GB of RAM

- Active internet connection so that keras can download inceptionv3/vgg16 model weights

Required libraries for Python along with their version numbers used while making & testing of this project

- Python - 3.6.7

- Numpy - 1.16.4

- Tensorflow - 1.13.1

- Keras - 2.2.4

- nltk - 3.2.5

- PIL - 4.3.0

- Matplotlib - 3.0.3

- tqdm - 4.28.1

Flickr8k Dataset: Dataset Request Form

UPDATE (August/2021): The official site seems to have been taken down (although the form still works). Here are some direct download links:

- Flickr8k_Dataset

- Flickr8k_text Download Link Credits: Jason Brownlee

Important: After downloading the dataset, put the reqired files in train_val_data folder

batch_size=64took ~14GB GPU memory in case of InceptionV3 + AlternativeRNN and VGG16 + AlternativeRNNbatch_size=64took ~8GB GPU memory in case of InceptionV3 + RNN and VGG16 + RNN- If you're low on memory, use google colab or reduce batch size

- In case of BEAM Search,

lossandval_lossare same as in case of argmax since the model is same

| Model & Config | Argmax | BEAM Search |

|---|---|---|

InceptionV3 + AlternativeRNN

|

(Lower the better) (Higher the better) |

BLEU Scores on Validation data (Higher the better) |

InceptionV3 + RNN

|

(Lower the better) (Higher the better) |

BLEU Scores on Validation data (Higher the better) |

VGG16 + AlternativeRNN

|

(Lower the better) (Higher the better) |

BLEU Scores on Validation data (Higher the better) |

VGG16 + RNN

|

(Lower the better) (Higher the better) |

BLEU Scores on Validation data (Higher the better) |

Model used - InceptionV3 + AlternativeRNN

- Clone the repository to preserve directory structure.

git clone https://github.com/dabasajay/Image-Caption-Generator.git - Put the required dataset files in

train_val_datafolder (files mentioned in readme there). - Review

config.pyfor paths and other configurations (explained below). - Run

train_val.py.

- Clone the repository to preserve directory structure.

git clone https://github.com/dabasajay/Image-Caption-Generator.git - Train the model to generate required files in

model_datafolder (steps given above). - Put the test images in

test_datafolder. - Review

config.pyfor paths and other configurations (explained below). - Run

test.py.

config

images_path:- Folder path containing flickr dataset imagestrain_data_path:- .txt file path containing images ids for trainingval_data_path:- .txt file path containing imgage ids for validationcaptions_path:- .txt file path containing captionstokenizer_path:- path for saving tokenizermodel_data_path:- path for saving files related to modelmodel_load_path:- path for loading trained modelnum_of_epochs:- Number of epochsmax_length:- Maximum length of captions. This is set manually after training of model and required for test.pybatch_size:- Batch size for training (larger will consume more GPU & CPU memory)beam_search_k:- BEAM search parameter which tells the algorithm how many words to consider at a time.test_data_path:- Folder path containing images for testing/inferencemodel_type:- CNN Model type to use -> inceptionv3 or vgg16random_seed:- Random seed for reproducibility of results

rnnConfig

embedding_size:- Embedding size used in Decoder(RNN) ModelLSTM_units:- Number of LSTM units in Decoder(RNN) Modeldense_units:- Number of Dense units in Decoder(RNN) Modeldropout:- Dropout probability used in Dropout layer in Decoder(RNN) Model

- Out of memory issue:

- Try reducing

batch_size

- Try reducing

- Results differ everytime I run script:

- Due to stochastic nature of these algoritms, results may differ slightly everytime. Even though I did set random seed to make results reproducible, results may differ slightly.

- Results aren't very great using beam search compared to argmax:

- Try higher

kin BEAM search usingbeam_search_kparameter in config. Note that higherkwill improve results but it'll also increase inference time significantly.

- Try higher

-

Support for VGG16 Model. Uses InceptionV3 Model by default.

-

Implement 2 architectures of RNN Model.

-

Support for batch processing in data generator with shuffling.

-

Implement BEAM Search.

-

Calculate BLEU Scores using BEAM Search.

-

Implement Attention and change model architecture.

-

Support for pre-trained word vectors like word2vec, GloVe etc.

- Show and Tell: A Neural Image Caption Generator - Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan

- Where to put the Image in an Image Caption Generator - Marc Tanti, Albert Gatt, Kenneth P. Camilleri

- How to Develop a Deep Learning Photo Caption Generator from Scratch