forked from validatedpatterns/rag-llm-gitops


updated readme and added testplan for ci (validatedpatterns#15)


Showing 2 changed files with 96 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,93 @@ | ||

| # Test Cases for E2E Demo. | ||


| - [Provisioning of GPU Node](https://github.com/validatedpatterns-sandbox/rag-llm-gitops/blob/main/GPU_provisioning.md) | ||


| - MachineSet is created. | ||

| - The MachineSet is named `<clustername>-gpu-<AWSregion>`. This naming is not a hard requirement. | ||

| - The MachineSet has a taints section: | ||

| ```yaml | ||

| taints: | ||

|   - effect: NoSchedule | ||

|     key: odh-notebook # Use your own taint name, or skip the taint altogether | ||

|     value: 'true' | ||

| ``` | ||

| - MachineSet has a label | ||

| ```yaml | ||

| metadata: | ||

|   labels: | ||

|     node-role.kubernetes.io/odh-notebook: '' # Use your own label if needed | ||

| ``` | ||

| - MachineSet instance type | ||

| ```yaml | ||

| providerSpec: | ||

|   value: | ||

|     ........................ | ||

|     instanceType: g5.2xlarge # Change the instance type if needed | ||

| ``` | ||

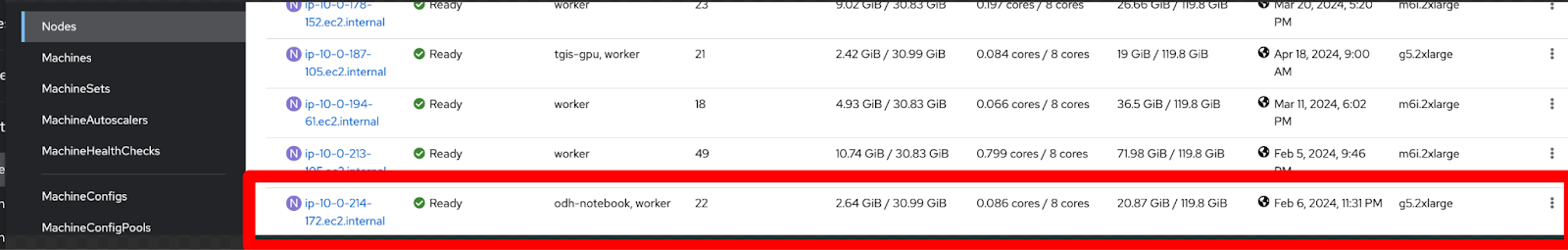
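The MachineSet checks above can also be run against an exported manifest. The following is a minimal Python sketch, assuming the MachineSet has been exported as JSON (for example with `oc get machineset <name> -n openshift-machine-api -o json`) and parsed into a dict, and assuming the taints, labels, and provider spec sit under `spec.template.spec`. The function name `check_gpu_machineset` and its default arguments are illustrative and are not part of the pattern.

```python
def check_gpu_machineset(machineset, taint_key="odh-notebook",
                         label="node-role.kubernetes.io/odh-notebook",
                         instance_type="g5.2xlarge"):
    """Return a list of problems found; an empty list means every check passed."""
    # Assumption: node-level settings live under spec.template.spec.
    node_spec = machineset.get("spec", {}).get("template", {}).get("spec", {})
    problems = []

    # The taint is optional in the test plan; pass taint_key=None to skip it.
    taints = node_spec.get("taints") or []
    if taint_key is not None and not any(
        t.get("key") == taint_key and t.get("effect") == "NoSchedule"
        for t in taints
    ):
        problems.append(f"missing NoSchedule taint with key {taint_key!r}")

    labels = node_spec.get("metadata", {}).get("labels") or {}
    if label not in labels:
        problems.append(f"missing label {label!r}")

    actual = node_spec.get("providerSpec", {}).get("value", {}).get("instanceType")
    if actual != instance_type:
        problems.append(f"instanceType is {actual!r}, expected {instance_type!r}")

    return problems


# Hypothetical sample that mirrors the YAML fragments in this test plan.
sample_machineset = {"spec": {"template": {"spec": {
    "taints": [{"effect": "NoSchedule", "key": "odh-notebook", "value": "true"}],
    "metadata": {"labels": {"node-role.kubernetes.io/odh-notebook": ""}},
    "providerSpec": {"value": {"instanceType": "g5.2xlarge"}},
}}}}
problems = check_gpu_machineset(sample_machineset)
```

If a different taint name, label, or instance type is used, pass it as an argument rather than editing the function.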

| - The nodes are provisioned with the proper label. The number of pods running should be greater than 20. | ||


| - NVIDIA pods should be running on the nodes. Check the pods running on the GPU nodes. | ||


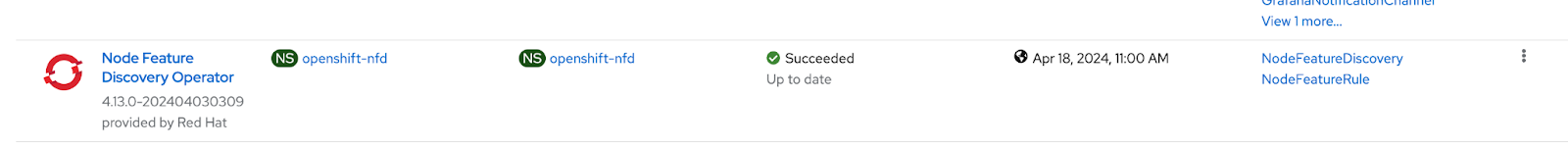

| - Verify Node Feature Discovery Operator is installed: | ||

| - Select Installed Operators from the left Navigation Bar and under Projects, select All Projects. The Node Feature Discovery Operator should be installed. | ||

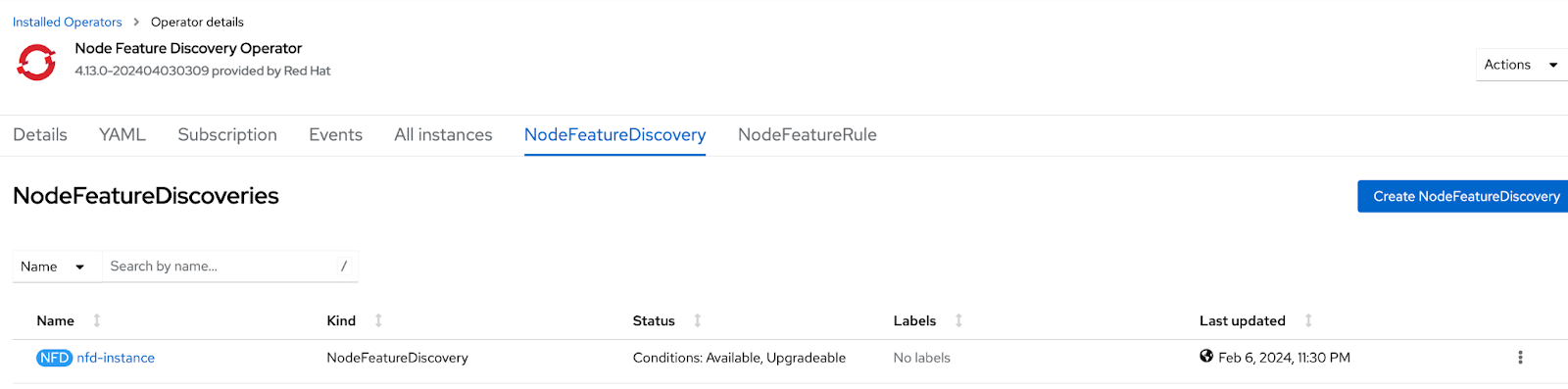

| - Click on the Node Feature Discovery Operator. Under NodeFeatureDiscovery an instance should be created. Status should be Available. | ||


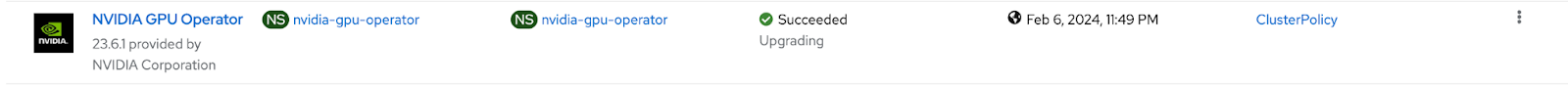

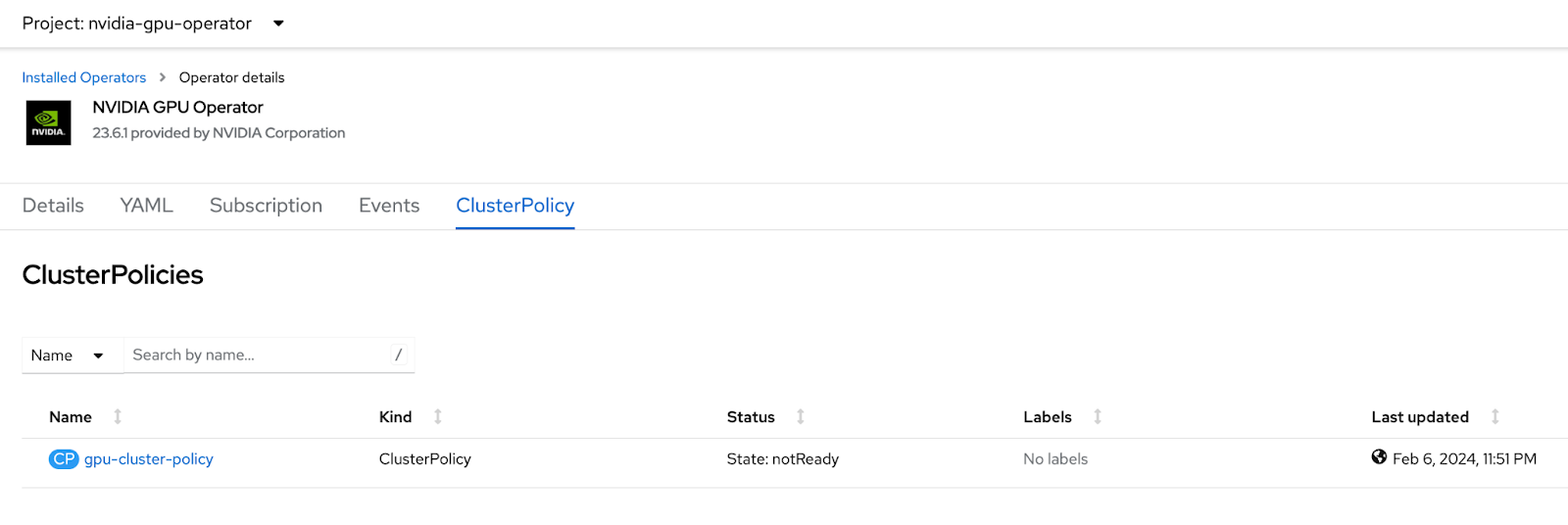

| - Verify NVIDIA GPU Operator is installed. | ||

| - NVIDIA GPU Operator is installed | ||


| - Click on the NVIDIA GPU Operator and click on ClusterPolicy. A gpu-cluster-policy should exist | ||

| - Click on the gpu-cluster-policy and click on the YAML tab. The YAML should contain the tolerations: | ||

| ```yaml | ||

| tolerations: | ||

|   - effect: NoSchedule | ||

|     key: odh-notebook | ||

|     value: 'true' | ||

| ``` | ||

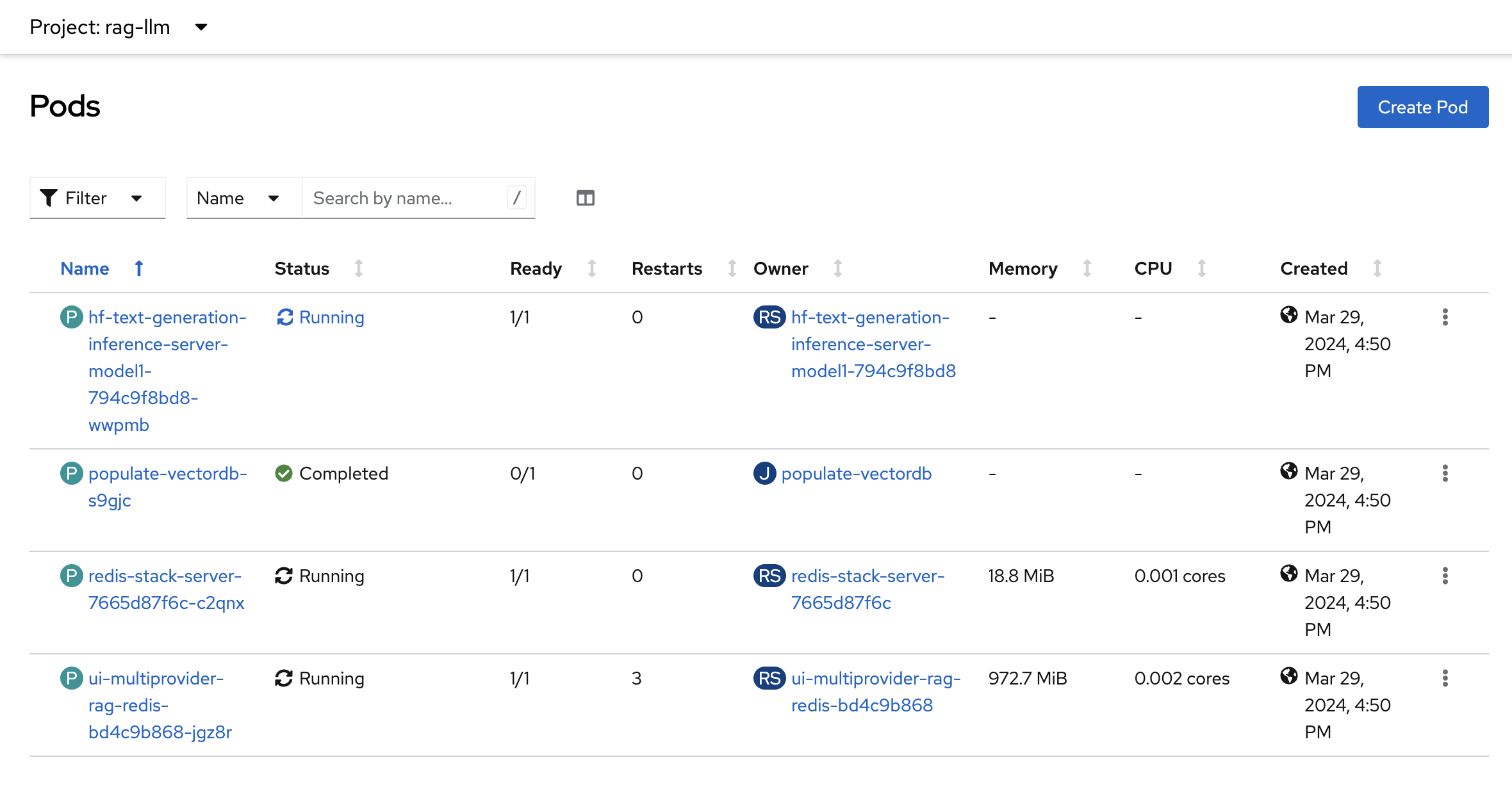
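The tolerations in the gpu-cluster-policy must match the taint on the GPU MachineSet, otherwise the NVIDIA pods cannot be scheduled on the tainted nodes. The following is a minimal Python sketch of the Kubernetes matching rule (operator `Equal` by default, `Exists` ignoring the value, an empty effect matching any effect). It assumes both lists have already been copied out of the manifests as lists of dicts; the function names `tolerates` and `untolerated_taints` are illustrative.

```python
def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    operator = toleration.get("operator", "Equal")
    # An empty effect on the toleration matches every taint effect.
    if toleration.get("effect") and toleration["effect"] != taint.get("effect"):
        return False
    if operator == "Exists":
        # With Exists, the value is ignored; an empty key matches every key.
        return not toleration.get("key") or toleration["key"] == taint.get("key")
    # Equal: both key and value must match.
    return (toleration.get("key") == taint.get("key")
            and toleration.get("value", "") == taint.get("value", ""))


def untolerated_taints(taints, tolerations):
    """Return the taints that none of the tolerations match."""
    return [t for t in taints if not any(tolerates(tol, t) for tol in tolerations)]


# Values taken from the YAML fragments in this test plan.
node_taints = [{"effect": "NoSchedule", "key": "odh-notebook", "value": "true"}]
policy_tolerations = [{"effect": "NoSchedule", "key": "odh-notebook", "value": "true"}]
leftover = untolerated_taints(node_taints, policy_tolerations)
```

An empty `leftover` list means every taint on the GPU nodes is tolerated by the cluster policy.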

| - Application provisioned correctly. | ||

| - Click on the rag-llm namespace | ||

| - Four pods should be running | ||


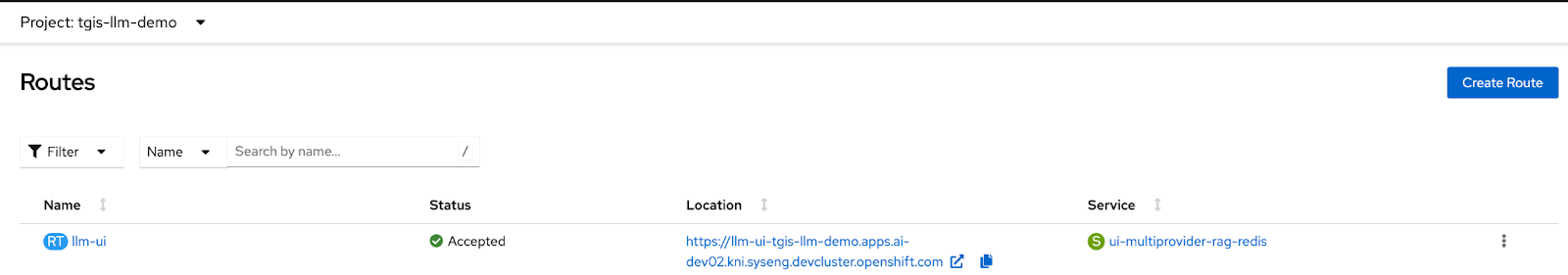
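The pod count can also be checked from the command line output instead of the console. The following is a minimal Python sketch, assuming the pod list was exported with `oc get pods -n rag-llm -o json` and parsed into a dict; the function name `running_pods` is illustrative. Note that a pod in the `Running` phase is not necessarily ready, so this is a coarser check than the console view.

```python
def running_pods(pod_list):
    """Return the names of pods whose status.phase is Running."""
    return [
        pod.get("metadata", {}).get("name", "<unnamed>")
        for pod in pod_list.get("items", [])
        if pod.get("status", {}).get("phase") == "Running"
    ]


# Hypothetical pod list; the pod names are placeholders, not the real ones.
sample_pods = {"items": [
    {"metadata": {"name": "pod-a"}, "status": {"phase": "Running"}},
    {"metadata": {"name": "pod-b"}, "status": {"phase": "Running"}},
    {"metadata": {"name": "pod-c"}, "status": {"phase": "Running"}},
    {"metadata": {"name": "pod-d"}, "status": {"phase": "Running"}},
    {"metadata": {"name": "pod-e"}, "status": {"phase": "Pending"}},
]}
names = running_pods(sample_pods)
enough_pods = len(names) >= 4  # the test plan expects four running pods
```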

| - Click on Networking → Routes from the left Navigation panel. An llm-ui route should exist | ||


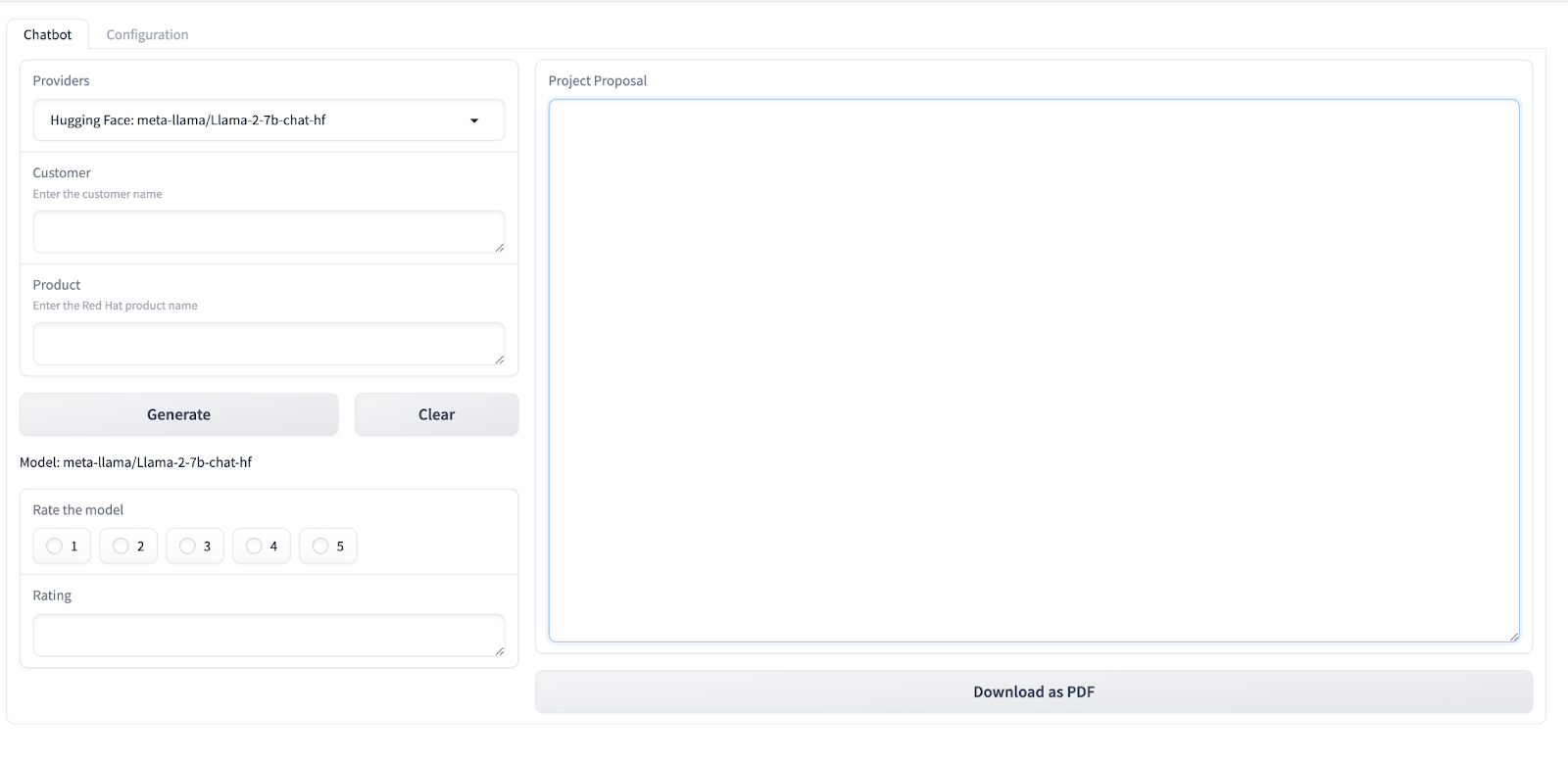

| - Click on the link under Location column and it should launch the application | ||


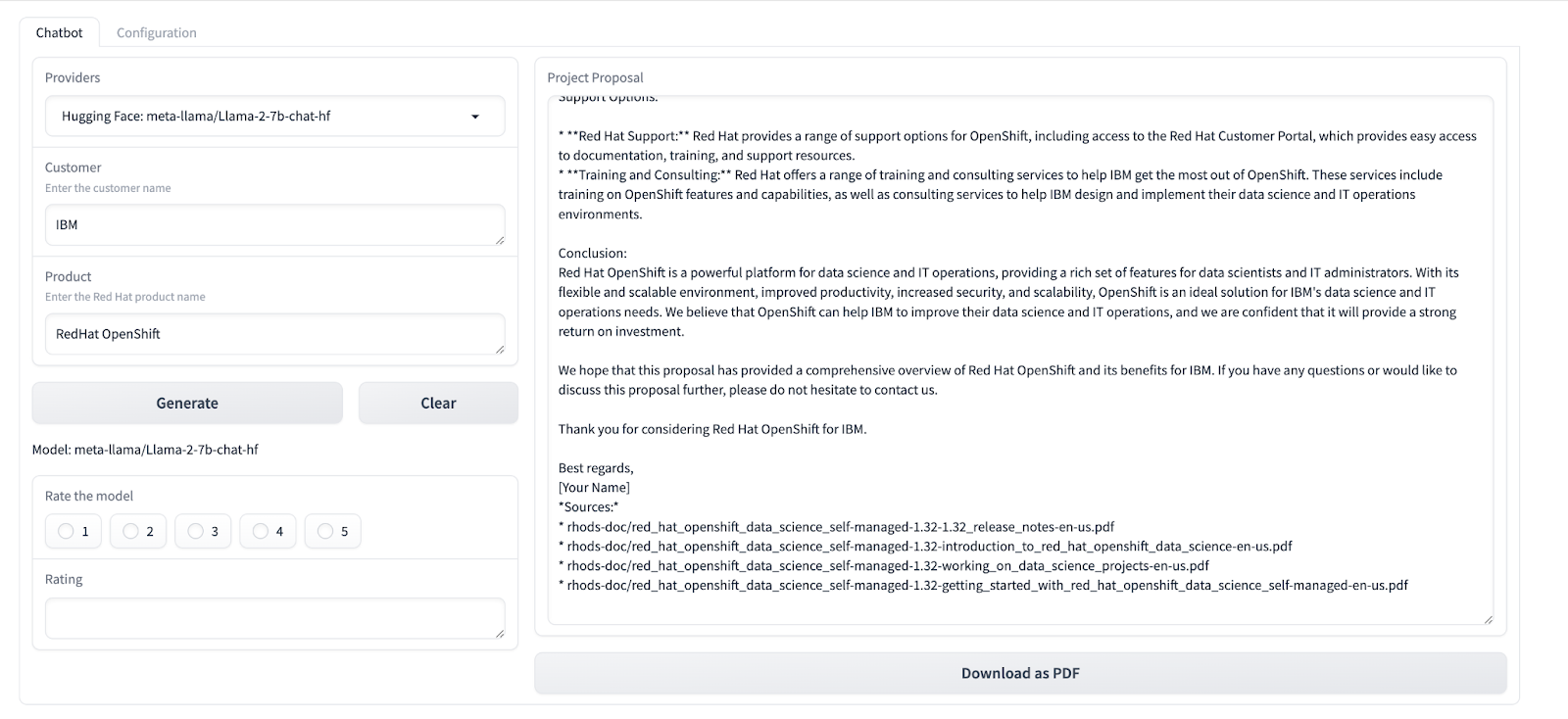

| - Enter ‘IBM’ as the customer name and ‘RedHat OpenShift’ as the product, then click Generate. A project proposal should be generated. | ||

| - Click on Ratings to rate the model. | ||

| - Verify Grafana and Prometheus are installed correctly. | ||

| - By default, the Grafana application is deployed in the llm-monitoring namespace. To launch the Grafana Dashboard, follow the instructions below: | ||

| - Get the credentials of the Grafana application | ||

| - Navigate to Workloads --> Secrets | ||

| - Click on the grafana-admin-credentials secret and copy the `GF_SECURITY_ADMIN_USER` and `GF_SECURITY_ADMIN_PASSWORD` values | ||

| - Launch Grafana Dashboard | ||

| - Navigate to Networking --> Routes in the llm-monitoring namespace. | ||

| - Click on the Location link for grafana-route. | ||

| - Enter the Grafana admin credentials. | ||

| - Ratings are displayed for each model | ||

| - Grafana Dashboard is displayed | ||
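When the grafana-admin-credentials secret is read through the API rather than the console (for example with `oc get secret grafana-admin-credentials -n llm-monitoring -o json`), the values under `data` are base64-encoded and must be decoded before they can be entered on the Grafana login page. The following is a minimal Python sketch, assuming the secret JSON has been parsed into a dict; the function name `decode_grafana_credentials` is illustrative.

```python
import base64


def decode_grafana_credentials(
    secret,
    keys=("GF_SECURITY_ADMIN_USER", "GF_SECURITY_ADMIN_PASSWORD"),
):
    """Decode the base64 values of the given keys from a Secret manifest."""
    data = secret.get("data", {})
    missing = [k for k in keys if k not in data]
    if missing:
        raise KeyError(f"secret is missing keys: {missing}")
    return {k: base64.b64decode(data[k]).decode("utf-8") for k in keys}


# Hypothetical secret with placeholder credentials, encoded as the API returns them.
sample_secret = {"data": {
    "GF_SECURITY_ADMIN_USER": base64.b64encode(b"example-user").decode("ascii"),
    "GF_SECURITY_ADMIN_PASSWORD": base64.b64encode(b"example-password").decode("ascii"),
}}
credentials = decode_grafana_credentials(sample_secret)
```

Avoid printing or logging the decoded password when running a check like this in CI.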
