
Showing 1 changed file with 16 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,16 @@ | ||

| # A Brief Introduction to OpenMP | ||

| A parallel system is an architecture in which a problem is broken into several smaller subproblems that are solved at the same time on multiple processors or machines. The goal of parallelization is to reduce the total runtime of a program. | ||

|

|

||

| This guide focuses on OpenMP, a high-level API that makes parallelizing sequential code simpler. | ||

|

|

||

| ## Prerequisites | ||

| This guide assumes that readers are familiar with concurrency (e.g. threads and synchronization). If you are not familiar with these topics, we recommend the following resources: | ||

| - [Threads](https://en.wikipedia.org/wiki/Thread_(computing)) | ||

| - [OSTEP Chapter 26](https://pages.cs.wisc.edu/~remzi/OSTEP/threads-intro.pdf) | ||

|

|

||

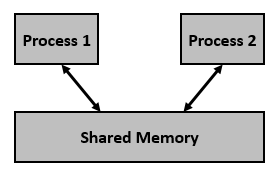

| ## Shared Memory | ||

| In order to parallelize a program, threads or processes must communicate with each other. In a distributed system, they communicate by passing messages across a network. This guide instead focuses on parallelism on a single machine (i.e. one or more processors, each with one or more cores, connected through shared memory). At a high level, this means all processors have access to the same physical memory. | ||

|

|

||

|  | ||

|

|

||

| With shared memory, threads or processes can communicate with each other implicitly by reading and writing the same memory locations. While libraries like pthreads enable us to parallelize code using the shared memory paradigm, OpenMP is a higher-level abstraction that makes writing parallel code simpler (as seen in later examples). In particular, only minimal changes to the sequential code are needed to achieve parallelism. |