This repo is a collection of implementations of many GANs. To make the code easy to read and follow, I keep each implementation minimal and run all of them on the same MNIST dataset.

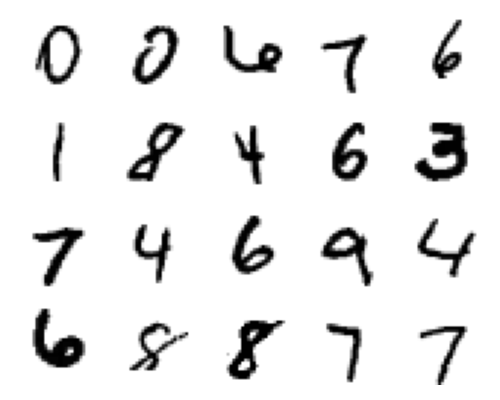

What does the MNIST data look like?

Toy implementations are organized as follows:

1. Base Method

2. Loss or Structure Modifications

   - Least Squares GAN (LSGAN)

   - Wasserstein GAN (WGAN)

   - Self-Attention GAN (SAGAN)

   - Progressive-Growing GAN (PGGAN)

3. Can be Conditional

4. Image to Image Transformation
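To make the "Loss or Structure Modifications" group concrete, here is a minimal pure-Python sketch of how the discriminator and generator objectives differ between the base GAN, LSGAN and WGAN. It is not taken from this repo: the function names are hypothetical, the inputs are assumed to be raw discriminator/critic outputs for a batch of real and fake images, the base GAN generator is assumed to use the common non-saturating loss, and LSGAN is assumed to use targets of 1 for real and 0 for fake.

```python
import math


def mean(values):
    """Arithmetic mean of a non-empty list of floats."""
    return sum(values) / len(values)


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def gan_losses(real_logits, fake_logits):
    """Base GAN: binary cross-entropy with D = sigmoid(logit).

    Uses -log(sigmoid(x)) = softplus(-x) and -log(1 - sigmoid(x)) = softplus(x).
    The generator loss is the non-saturating form -log D(G(z)) (an assumption).
    """
    d_loss = mean([softplus(-r) for r in real_logits]) + mean([softplus(f) for f in fake_logits])
    g_loss = mean([softplus(-f) for f in fake_logits])
    return d_loss, g_loss


def lsgan_losses(real_scores, fake_scores):
    """LSGAN: least-squares loss on raw scores, targets 1 (real) and 0 (fake)."""
    d_loss = 0.5 * mean([(r - 1.0) ** 2 for r in real_scores]) + 0.5 * mean([f ** 2 for f in fake_scores])
    # The generator pushes fake scores toward the "real" target 1.
    g_loss = 0.5 * mean([(f - 1.0) ** 2 for f in fake_scores])
    return d_loss, g_loss


def wgan_losses(real_scores, fake_scores):
    """WGAN: the critic maximizes E[D(x)] - E[D(G(z))], written here as a loss to minimize.

    A real WGAN also needs a Lipschitz constraint on the critic (weight clipping
    or a gradient penalty); that part is omitted in this sketch.
    """
    d_loss = mean(fake_scores) - mean(real_scores)
    g_loss = -mean(fake_scores)
    return d_loss, g_loss


# Compare the three objectives on the same (made-up) batch of scores.
real = [2.0, 1.5, 3.0]
fake = [-1.0, 0.5, -2.0]
for name, fn in [("GAN", gan_losses), ("LSGAN", lsgan_losses), ("WGAN", wgan_losses)]:
    d, g = fn(real, fake)
    print(f"{name}: d_loss={d:.4f} g_loss={g:.4f}")
```

The structure of the training loop stays the same across these variants; only the two loss functions change, which is why they can share one MNIST setup. Note that the WGAN loss is unbounded and only meaningful once the critic is constrained.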

$ git clone https://github.com/MorvanZhou/mnistGANs

$ cd mnistGANs/

$ pip3 install -r requirements.txt

Reference papers:

- DCGAN: Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks

- LSGAN: Least Squares Generative Adversarial Networks

- WGAN-GP: Improved Training of Wasserstein GANs

- WGAN-div: Wasserstein Divergence for GANs

- SAGAN: Self-Attention Generative Adversarial Networks

- PGGAN: Progressive Growing of GANs for Improved Quality, Stability, and Variation

- CGAN: Conditional Generative Adversarial Nets

- ACGAN: Conditional Image Synthesis with Auxiliary Classifier GANs

- InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets

- StyleGAN: A Style-Based Generator Architecture for Generative Adversarial Networks

- CCGAN: Semi-Supervised Learning with Context-Conditional Generative Adversarial Networks

- Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks

- CycleGAN: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

- SRGAN: Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network
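Several of the methods above are conditional. In Conditional Generative Adversarial Nets, the class label y is fed to the networks together with their usual input; for MNIST a simple way to do this is to concatenate a one-hot encoding of the digit to the noise vector z. The pure-Python sketch below only builds such generator inputs. The helper names, the 10-class setup and the noise size are illustrative assumptions, not this repo's code.

```python
import random


def one_hot(label, num_classes=10):
    """Encode an integer class label (e.g. an MNIST digit) as a one-hot list."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label {label} out of range for {num_classes} classes")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


def sample_noise(dim, rng):
    """Draw a standard-normal noise vector z of length dim."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def conditional_generator_input(labels, noise_dim=100, num_classes=10, seed=0):
    """Build CGAN-style generator inputs: the list z + one_hot(y) for each label y."""
    rng = random.Random(seed)  # seeded so the same labels give the same inputs
    return [sample_noise(noise_dim, rng) + one_hot(y, num_classes) for y in labels]


# Inputs that would ask a (hypothetical) generator for the digits 3 and 7.
batch = conditional_generator_input([3, 7], noise_dim=4)
for row in batch:
    print([round(v, 2) for v in row])
```

The discriminator is conditioned in the same spirit, for example by concatenating the same one-hot vector to its (flattened) image input, so it judges whether an image is a realistic instance of the requested digit.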