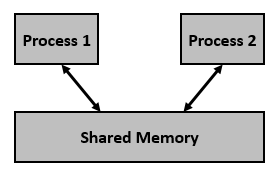

From b1a5e38a4c023b8a9bf7c50c238e25f22e969b8c Mon Sep 17 00:00:00 2001
From: maxkmy <maxkoh03@gmail.com>
Date: Sun, 17 Mar 2024 16:48:38 -0400
Subject: [PATCH 1/2] add: openMP introduction

---
 Topics/Tech_Stacks/openMP.md | 14 ++++++++++++++
 1 file changed, 14 insertions(+)
 create mode 100644 Topics/Tech_Stacks/openMP.md

diff --git a/Topics/Tech_Stacks/openMP.md b/Topics/Tech_Stacks/openMP.md
new file mode 100644
index 000000000..05277a096
--- /dev/null
+++ b/Topics/Tech_Stacks/openMP.md
@@ -0,0 +1,14 @@
+# A Brief Introduction to OpenMP
+A parallel system is an architecture where a problem is broken into several smaller problems for multiple processors or machines. The goal of parallelization is to reduce the total runtime of a program.
+
+This guide mainly focuses on OpenMP, a high-level API that makes it simpler to parallelize sequential code.
+
+## Prerequisites
+The following guide assumes that readers are familiar with concurrency (e.g., threads and synchronization).
+
+## Shared Memory
+In order to parallelize a program, threads or processes must communicate with each other. In a distributed system, they do this by passing messages across a network. However, we focus on parallelism on a single machine (i.e., one or more processors, each with one or more cores, connected through shared memory). At a high level, this simply means that all processors have access to the same physical memory.
+
+
+
+With shared memory, threads or processes communicate implicitly: one writes to a memory location and another reads from it. While libraries like pthreads let us parallelize code using the shared-memory paradigm, OpenMP is a higher-level abstraction that makes writing parallel code simpler (as seen in later examples). In particular, only minimal changes to the sequential code are needed to achieve parallelism.
\ No newline at end of file

From 3a7ba8b01d986cd4c582e75ec555d15203063c8b Mon Sep 17 00:00:00 2001
From: maxkmy <maxkoh03@gmail.com>
Date: Sun, 17 Mar 2024 16:54:06 -0400
Subject: [PATCH 2/2] add: resources to learn concurrency and threads

---
 Topics/Tech_Stacks/openMP.md | 4 +++-
 1 file changed, 3 insertions(+), 1 deletion(-)

diff --git a/Topics/Tech_Stacks/openMP.md b/Topics/Tech_Stacks/openMP.md
index 05277a096..f70d7be93 100644
--- a/Topics/Tech_Stacks/openMP.md
+++ b/Topics/Tech_Stacks/openMP.md
@@ -4,7 +4,9 @@ A parallel system is an architecture where a problem is broken into several smal
 This guide mainly focuses on OpenMP, a high-level API that makes it simpler to parallelize sequential code.
 
 ## Prerequisites
-The following guide assumes that readers are familiar with concurrency (e.g., threads and synchronization).
+The following guide assumes that readers are familiar with concurrency (e.g., threads and synchronization). If you are not familiar with these topics, we recommend checking out the following resources:
+- [Threads](https://en.wikipedia.org/wiki/Thread_(computing))
+- [OSTEP Chapter 26](https://pages.cs.wisc.edu/~remzi/OSTEP/threads-intro.pdf)
 
 ## Shared Memory
 In order to parallelize a program, threads or processes must communicate with each other. In a distributed system, they do this by passing messages across a network. However, we focus on parallelism on a single machine (i.e., one or more processors, each with one or more cores, connected through shared memory). At a high level, this simply means that all processors have access to the same physical memory.
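The Shared Memory section says OpenMP needs only minimal changes to sequential code. As a minimal sketch of what that looks like, assuming a C compiler with OpenMP support (for example GCC or Clang invoked with `-fopenmp`), the two functions below compute the same sum. The function names `sum_of_squares_seq` and `sum_of_squares_omp` are hypothetical and chosen only for illustration; the parallel version differs from the sequential one by a single `#pragma omp parallel for` line.

```c
/* Hypothetical example: sequential sum of i*i for i in [0, n). */
long long sum_of_squares_seq(long long n) {
    long long total = 0;
    for (long long i = 0; i < n; i++) {
        total += i * i;
    }
    return total;
}

/* Hypothetical example: the same loop parallelized with OpenMP.
 * `parallel for` divides the loop iterations among a team of threads
 * that all share this process's memory. Because `total` is shared,
 * `reduction(+:total)` gives each thread a private copy and adds the
 * copies together when the loop finishes, avoiding a data race.
 * If the compiler is not given -fopenmp, the pragma is ignored and
 * the loop simply runs sequentially with the same result. */
long long sum_of_squares_omp(long long n) {
    long long total = 0;
    #pragma omp parallel for reduction(+:total)
    for (long long i = 0; i < n; i++) {
        total += i * i;
    }
    return total;
}
```

Built with, e.g., `gcc -fopenmp`, both functions should return the same value for the same `n`. The `reduction` clause is the key design choice here: dropping it would leave every thread updating the shared `total` concurrently, which is exactly the kind of synchronization problem listed under the prerequisites.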