diff --git a/docs/no_toc/01-Fundamentals.md b/docs/no_toc/01-Fundamentals.md

index fe8e77e..2611821 100644

--- a/docs/no_toc/01-Fundamentals.md

+++ b/docs/no_toc/01-Fundamentals.md

@@ -4,11 +4,11 @@

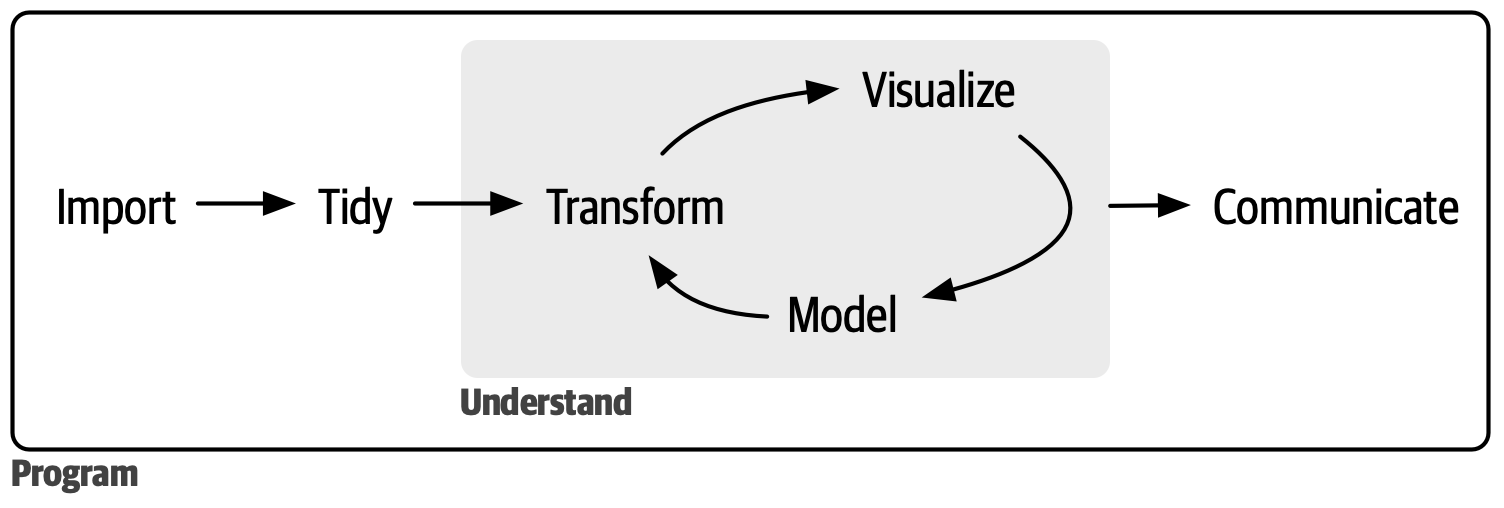

## Goals of this course

-- Continue building *programming fundamentals*: how to make use of complex data structures, use custom functions built by other R users, and creating your own functions. How to iterate repeated tasks that scales naturally.

+- Continue building *programming fundamentals*: How to use complex data structures, use and create custom functions, and how to iterate repeated tasks

- Continue exploration of *data science fundamentals*: how to clean messy data to a Tidy form for analysis.

-- Outcome: Conduct a full analysis in the data science workflow (minus model).

+- At the end of the course, you will be able to: conduct a full analysis in the data science workflow (minus model).

{width="450"}

@@ -428,7 +428,7 @@ l1$score

Therefore, `l1$score` is the same as `l1[[4]]` and is the same as `l1[["score"]]`.

-A dataframe is just a named list of vectors of same length with **attributes** of (column) `names` and `row.names`.

+A dataframe is just a named list of vectors of same length with additional **attributes** of (column) `names` and `row.names`.

## Matrix

@@ -475,3 +475,7 @@ my_matrix[2, 3]

```

## [1] 6

```

+

+## Exercises

+

+You can find [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252), or on [GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/02-Data_cleaning_1.md b/docs/no_toc/02-Data_cleaning_1.md

index f5c9180..a90d9a0 100644

--- a/docs/no_toc/02-Data_cleaning_1.md

+++ b/docs/no_toc/02-Data_cleaning_1.md

@@ -158,7 +158,7 @@ grade2 = if_else(grade > 60, TRUE, FALSE)

3. If-else_if-else

-```

+```

grade3 = case_when(grade >= 90 ~ "A",

grade >= 80 ~ "B",

grade >= 70 ~ "C",

@@ -199,7 +199,7 @@ simple_df2 = mutate(simple_df, grade = ifelse(grade > 60, TRUE, FALSE))

3. If-else_if-else

-```

+```

simple_df3 = simple_df

simple_df3$grade = case_when(simple_df3$grade >= 90 ~ "A",

@@ -211,8 +211,10 @@ simple_df3$grade = case_when(simple_df3$grade >= 90 ~ "A",

or

-```

-simple_df3 = mutate(simple_df, grade = case_when(grade >= 90 ~ "A",

+```

+simple_df3 = simple_df

+

+simple_df3 = mutate(simple_df3, grade = case_when(grade >= 90 ~ "A",

grade >= 80 ~ "B",

grade >= 70 ~ "C",

grade >= 60 ~ "D",

@@ -244,7 +246,7 @@ if(expression_is_TRUE) {

3. If-else_if-else:

```

-if(expression_A_is_TRUE)

+if(expression_A_is_TRUE) {

#code goes here

}else if(expression_B_is_TRUE) {

#other code goes here

@@ -299,3 +301,7 @@ result

```

## [1] 5

```

+

+## Exercises

+

+You can find [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252), or on [GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/03-Data_cleaning_2.md b/docs/no_toc/03-Data_cleaning_2.md

index e2fd999..77397cf 100644

--- a/docs/no_toc/03-Data_cleaning_2.md

+++ b/docs/no_toc/03-Data_cleaning_2.md

@@ -1,14 +1,13 @@

# Data Cleaning, Part 2

-

```r

library(tidyverse)

```

## Tidy Data

-It is important to have standard of organizing data, as it facilitates a consistent way of thinking about data organization and building tools (functions) that make use of that standard. The principles of **Tidy data**, developed by Hadley Wickham:

+It is important to have standard of organizing data, as it facilitates a consistent way of thinking about data organization and building tools (functions) that make use of that standard. The [principles of **Tidy data**](https://vita.had.co.nz/papers/tidy-data.html), developed by Hadley Wickham:

1. Each variable must have its own column.

@@ -221,7 +220,7 @@ ggplot(df) + aes(x = Q1_Sales, y = Q2_Sales, color = Store) + geom_point()

## Subjectivity in Tidy Data

-We have looked at clear cases of when a dataset is Tidy. In reality, the Tidy state depends on what we call variables and observations.

+We have looked at clear cases of when a dataset is Tidy. In reality, the Tidy state depends on what we call variables and observations. Consider this example, inspired by the following [blog post](https://kiwidamien.github.io/what-is-tidy-data.html) by Damien Martin.

```r

@@ -316,8 +315,6 @@ ggplot(kidney_long_still) + aes(x = treatment, y = recovery_rate, fill = stone_s

-## References

-

-https://vita.had.co.nz/papers/tidy-data.html

+## Exercises

-https://kiwidamien.github.io/what-is-tidy-data.html

+You can find [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252), or on [GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/04-Functions.md b/docs/no_toc/04-Functions.md

index f04bc37..dfa9c92 100644

--- a/docs/no_toc/04-Functions.md

+++ b/docs/no_toc/04-Functions.md

@@ -14,7 +14,7 @@ Some advice on writing functions:

- A function should do only one, well-defined task.

-### Anatomy of a function definition

+## Anatomy of a function definition

*Function definition consists of assigning a **function name** with a "function" statement that has a comma-separated list of named **function arguments**, and a **return expression**. The function name is stored as a variable in the global environment.*

@@ -34,13 +34,13 @@ With function definitions, not all code runs from top to bottom. The first four

When the function is called in line 5, the variables for the arguments are reassigned to function arguments to be used within the function and helps with the modular form. We need to introduce the concept of local and global environments to distinguish variables used only for a function from variables used for the entire program.

-### Local and global environments

+## Local and global environments

*{ } represents variable scoping: within each { }, if variables are defined, they are stored in a **local environment**, and is only accessible within { }. All function arguments are stored in the local environment. The overall environment of the program is called the **global environment** and can be also accessed within { }.*

The reason of having some of this "privacy" in the local environment is to make functions modular - they are independent little tools that should not interact with the rest of the global environment. Imagine someone writing a tool that they want to give someone else to use, but the tool depends on your environment, vice versa.

-### A step-by-step example

+## A step-by-step example

Using the `addFunction` function, let's see step-by-step how the R interpreter understands our code:

@@ -52,7 +52,7 @@ Using the `addFunction` function, let's see step-by-step how the R interpreter u

-### Function arguments create modularity

+## Function arguments create modularity

First time writers of functions might ask: why are variables we use for the arguments of a function *reassigned* for function arguments in the local environment? Here is an example when that process is skipped - what are the consequences?

@@ -81,7 +81,7 @@ Here is the execution for `w`:

The function did not work as expected because we used hard-coded variables from the global environment and not function argument variables unique to the function use!

-### Exercises

+## Examples

- Create a function, called `add_and_raise_power` in which the function takes in 3 numeric arguments. The function computes the following: the first two arguments are added together and raised to a power determined by the 3rd argument. The function returns the resulting value. Here is a use case: `add_and_raise_power(1, 2, 3) = 27` because the function will return this expression: `(1 + 2) ^ 3`. Another use case: `add_and_raise_power(3, 1, 2) = 16` because of the expression `(3 + 1) ^ 2`. Confirm with that these use cases work. Can this function used for numeric vectors?

@@ -114,7 +114,16 @@ The function did not work as expected because we used hard-coded variables from

## [1] 344 8

```

-- Create a function, called `medicaid_eligible` in which the function takes in one argument: a numeric vector called `age`. The function returns a numeric vector with the same length as `age`, in which elements are `0` for indicies that are less than 65 in `age`, and `1` for indicies 65 or higher in `age`. Use cases: `medicaid_eligible(c(30, 70)) = c(0, 1)`

+- Create a function, called `num_na` in which the function takes in any vector, and then return a single numeric value. This numeric value is the number of `NA`s in the vector. Use cases: `num_na(c(NA, 2, 3, 4, NA, 5)) = 2` and `num_na(c(2, 3, 4, 5)) = 0`. Hint 1: Use `is.na()` function. Hint 2: Given a logical vector, you can count the number of `TRUE` values by using `sum()`, such as `sum(c(TRUE, TRUE, FALSE)) = 2`.

+

+

+ ```r

+ num_na = function(x) {

+ return(sum(is.na(num_na)))

+ }

+ ```

+

+- Create a function, called `medicaid_eligible` in which the function takes in one argument: a numeric vector called `age`. The function returns a numeric vector with the same length as `age`, in which elements are `0` for indicies that are less than 65 in `age`, and `1` for indicies 65 or higher in `age`. (Hint: This is a data recoding problem!) Use cases: `medicaid_eligible(c(30, 70)) = c(0, 1)`

```r

@@ -130,3 +139,7 @@ The function did not work as expected because we used hard-coded variables from

```

## [1] 0 1

```

+

+## Exercises

+

+You can find [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252), or on [GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/05-Iteration.md b/docs/no_toc/05-Iteration.md

new file mode 100644

index 0000000..6effb67

--- /dev/null

+++ b/docs/no_toc/05-Iteration.md

@@ -0,0 +1,324 @@

+# Iteration

+

+Suppose that you want to repeat a chunk of code many times, but changing one variable's value each time you do it. This could be modifying each element of a vector with the same operation, or analyzing a dataframe with different parameters.

+

+There are three common strategies to go about this:

+

+1. Copy and paste the code chunk, and change that variable's value. Repeat. *This can be a starting point in your analysis, but will lead to errors easily.*

+2. Use a `for` loop to repeat the chunk of code, and let it loop over the changing variable's value. *This is popular for many programming languages, but the R programming culture encourages a functional way instead*.

+3. **Functionals** allow you to take a function that solves the problem for a single input and generalize it to handle any number of inputs. *This is very popular in R programming culture.*

+

+## For loops

+

+A `for` loop repeats a chunk of code many times, once for each element of an input vector.

+

+```

+for (my_element in my_vector) {

+ chunk of code

+}

+```

+

+Most often, the "chunk of code" will make use of `my_element`.

+

+#### We can loop through the indicies of a vector

+

+The function `seq_along()` creates the indicies of a vector. It has almost the same properties as `1:length(my_vector)`, but avoids issues when the vector length is 0.

+

+

+```r

+my_vector = c(1, 3, 5, 7)

+

+for(i in seq_along(my_vector)) {

+ print(my_vector[i])

+}

+```

+

+```

+## [1] 1

+## [1] 3

+## [1] 5

+## [1] 7

+```

+

+#### Alternatively, we can loop through the elements of a vector

+

+

+```r

+for(vec_i in my_vector) {

+ print(vec_i)

+}

+```

+

+```

+## [1] 1

+## [1] 3

+## [1] 5

+## [1] 7

+```

+

+#### Another example via indicies

+

+

+```r

+result = rep(NA, length(my_vector))

+for(i in seq_along(my_vector)) {

+ result[i] = log(my_vector[i])

+}

+```

+

+## Functionals

+

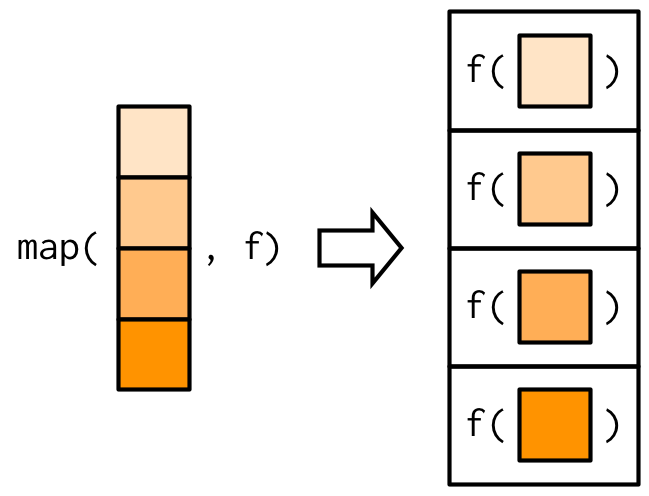

+A **functional** is a function that takes in a data structure and function as inputs and applies the function on the data structure, element by element. It *maps* your input data structure to an output data structure based on the function. It encourages the usage of modular functions in your code.

+

+

+

+Or,

+

+{width="250"}

+

+We will use the `purrr` package in `tidyverse` to use functionals.

+

+`map()` takes in a vector or a list, and then applies the function on each element of it. The output is *always* a list.

+

+

+

+

+```r

+my_vector = c(1, 3, 5, 7)

+map(my_vector, log)

+```

+

+```

+## [[1]]

+## [1] 0

+##

+## [[2]]

+## [1] 1.098612

+##

+## [[3]]

+## [1] 1.609438

+##

+## [[4]]

+## [1] 1.94591

+```

+

+Lists are useful if what you are using it on requires a flexible data structure.

+

+To be more specific about the output type, you can do this via the `map_*` function, where `*` specifies the output type: `map_lgl()`, `map_chr()`, and `map_dbl()` functions return vectors of logical values, strings, or numbers respectively.

+

+For example, to make sure your output is a double (numeric):

+

+

+```r

+map_dbl(my_vector, log)

+```

+

+```

+## [1] 0.000000 1.098612 1.609438 1.945910

+```

+

+All of these are toy examples that gets us familiar with the syntax, but we already have built-in functions to solve these problems, such as `log(my_vector)`. Let's see some real-life case studies.

+

+## Case studies

+

+### 1. Loading in multiple files.

+

+Suppose that we want to load in a few dataframes, and store them in a list of dataframes for analysis downstream.

+

+We start with the filepaths we want to load in as dataframes.

+

+

+```r

+paths = c("classroom_data/students.csv", "classroom_data/CCLE_metadata.csv")

+```

+

+The function we want to use to load the data in will be `read_csv()`.

+

+Let's practice writing out one iteration:

+

+

+```r

+result = read_csv(paths[1])

+```

+

+#### To do this functionally, we think about:

+

+- What variable we need to loop through: `paths`

+

+- The repeated task as a function: `read_csv()`

+

+- The looping mechanism, and its output: `map()` outputs lists.

+

+

+```r

+loaded_dfs = map(paths, read_csv)

+```

+

+#### To do this with a for loop, we think about:

+

+- What variable we need to loop through: `paths`.

+

+- Do we need to store the outcome of this loop in a data structure? Yes, a list.

+

+- At each iteration, what are we doing? Use `read_csv()` on the current element, and store it in the output list.

+

+

+```r

+paths = c("classroom_data/students.csv", "classroom_data/CCLE_metadata.csv")

+loaded_dfs = vector(mode = "list", length = length(paths))

+for(i in seq_along(paths)) {

+ df = read_csv(paths[i])

+ loaded_dfs[[i]] = df

+}

+```

+

+### 2. Analyze a dataframe with different parameters.

+

+Suppose you are working with the `penguins` dataframe:

+

+

+```r

+library(palmerpenguins)

+head(penguins)

+```

+

+```

+## # A tibble: 6 × 8

+## species island bill_length_mm bill_depth_mm flipper_length_mm body_mass_g

+##

+## 1 Adelie Torgersen 39.1 18.7 181 3750

+## 2 Adelie Torgersen 39.5 17.4 186 3800

+## 3 Adelie Torgersen 40.3 18 195 3250

+## 4 Adelie Torgersen NA NA NA NA

+## 5 Adelie Torgersen 36.7 19.3 193 3450

+## 6 Adelie Torgersen 39.3 20.6 190 3650

+## # ℹ 2 more variables: sex , year

+```

+

+and you want to look at the mean `bill_length_mm` for each of the three species (Adelie, Chinstrap, Gentoo).

+

+Let's practice writing out one iteration:

+

+

+```r

+species_to_analyze = c("Adelie", "Chinstrap", "Gentoo")

+penguins_subset = filter(penguins, species == species_to_analyze[1])

+mean(penguins_subset$bill_length_mm, na.rm = TRUE)

+```

+

+```

+## [1] 38.79139

+```

+

+#### To do this functionally, we think about:

+

+- What variable we need to loop through: `c("Adelie", "Chinstrap", "Gentoo")`

+

+- The repeated task as a function: a custom function that takes in a specie of interest. The function filters the rows of `penguins` to the species of interest, and compute the mean of `bill_length_mm`.

+

+- The looping mechanism, and its output: `map_dbl()` outputs (double) numeric vectors.

+

+

+```r

+analysis = function(current_species) {

+ penguins_subset = dplyr::filter(penguins, species == current_species)

+ return(mean(penguins_subset$bill_length_mm, na.rm=TRUE))

+}

+

+map_dbl(c("Adelie", "Chinstrap", "Gentoo"), analysis)

+```

+

+```

+## [1] 38.79139 48.83382 47.50488

+```

+

+#### To do this with a for loop, we think about:

+

+- What variable we need to loop through: `c("Adelie", "Chinstrap", "Gentoo")`.

+

+- Do we need to store the outcome of this loop in a data structure? Yes, a numeric vector.

+

+- At each iteration, what are we doing? Filter the rows of `penguins` to the species of interest, and compute the mean of `bill_length_mm`.

+

+

+```r

+outcome = rep(NA, length(species_to_analyze))

+for(i in seq_along(species_to_analyze)) {

+ penguins_subset = filter(penguins, species == species_to_analyze[i])

+ outcome[i] = mean(penguins_subset$bill_length_mm, na.rm=TRUE)

+}

+outcome

+```

+

+```

+## [1] 38.79139 48.83382 47.50488

+```

+

+### 3. Calculate summary statistics on columns of a dataframe.

+

+Suppose that you are interested in the numeric columns of the `penguins` dataframe.

+

+

+```r

+penguins_numeric = penguins %>% select(bill_length_mm, bill_depth_mm, flipper_length_mm, body_mass_g)

+```

+

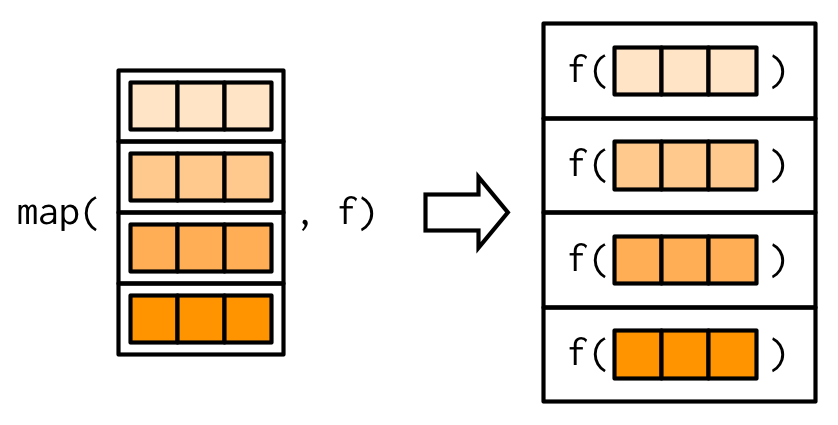

+and you are interested to look at the mean of each column. It is very helpful to interpret the dataframe `penguins_numeric` as a *list*, iterating through each column as an element of a list.

+

+Let's practice writing out one iteration:

+

+

+```r

+mean(penguins_numeric[[1]], na.rm = TRUE)

+```

+

+```

+## [1] 43.92193

+```

+

+#### To do this functionally, we think about:

+

+- What variable we need to loop through: the list `penguins_numeric`

+

+- The repeated task as a function: `mean()` with the argument `na.rm = TRUE`.

+

+- The looping mechanism, and its output: `map_dbl()` outputs (double) numeric vectors.

+

+

+```r

+map_dbl(penguins_numeric, mean, na.rm = TRUE)

+```

+

+```

+## bill_length_mm bill_depth_mm flipper_length_mm body_mass_g

+## 43.92193 17.15117 200.91520 4201.75439

+```

+

+Here, R is interpreting the dataframe `penguins_numeric` as a *list*, iterating through each column as an element of a list:

+

+{width="300"}

+

+#### To do this with a for loop, we think about:

+

+- What variable we need to loop through: the elements of `penguins_numeric` as a list.

+

+- Do we need to store the outcome of this loop in a data structure? Yes, a numeric vector.

+

+- At each iteration, what are we doing? Compute the mean of an element of `penguins_numeric`.

+

+

+```r

+result = rep(NA, ncol(penguins_numeric))

+for(i in seq_along(penguins_numeric)) {

+ result[i] = mean(penguins_numeric[[i]], na.rm = TRUE)

+}

+result

+```

+

+```

+## [1] 43.92193 17.15117 200.91520 4201.75439

+```

+

+## Exercises

+

+You can find [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252), or on [GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/404.html b/docs/no_toc/404.html

index 4da1576..41eb66e 100644

--- a/docs/no_toc/404.html

+++ b/docs/no_toc/404.html

@@ -138,6 +138,7 @@

5.1 Part 1: Looking at documentation to load in data

-

Suppose that you want to load in data “students.csv” in a CSV format, and you don’t know what tools to use. You decide to see whether the package “readr” can be useful to solve your problem. Where should you look?

-

All R packages must be stored on CRAN (Comprehensive R Archive Network), and all packages have a website that points to the reference manual (what is pulled up using the ? command), source code, vignettes examples, and dependencies on other packages. Here is the website for “readr”.

-

In the package, you find some potential functions for importing your data:

-

-

read_csv("file.csv") for comma-separated files

-

read_tsv("file.tsv") for tab-deliminated files

-

read_excel("example.xlsx") for excel files

-

read_excel("example.xlsx", sheet = "sheet1") for excel files with a sheet name

-

read_delim() for general-deliminated files, such as: read_delim("file.csv", sep = ",").

-

-

After looking at the vignettes, it seems that read_csv() is a function to try.

-

Let’s look at the read_csv() function documentation, which can be accessed via ?read_csv.

We see that the only required argument is the file variable, which is documented to be “Either a path to a file, a connection, or literal data (either a single string or a raw vector).” All the other arguments are considered optional, because they have a pre-allocated value in the documentation.

-

Load in “students.csv” via read_csv() function as a dataframe variable students and take a look at its contents via View().

-

library(tidyverse)

-

## Warning: package 'tidyverse' was built under R version 4.0.3

Something looks weird here. There is only one column, and it seems that the first two entries start with “#”, and don’t fit a CSV file format. These first two entries that start with “#” likely are comments giving metadata about the file, and they should be ignore when loading in the data.

-

Let’s try again. Take a look at the documentation for the comment argument and give it a character value "#" with this situation. Any text after the comment characters will be silently ignored.

-

The column names are not very consistent . Take a look at the documentation for the col_names argument and give it a value of c("student_id", "full_name", "favorite_food", "meal_plan", "age").

-

Alternatively, you could have loaded the data in without col_names option and modified the column names by accessing names(students).

-

For more information on loading in data, see this chapter of R for Data Science.

-

-

-

5.2 Part 2: Recoding data: warm-up

-

Consider this vector:

-

scores =c(23, 46, -3, 5, -1)

-

Recode scores so that all the negative values are 0.

-

Let’s look at the values of students dataframe more carefully. We will do some recoding on this small dataframe. It may feel trivial because you could do this by hand in Excel, but this is a practice on how we can scale this up with larger datasets!

-

Notice that some of the elements of this dataframe has proper NA values and also a character “N/A”. We want “N/A” to be a proper NA value.

-

Recode “N/A” to NA in the favorite_food column:

-

Recode “five” to 5 in the age column:

-

Create a new column age_category so that it has value “toddler” if age is < 6, and “child” if age is >= 6.

-

(Hint: You can create a new column via mutate, or you can directly refer to the new column via student$``age_category.)

-

Create a new column favorite_food_numeric so that it has value 1 if favorite_food is “Breakfast and lunch”, 2 if “Lunch only”, and 3 if “Dinner only”.

-

-

-

5.3 Part 3: Recoding data in State Cancer Profiles

[State Cancer Profile data] was developed with the idea to provide a geographic profile of cancer burden in the United States and reveal geographic disparities in cancer incidence, mortality, risk factors for cancer, and cancer screening, across different population subgroups.

-

-

In this analysis, we want to examine cancer incidence rates in state of Washington and make some comparisons between groups. The cancer incidence rate can be accessed at this website, once you give input of what kind of incidence data you want to access. If you want to analyze this data in R, it takes a bit of work of exporting the data and loading it into R.

-

To access this data easier in R, DaSL staff built a R package cancerprof to easily load in the data. Let’s look at the package’s documentation to see how to get access to cancer incidence data.

-

Towards the bottom of the documentation are some useful examples to consider as starting point.

-

Load in data about the following population: melanoma incidence in WA at the county level for males of all ages, all cancer stages, averaged in the past 5 years. Store it as a dataframe variable named melanoma_incidence

-

(If you are stuck, you can use the first example in the documentation.)

-

Take a look at the data yourself and explore it.

-

Let’s select a few columns of interest and give them column names that doesn’t contain spaces. We can access column names with spaces via the backtick ` symbol.

-

#uncomment to run!

-

-#melanoma_incidence = select(melanoma_incidence, County, `Age Adjusted Incidence Rate`, `Recent Trend`)

-

-#names(melanoma_incidence) = c("County", "Age_adjusted_incidence_rate", "Recent_trend")

-

Take a look at the column Age_adjusted_incidence_rate. It has missing data coded as “*” (notice the space after *). Recode “*” as NA.

-

Finally, notice that the data type for Age_adjusted_incidence_rate is character, if you run the function is.character() or class() on it. Convert it to a numeric data type.

As you become more independent R programmers, you will spend time learning about new functions on your own. We have gone over the basic anatomy of a function call back in Intro to R, but now let’s go a bit deeper to understand how a function is built and how to call them.

Recall that a function has a function name, input arguments, and a return value.

Function definition consists of assigning a function name with a “function” statement that has a comma-separated list of named function arguments, and a return expression. The function name is stored as a variable in the global environment.

@@ -309,8 +297,8 @@

4.1 Interpreting functions, caref

...: further arguments passed to or from other methods.

Notice that the arguments trim = 0, na.rm = FALSE have default values. This means that these arguments are optional - you should provide it only if you want to. With this understanding, you can use mean() in a new way:

The use of . . . (dot-dot-dot): This is a special argument that allows a function to take any number of arguments. This isn’t very useful for the mean() function, but it makes sense for function such as select() and filter(), and mutate(). For instance, in select(), once you provide your dataframe for the argument .data, you can pile on as many columns to select in the rest of the argument.

Usage:

@@ -329,8 +317,8 @@

4.1 Interpreting functions, caref

select a range of variables.

You will look at the function documentation on your own to see how to deal with more complex cases.

-

-

4.2 Recoding Data / Conditionals

+

+

3.2 Recoding Data / Conditionals

It is often said that 80% of data analysis is spent on the cleaning and preparing data. Today we will start looking at common data cleaning tasks. Suppose that you have a column in your data that needs to be recoded. Since a dataframe’s column, when selected via $, is a vector, let’s start talking about recoding vectors. If we have a numeric vector, then maybe you want to have certain values to be out of bounds, or assign a range of values to a character category. If we have a character vector, then maybe you want to reassign it to a different value.

Here are popular recoding logical scenarios:

@@ -339,17 +327,17 @@

4.2 Recoding Data / Conditionals<

If-else_if-else: “If elements of the vector meets condition A, then they are assigned value X. Else, if the elements of the vector meets condition B, they are assigned value Y. Otherwise, they are assigned value Z.”

Let’s look at a vector of grade values, as an example:

-

grade =c(90, 78, 95, 74, 56, 81, 102)

+

grade =c(90, 78, 95, 74, 56, 81, 102)

If

Instead of having the bracket [ ] notation on the right hand side of the equation, if it is on the left hand side of the equation, then we can modify a subset of the vector.

The 3 common scenarios we looked at for recoding data is closely tied to the concept of conditionals in programming: given certain conditions, you run a specific code chunk. Given a vector’s value, assign it a different value. Or, given a value, run the following hundred lines of code. Here is what it looks like:

If:

@@ -410,7 +400,7 @@

4.3 Conditionals

If-else_if-else:

-

if(expression_A_is_TRUE)

+

if(expression_A_is_TRUE) {

#code goes here

}else if(expression_B_is_TRUE) {

#other code goes here

@@ -419,34 +409,38 @@

4.3 Conditionals

}

The expression that is being tested whether it is TRUEmust be a singular logical value, and not a logical vector. If the latter, see the recoding section for now.

It is important to have standard of organizing data, as it facilitates a consistent way of thinking about data organization and building tools (functions) that make use of that standard. The principles of Tidy data, developed by Hadley Wickham:

+

+

Chapter 4 Data Cleaning, Part 2

+

library(tidyverse)

+

+

4.1 Tidy Data

+

It is important to have standard of organizing data, as it facilitates a consistent way of thinking about data organization and building tools (functions) that make use of that standard. The principles of Tidy data, developed by Hadley Wickham:

Each variable must have its own column.

Each observation must have its own row.

@@ -274,22 +262,22 @@

6.1 Tidy Data

Multiple variables are stored in a single column

After some clear examples, we emphasize that “Tidy” data is subjective to what kind of analysis you want to do with the dataframe.

-

-

6.1.1 1. Columns contain values, rather than variables (Long is tidy)

## Store Year Q1_Sales Q2_Sales Q3_Sales Q4_Sales

## 1 A 2018 55 45 22 50

## 2 B 2018 98 70 60 60

Each observation is a store, and each observation has its own row. That looks good.

The columns “Q1_Sales”, …, “Q4_Sales” seem to be values of a single variable “quarter” of our observation. The values of “quarter” are not in a single column, but are instead in the columns.

## # A tibble: 8 × 4

## Store Year quarter sales

## <chr> <dbl> <chr> <dbl>

@@ -305,20 +293,20 @@

6.1.1 1. Columns contain values,

The new columns “quarter” and “sales” are variables that describes our observation, and describes our values. We’re in a tidy state!

We have transformed our data to a “longer” format, as our observation represents something more granular or detailed than before. Often, the original variables values will repeat itself in a “longer format”. We call the previous state of our dataframe is a “wider” format.

-

-

6.1.2 2. Variables are stored in rows (Wide is tidy)

+

+

4.1.2 2. Variables are stored in rows (Wide is tidy)

Are all tidy dataframes Tidy in a “longer” format?

6.1.2 2. Variables are stored in

## 4 B KRAS_expression 3.9

Here, each observation is a sample’s gene…type? The observation feels awkward because variables are stored in rows. Also, the column “values” contains multiple variable types: gene expression and mutation values that got coerced to numeric!

We are back to our orignal form, and it was already Tidy.

-

-

6.1.3 3. Multiple variables are stored in a single column

-

table3

+

+

4.1.3 3. Multiple variables are stored in a single column

+

table3

## # A tibble: 6 × 3

## country year rate

## * <chr> <int> <chr>

@@ -351,7 +339,7 @@

6.1.3 3. Multiple variables are s

## 5 China 1999 212258/1272915272

## 6 China 2000 213766/1280428583

There seems to be two variables in the numerator and denominator of “rate” column. Let’s separate it.

-

separate(table3, col ="rate", into =c("count", "population"), sep ="/")

+

separate(table3, col ="rate", into =c("count", "population"), sep ="/")

## # A tibble: 6 × 4

## country year count population

## <chr> <int> <chr> <chr>

@@ -363,10 +351,10 @@

6.1.3 3. Multiple variables are s

## 6 China 2000 213766 1280428583

-

-

6.2 Uses of Tidy data

+

+

4.2 Uses of Tidy data

In general, many functions for analysis and visualization in R assumes that the input dataframe is Tidy. These tools assumes the values of each variable fall in their own column vector. For instance, from our first example, we can compare sales across quarters and stores.

-

df_long

+

df_long

## # A tibble: 8 × 4

## Store Year quarter sales

## <chr> <dbl> <chr> <dbl>

@@ -378,21 +366,21 @@

6.2 Uses of Tidy data

## 6 B 2018 Q2_Sales 70

## 7 B 2018 Q3_Sales 60

## 8 B 2018 Q4_Sales 60

-

ggplot(df_long) +aes(x = quarter, y = sales, group = Store) +geom_point() +geom_line()

+

ggplot(df_long) +aes(x = quarter, y = sales, group = Store) +geom_point() +geom_line()

Although in its original state we can also look at sales between quarter, we can only look between two quarters at once. Tidy data encourages looking at data in the most granular scale.

-

ggplot(df) +aes(x = Q1_Sales, y = Q2_Sales, color = Store) +geom_point()

+

ggplot(df) +aes(x = Q1_Sales, y = Q2_Sales, color = Store) +geom_point()

-

-

6.3 Subjectivity in Tidy Data

-

We have looked at clear cases of when a dataset is Tidy. In reality, the Tidy state depends on what we call variables and observations.

We have looked at clear cases of when a dataset is Tidy. In reality, the Tidy state depends on what we call variables and observations. Consider this example, inspired by the following blog post by Damien Martin.

Right now, the kidney dataframe clearly has values of a variable in the column. Let’s try to make it Tidy by making it into a longer form and separating out variables that are together in a column.

The reason why both of these versions seem Tidy is that the columns “recovered” and “failed” can be interpreted as independent variables and values of the variable “treatment”.

Ultimately, we decide which dataframe we prefer based on the analysis we want to do.

For instance, when our observation is about a kidney stone’s treatment’s outcome type, we compare it between outcome type, treatment, and stone size.

-

ggplot(kidney_long) +aes(x = treatment, y = count, fill = outcome) +geom_bar(position="dodge", stat="identity") +facet_wrap(~stone_size)

+

ggplot(kidney_long) +aes(x = treatment, y = count, fill = outcome) +geom_bar(position="dodge", stat="identity") +facet_wrap(~stone_size)

When our observation is about a kidney stone’s treatment’s, we compare a new variable recovery rate ( = recovered / (recovered + failed)) between treatment and stone size.

How do you subset the following vector to the first three elements?

-

measurements =c(2, 4, -1, -3, 2, -1, 10)

-

How do you subset the original vector so that it only has negative values?

-

How do you subset the following vector so that it has no NA values?

-

vec_with_NA =c(2, 4, NA, NA, 3, NA)

-

Consider the following logical vector some_logicals. Convert Logical vector -> Numeric vector -> Character vector in two steps. Check that you are doing this correctly along the way by using the class() function, or is.numeric() and is.character(), on the converted data.

patient =list(

-name =" ",

-age =34,

-pronouns =c("he", "him", "/", "they", "them"),

-vaccines =c("hep-B", "chickenpox", "HPV"),

-visits =NA

-)

-

-visit1 =list(

-symptoms =c("runny nose", "sore throat", "frustration"),

-prescription ="recommended time off from work, rest.",

-date ="1/1/2000"

-)

-

-visit2 =list(

-symptoms =c("fainted", "pale complexion"),

-prescription ="drink water and take time off work.",

-date ="1/1/2001"

-)

-

Access the first element of patient via double brackets [[ ]] and modify it to a value of your choice.

-

Access the named element “pronouns” of patient via double bracket [[ ]] or $ and modify its value so that it doesn’t contain the “/” element. (Use your vector subsetting skills here after you access the appropriate element from the list.)

-

Create a new list all_visits with elements visit1 and visit2. Yes, we’re making lists within lists!

-

Suppose you want to use all_visits to access visit 1’s symptoms. You would continue the double brackets [[ ]] or $ notation: all_visits[[1]] returns a list, so we access the first element of that list via all_visits[[1]][[1]].

How would you use all_visits to access visit 2’s prescription?

-

How would you use all_visits to access visit 2’s symptom element “pale complexion”? Remember, once you access a vector, you would go back to the single bracket [ ] to access its elements.

-

Finally, assign all_visits to patient’s visits.

-

-

3.2.1 Part 3: Dataframes (Lists)

-

A dataframe is just a named list of vectors of same length with attributes of (column) names and row.names.

-

library(palmerpenguins)

-head(penguins)

-

## # A tibble: 6 × 8

-## species island bill_length_mm bill_depth_mm flipper_length_mm body_mass_g

-## <fct> <fct> <dbl> <dbl> <int> <int>

-## 1 Adelie Torgersen 39.1 18.7 181 3750

-## 2 Adelie Torgersen 39.5 17.4 186 3800

-## 3 Adelie Torgersen 40.3 18 195 3250

-## 4 Adelie Torgersen NA NA NA NA

-## 5 Adelie Torgersen 36.7 19.3 193 3450

-## 6 Adelie Torgersen 39.3 20.6 190 3650

-## # ℹ 2 more variables: sex <fct>, year <int>

-

Access the body_mass_g column vector of penguins via the double bracket [[ ]], treating penguins like a list, and compute the mean. Remember to use na.rm = TRUE to remove any NA values: mean(x, na.rm = TRUE)

-

Create a new dataframe penguins_clean, which has no NA values in the body_mass_g column. You need to filter out rows that have NAs in the column bill_length_mm:

-

Now, subset penguins_clean to each of the three species and compute their respective mean value of body_mass_g. Because you already got rid of NAs in body_mass_g, you can just use mean(x) without the extra option. How do they compare?

-

Finally, make a box plot of species (x-axis) vs. body_mass_g (y-axis) via penguins_clean dataframe. I’ll get you started…

-

#ggplot(penguins_clean) + aes(x = , y = ) + geom_boxplot()

Continue building programming fundamentals: how to make use of complex data structures, use custom functions built by other R users, and creating your own functions. How to iterate repeated tasks that scales naturally.

+

Continue building programming fundamentals: How to use complex data structures, use and create custom functions, and how to iterate repeated tasks

Continue exploration of data science fundamentals: how to clean messy data to a Tidy form for analysis.

-

Outcome: Conduct a full analysis in the data science workflow (minus model).

+

At the end of the course, you will be able to: conduct a full analysis in the data science workflow (minus model).

@@ -482,7 +470,7 @@

2.7 Lists

l1$score

## [1] 2.3 5.9

Therefore, l1$score is the same as l1[[4]] and is the same as l1[["score"]].

-

A dataframe is just a named list of vectors of same length with attributes of (column) names and row.names.

+

A dataframe is just a named list of vectors of same length with additional attributes of (column) names and row.names.

The course is intended for researchers who want to continue learning the fundamentals of R programming and how to deal with messy datasets. The audience should know how to subset dataframes and vectors and conduct basic analysis, and/or have taken our Intro to R course.

+

+

+

1.3 Offerings

+

This course is taught on a regular basis at Fred Hutch Cancer Center through the Data Science Lab. Announcements of course offering can be found here. If you wish to follow the course content asynchronously, you may access the course content on this website and exercises and solutions on Posit Cloud. The Posit Cloud compute space can be copied to your own workspace for personal use, or you can access the exercises and solutions on GitHub.

diff --git a/docs/no_toc/index.md b/docs/no_toc/index.md

index e427fbe..0aae292 100644

--- a/docs/no_toc/index.md

+++ b/docs/no_toc/index.md

@@ -1,6 +1,6 @@

---

title: "Intermediate R, Season 3"

-date: "March, 2024"

+date: "May, 2024"

site: bookdown::bookdown_site

documentclass: book

bibliography: [book.bib]

@@ -15,11 +15,14 @@ output:

# About this Course

-## Curriculum

+## Curriculum

-The course continues building programming fundamentals in R programming and data analysis. You will learn how to make use of complex data structures, use custom functions built by other R users, creating your own functions, and how to iterate repeated tasks that scales naturally. You will also learn how to clean messy data to a Tidy form for analysis, and conduct an end-to-end data science workflow.

+The course continues building programming fundamentals in R programming and data analysis. You will learn how to make use of complex data structures, use custom functions built by other R users, creating your own functions, and how to iterate repeated tasks that scales naturally. You will also learn how to clean messy data to a Tidy form for analysis, and conduct an end-to-end data science workflow.

-## Target Audience

+## Target Audience

-The course is intended for researchers who want to continue learning the fundamentals of R programming and how to deal with messy datasets. The audience should know how to subset dataframes and vectors and conduct basic analysis, and/or have taken our [Intro to R course](https://github.com/fhdsl/Intro_to_R).

+The course is intended for researchers who want to continue learning the fundamentals of R programming and how to deal with messy datasets. The audience should know how to subset dataframes and vectors and conduct basic analysis, and/or have taken our [Intro to R course](https://github.com/fhdsl/Intro_to_R).

+## Offerings

+

+This course is taught on a regular basis at Fred Hutch Cancer Center through the Data Science Lab. Announcements of course offering can be found [here](https://hutchdatascience.org/training/). If you wish to follow the course content asynchronously, you may access the course content on this website and [exercises and solutions on Posit Cloud](https://posit.cloud/content/8236252). The Posit Cloud compute space can be copied to your own workspace for personal use, or you can access the [exercises and solutions on GitHub](https://github.com/fhdsl/Intermediate_R_Exercises).

diff --git a/docs/no_toc/repeating-tasks.html b/docs/no_toc/iteration.html

similarity index 51%

rename from docs/no_toc/repeating-tasks.html

rename to docs/no_toc/iteration.html

index b0afc8b..8294898 100644

--- a/docs/no_toc/repeating-tasks.html

+++ b/docs/no_toc/iteration.html

@@ -4,11 +4,11 @@

- Chapter 10 Repeating tasks | Intermediate R, Season 3

-

+ Chapter 6 Iteration | Intermediate R, Season 3

+

-

+

@@ -16,7 +16,7 @@

-

+

@@ -29,8 +29,8 @@

-

-

+

+

@@ -138,6 +138,7 @@

Suppose that you want to repeat a chunk of code many times, but changing one variable’s value each time you do it. This could be modifying each element of a vector with the same operation, or analyzing a dataframe with different parameters.

There are three common strategies to go about this:

@@ -256,106 +244,111 @@

Chapter 10 Repeating tasks

Use a for loop to repeat the chunk of code, and let it loop over the changing variable’s value. This is popular for many programming languages, but the R programming culture encourages a functional way instead.

Functionals allow you to take a function that solves the problem for a single input and generalize it to handle any number of inputs. This is very popular in R programming culture.

-

-

10.1 For loops

+

+

6.1 For loops

A for loop repeats a chunk of code many times, once for each element of an input vector.

for (my_element in my_vector) {

chunk of code

}

Most often, the “chunk of code” will make use of my_element.

-

-

10.1.0.1 We can loop through elements of a vector and print it out.

6.1.0.1 We can loop through the indicies of a vector

+

The function seq_along() creates the indicies of a vector. It has almost the same properties as 1:length(my_vector), but avoids issues when the vector length is 0.

6.1.0.2 Alternatively, we can loop through the elements of a vector

+

for(vec_i in my_vector) {

+print(vec_i)

+}

## [1] 1

## [1] 3

## [1] 5

## [1] 7

+

+

6.1.0.3 Another example via indicies

+

result =rep(NA, length(my_vector))

+for(i inseq_along(my_vector)) {

+ result[i] =log(my_vector[i])

+}

+

-

-

10.2 Functionals

+

+

6.2 Functionals

A functional is a function that takes in a data structure and function as inputs and applies the function on the data structure, element by element. It maps your input data structure to an output data structure based on the function. It encourages the usage of modular functions in your code.

Or,

We will use the purrr package in tidyverse to use functionals.

-

map() takes in a vector or a list, and then applies the function on each element of it. The output is always a list. (You see the output twice, because it prints out element by element, and then returns a list.)

-

map(my_vector, print)

-

## [1] 1

-## [1] 3

-## [1] 5

-## [1] 7

+

map() takes in a vector or a list, and then applies the function on each element of it. The output is always a list.

Lists are useful if what you are using it on requires a flexible data structure.

To be more specific about the output type, you can do this via the map_* function, where * specifies the output type: map_lgl(), map_chr(), and map_dbl() functions return vectors of logical values, strings, or numbers respectively.

For example, to make sure your output is a double (numeric):

-

map_dbl(my_vector, log)

+

map_dbl(my_vector, log)

## [1] 0.000000 1.098612 1.609438 1.945910

-

All of these are toy examples that gets us familiar with the syntax, but we already have built-in functions to solve these problems. Let’s see some real-life case studies.

+

All of these are toy examples that gets us familiar with the syntax, but we already have built-in functions to solve these problems, such as log(my_vector). Let’s see some real-life case studies.

-

-

10.3 Case studies

-

-

10.3.1 1. Loading in multiple dataframes from files for analysis

+

+

6.3 Case studies

+

+

6.3.1 1. Loading in multiple files.

Suppose that we want to load in a few dataframes, and store them in a list of dataframes for analysis downstream.

We start with the filepaths we want to load in as dataframes.

What variable we need to loop through: c("Adelie", "Chinstrap", "Gentoo")

The repeated task as a function: a custom function that takes in a specie of interest. The function filters the rows of penguins to the species of interest, and compute the mean of bill_length_mm.

The looping mechanism, and its output: map_dbl() outputs (double) numeric vectors.

and you are interested to look at the mean of each column. It is very helpful to interpret the dataframe penguins_numeric as a list, iterating through each column as an element of a list.

Let’s practice writing out one iteration:

-

mean(penguins_numeric[[1]], na.rm =TRUE)

+

mean(penguins_numeric[[1]], na.rm =TRUE)

## [1] 43.92193

-

-

10.3.3.1 To do this functionally, we think about:

+

+

6.3.3.1 To do this functionally, we think about:

What variable we need to loop through: the list penguins_numeric

The repeated task as a function: mean() with the argument na.rm = TRUE.

The looping mechanism, and its output: map_dbl() outputs (double) numeric vectors.

-

-

-

-

-

-

-

-

-

-

-

-

-

diff --git a/docs/no_toc/search_index.json b/docs/no_toc/search_index.json

index c0fde75..46b5cf8 100644

--- a/docs/no_toc/search_index.json

+++ b/docs/no_toc/search_index.json

@@ -1 +1 @@