Angel Xuan Chang

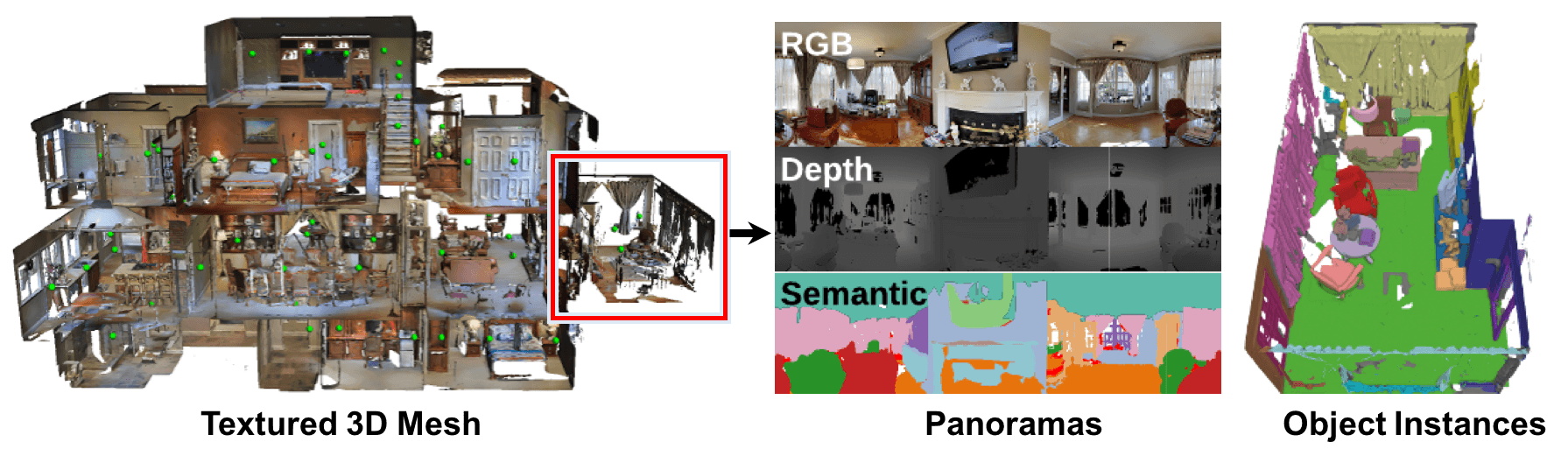

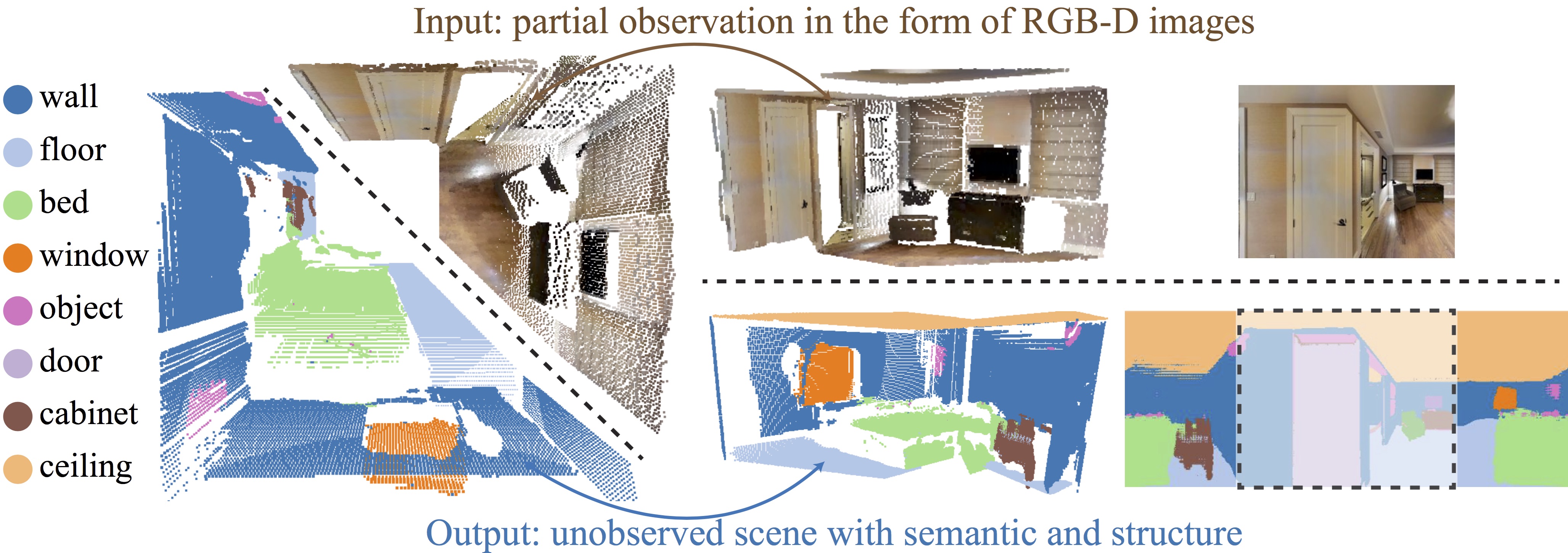

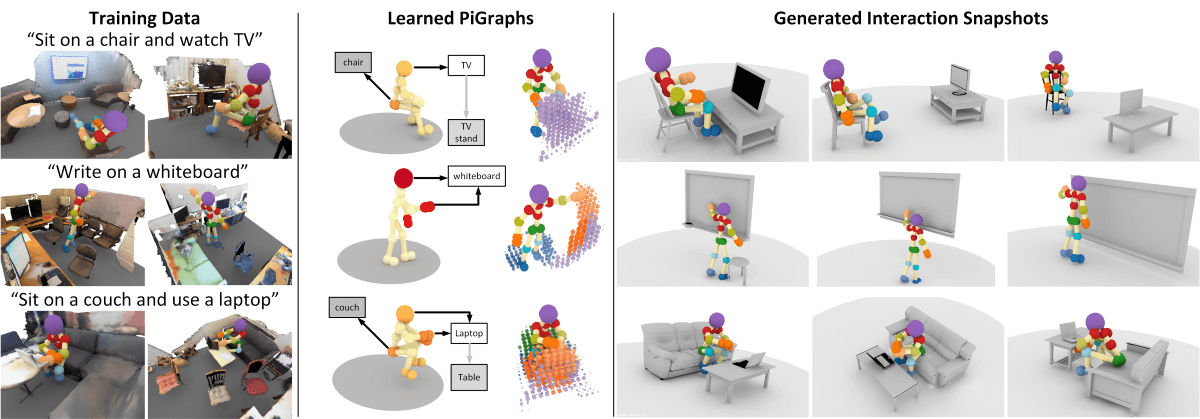

I am an Associate Professor at Simon Fraser University.

Prior to this, I was a visiting research scientist at Facebook AI Research and a research scientist at Eloquent Labs working on dialogue. I received my Ph.D. in Computer Science from Stanford, where I was part of the Natural Language Processing Group and advised by Chris Manning.

My research focuses on connecting language to 3D representations of shapes and scenes and grounding of language for embodied agents in indoor environments. I have worked on methods for synthesizing 3D scenes and shapes from natural language, and various datasets for 3D scene understanding. In general, I am interested in the semantics of shapes and scenes, the representation and acquisition of common sense knowledge, and reasoning using probabilistic models.

Some of my other interests include drawing and dance.


Associate Professor

School of Computing Science

Simon Fraser University

3DLG | GrUVi | SFU NatLang

SFU AI/ML | VINCI

Canada CIFAR AI Chair (Amii)

TUM-IAS Hans Fischer Fellow (2018-2022)

Google Scholar


Contact Details

Mailing Address

Angel Xuan Chang

School of Computing Science

ASB 9971, 8888 University Drive

Simon Fraser University

Burnaby, BC V5A 1S6, Canada

Office

Campus: Burnaby Campus

Building: TASC1

Room: 8031