Enabling campus researchers to share computational and data resources with external collaborators is a powerful multiplier in advancing science. Sharing spare capacity, even for short durations, gives an institutional HPC resource a cost-efficient means of participating in a larger cyber ecosystem. This document shows how to integrate your HPC cluster with the Open Science Grid (OSG) to support collaborative, multi-institutional science. The only requirements are that your cluster provides SSH access to a single OSG staff member, that your job submission and worker nodes have outbound IP connectivity, that the operating system is CentOS/RHEL 6.x, 7.x or similar, and that a common batch scheduler is used (e.g. Slurm, PBS, HTCondor).

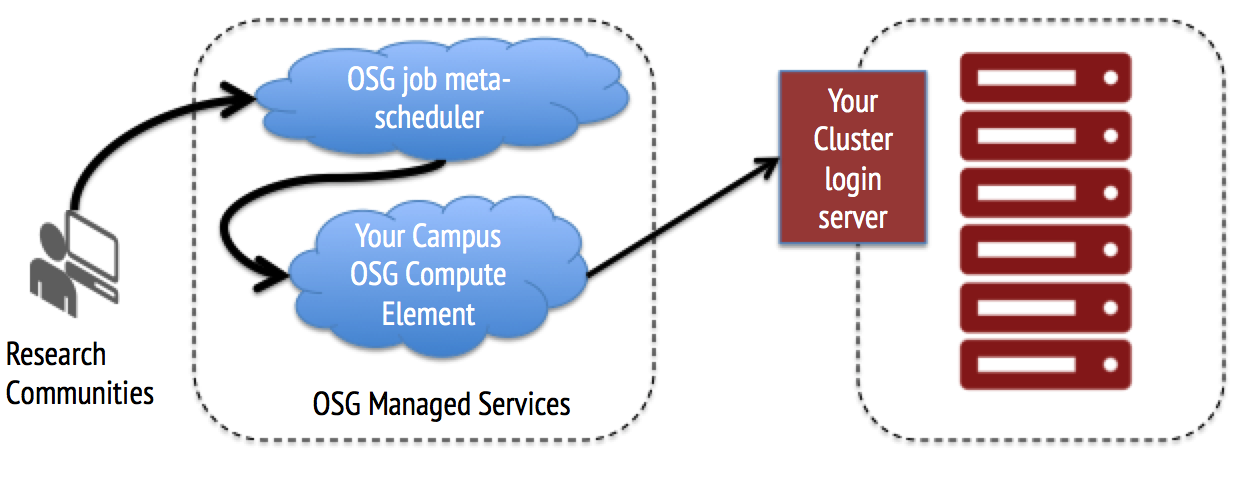

OSG offers a managed service option to connect a campus HPC/HTC cluster to the Open Science Grid. The OSG team will host and operate an HTCondor compute element which routes user jobs to your cluster, configured for science communities that you choose to support.

Here are the basic system requirements:

- Cluster operating system must be RHEL 6.x, 7.x or CentOS 6.x, 7.x or Scientific Linux 6.x, 7.x

- A standard Unix account on your system's login server. The OSG service will use this account and submit to your batch queue in a manner you define.

- SSH access to this account via public SSH keys.

- A supported batch system (Slurm, HTCondor, PBS, LSF, SGE)

- Outbound network connectivity from the compute nodes (can be behind NAT)
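
Before the consultation call, it can help to confirm a couple of these requirements from a login or compute node. The following is a minimal sketch, not an OSG tool: it assumes that each batch system exposes its usual submit command on `PATH`, and the function names and the target host in the usage note are hypothetical. PBS and SGE both ship a `qsub` command, so the check cannot tell them apart.

```python
import shutil
import socket

# Submit commands commonly shipped by each supported batch system.
# PBS and SGE both provide `qsub`, so this check cannot distinguish them.
SUBMIT_COMMANDS = {
    "Slurm": "sbatch",
    "HTCondor": "condor_submit",
    "PBS": "qsub",
    "LSF": "bsub",
    "SGE": "qsub",
}


def detect_batch_systems(which=shutil.which):
    """Return the batch systems whose submit command is found on PATH."""
    return sorted(name for name, cmd in SUBMIT_COMMANDS.items() if which(cmd))


def has_outbound_connectivity(host, port=443, timeout=5.0,
                              connect=socket.create_connection):
    """Return True if a TCP connection to (host, port) can be opened."""
    try:
        conn = connect((host, port), timeout)
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False
    conn.close()
    return True
```

Calling `detect_batch_systems()` on a login node and `has_outbound_connectivity("example.org")` on a compute node (substituting any external host your site permits) gives a quick first indication; a node behind NAT should still pass the connectivity check.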

The setup and installation process consists of the following steps:

- Consultation call to collect system details. We'll need the following information:

    - Cluster name

    - IP address for cluster

    - Technical contact information for cluster

    - Technical information on cluster (batch system, OS, location of scratch space, number of slots available for OSG)

    - Job submission details (queue to use, default resources for a job, etc.)

- Create Unix login account for the OSG service

- Install public SSH keys for the service

- We configure the OSG service with your system details

- We validate operation with a set of test jobs

- We configure central OSG services to schedule jobs for science communities you support

- (Optional): we can assist you in installing and setting up the Squid and OASIS software on your cluster to support application software repositories
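
The key installation step above can be sketched as follows. This is an illustrative helper, not part of any OSG package: the function name is hypothetical, and it assumes the script runs as the service account (or as a user allowed to write to that account's home directory). The actual public keys are supplied by OSG staff.

```python
import os
from pathlib import Path


def install_public_key(home, public_key):
    """Append public_key to <home>/.ssh/authorized_keys if not already present.

    Returns True if the key was added, False if it was already there.
    """
    key = public_key.strip()
    ssh_dir = Path(home) / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    # With StrictModes (the sshd default), overly permissive directories
    # or key files cause the keys to be ignored.
    os.chmod(ssh_dir, 0o700)

    auth_file = ssh_dir / "authorized_keys"
    text = auth_file.read_text() if auth_file.exists() else ""
    if key in (line.strip() for line in text.splitlines()):
        return False

    with auth_file.open("a") as fh:
        if text and not text.endswith("\n"):
            fh.write("\n")  # avoid gluing the new key onto the last line
        fh.write(key + "\n")
    os.chmod(auth_file, 0o600)
    return True
```

The function is idempotent, so it is safe to re-run when a key is re-sent; a call such as `install_public_key(Path.home(), key_text)` from the service account is the intended use.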

OSG takes multiple precautions to maintain security and prevent unauthorized usage of resources:

- SSH key access to the OSG system is restricted to the OSG staff who maintain it

- Users are carefully vetted before they are allowed to submit jobs to OSG

- Jobs running through OSG can be traced back to the user that submitted them

- Job submission can quickly be disabled if needed

- In an emergency, OSG staff can be reached by email, through the help desk, or by phone (317-278-9699)

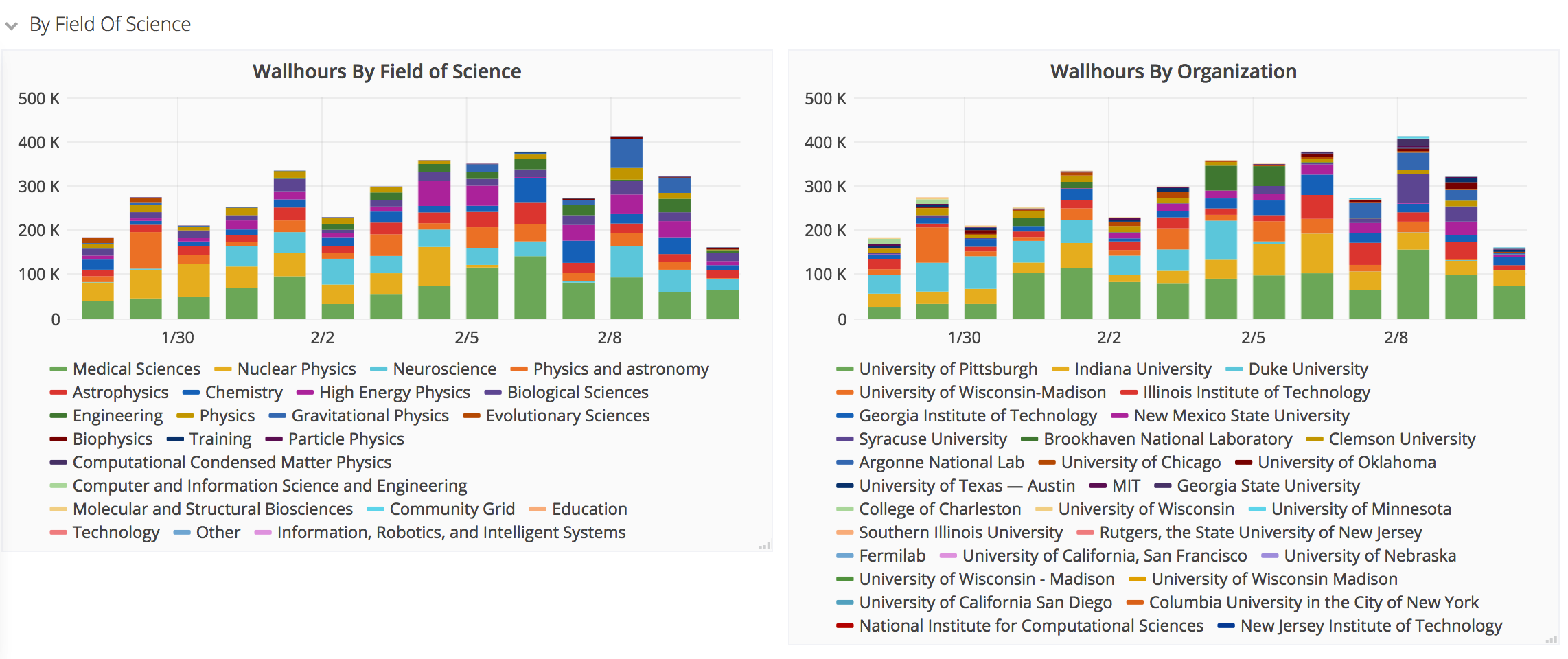

The OSG provides monitoring to view which communities are accessing your site, their fields of science, home institution. Below is an example of the monitoring views that will be available for your cluster.

## **Optional**: Providing Access to Application Software Using OASIS

Many OSG communities use software modules provided by their collaborations or by the OSG User Support team. You do not need to install any application software on your cluster. OSG uses a FUSE-based distributed software repository system called OASIS. To support these communities, the following additional components are needed:

- A (cluster-wide) Squid service with a minimum of 50 GB of cache space.

- Local scratch area on compute nodes for running jobs: a typical recommendation is 10 GB per job

- On each compute node, installation of the OASIS software package and associated FUSE kernel modules

- Local scratch space of at least 10 GB (preferably 22 GB) on compute nodes for caching OASIS data.
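
The space figures above are easy to check on a node before installing anything. The sketch below classifies the free space on a filesystem against the 10 GB minimum and 22 GB preferred values for the OASIS cache; the function names are hypothetical, and the choice of decimal gigabytes is an assumption, since the text does not say whether GB or GiB is meant.

```python
import shutil

GB = 10**9  # decimal gigabytes (assumption); use 2**30 for GiB instead


def classify_cache_space(free_bytes, minimum_gb=10, preferred_gb=22):
    """Classify free space as 'insufficient', 'minimum', or 'preferred'."""
    free_gb = free_bytes / GB
    if free_gb < minimum_gb:
        return "insufficient"
    if free_gb < preferred_gb:
        return "minimum"
    return "preferred"


def check_path(path, **thresholds):
    """Classify the free space on the filesystem that holds path."""
    return classify_cache_space(shutil.disk_usage(path).free, **thresholds)
```

The same helper can be pointed at the Squid cache location by passing `minimum_gb=50, preferred_gb=50`. It reports free space on the filesystem at the time of the call, which is not the same as space reserved for a cache.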

OSG has a yum repository with RPMs of the OSG Frontier Squid service. The RPMs include configuration files that allow Squid to access certified OSG software repositories. Instructions on setting up Frontier Squid are available here.

OSG also provides RPMs for the OASIS software in its yum repositories. Instructions on installing OASIS and making OASIS-based software available on your compute nodes are available here.

## Getting Started

Drop us a note at [email protected] if this is of interest to you. We will contact you to set up a consultation.