+

+ +

+ ## Materials

@@ -8,14 +8,14 @@ This is the list of mechanical materials we used while developing the robot:

| Name | Use | Product Image |

| ---- | --- | ------------- |

-| 3mm MDF | Made up earlier parts of the robot |

## Materials

@@ -8,14 +8,14 @@ This is the list of mechanical materials we used while developing the robot:

| Name | Use | Product Image |

| ---- | --- | ------------- |

-| 3mm MDF | Made up earlier parts of the robot |  |

-| ABS filament | Make up most CAD parts of the robot |

|

-| ABS filament | Make up most CAD parts of the robot |  |

-| PLA filament | Make up few CAD parts of the robot |

|

-| PLA filament | Make up few CAD parts of the robot |  |

-| Male-female nylon spacers | Connecting separated robot pieces |

|

-| Male-female nylon spacers | Connecting separated robot pieces |  |

-| M3 6mm round-head nylon screws | Fixing in place the robot pieces |

|

-| M3 6mm round-head nylon screws | Fixing in place the robot pieces |  |

-| M3 10mm flat-head steel screws | Fixing in place the robot pieces |

|

-| M3 10mm flat-head steel screws | Fixing in place the robot pieces |  |

-| M3 nylon nuts | Fixing in place the robot pieces |

|

-| M3 nylon nuts | Fixing in place the robot pieces |  |

-| M3 steel nuts | Fixing in place the robot pieces |

|

-| M3 steel nuts | Fixing in place the robot pieces |  |

+| 3mm MDF | Made up earlier parts of the robot |

|

+| 3mm MDF | Made up earlier parts of the robot |  |

+| ABS filament | Make up most CAD parts of the robot |

|

+| ABS filament | Make up most CAD parts of the robot |  |

+| PLA filament | Make up few CAD parts of the robot |

|

+| PLA filament | Make up few CAD parts of the robot |  |

+| Male-female nylon spacers | Connecting separated robot pieces |

|

+| Male-female nylon spacers | Connecting separated robot pieces |  |

+| M3 6mm round-head nylon screws | Fixing in place the robot pieces |

|

+| M3 6mm round-head nylon screws | Fixing in place the robot pieces |  |

+| M3 10mm flat-head steel screws | Fixing in place the robot pieces |

|

+| M3 10mm flat-head steel screws | Fixing in place the robot pieces |  |

+| M3 nylon nuts | Fixing in place the robot pieces |

|

+| M3 nylon nuts | Fixing in place the robot pieces |  |

+| M3 steel nuts | Fixing in place the robot pieces |

|

+| M3 steel nuts | Fixing in place the robot pieces |  |

- Piece materials

@@ -35,10 +35,10 @@ This is the list of tools we used to manufacture the robot:

| Name | Use | Product Image |

| ---- | --- | ------------- |

-| Fusion360 | CAD program |

|

- Piece materials

@@ -35,10 +35,10 @@ This is the list of tools we used to manufacture the robot:

| Name | Use | Product Image |

| ---- | --- | ------------- |

-| Fusion360 | CAD program |  |

-| Laser cutter | Cutting MDF |

|

-| Laser cutter | Cutting MDF |  |

-| Ender 3 V2 | Printing PLA |

|

-| Ender 3 V2 | Printing PLA |  |

-| Artillery Sidewinder X2 | Printing ABS |

|

-| Artillery Sidewinder X2 | Printing ABS |  |

+| Fusion360 | CAD program |

|

+| Fusion360 | CAD program |  |

+| Laser cutter | Cutting MDF |

|

+| Laser cutter | Cutting MDF |  |

+| Ender 3 V2 | Printing PLA |

|

+| Ender 3 V2 | Printing PLA |  |

+| Artillery Sidewinder X2 | Printing ABS |

|

+| Artillery Sidewinder X2 | Printing ABS |  |

- Software

@@ -56,4 +56,4 @@ We would definetely recommend using the Zortrax material so that the

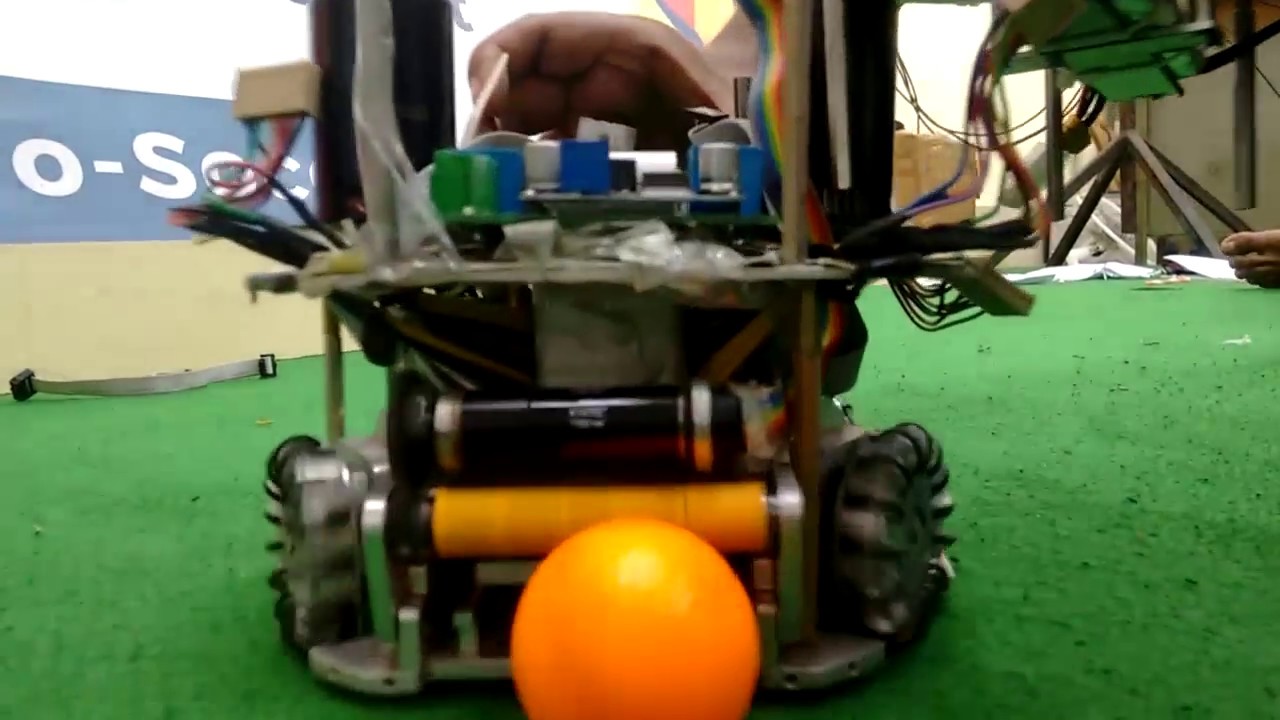

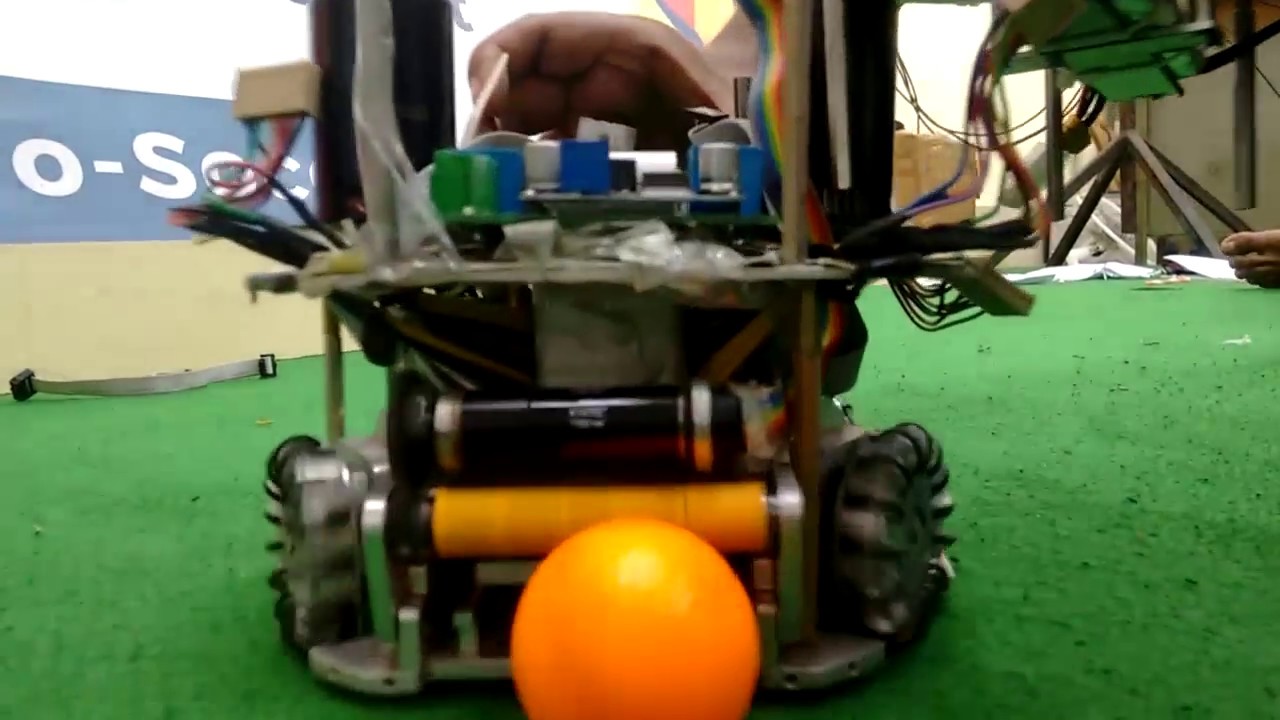

These are the robot's different versions as we progressed in its design:

-

|

- Software

@@ -56,4 +56,4 @@ We would definetely recommend using the Zortrax material so that the

These are the robot's different versions as we progressed in its design:

- +

diff --git a/docs/soccer/Mechanics/1.Robot_Lower_Design.md b/docs/SoccerLightweight/2023/Mechanics/1.Robot_Lower_Design.md

similarity index 87%

rename from docs/soccer/Mechanics/1.Robot_Lower_Design.md

rename to docs/SoccerLightweight/2023/Mechanics/1.Robot_Lower_Design.md

index 3a1cd0c..3c9e288 100644

--- a/docs/soccer/Mechanics/1.Robot_Lower_Design.md

+++ b/docs/SoccerLightweight/2023/Mechanics/1.Robot_Lower_Design.md

@@ -1,6 +1,6 @@

# Lower Design

-

+

## Base

@@ -8,17 +8,17 @@

We started by considering a base that would let us maximize the area permitted by RCJ rules. We didn't use the exact maximum dimensions because the rules state that the robot needs to fit *smoothly* into a cylinder of this diameter, so we had to leave a bit of leeway.

-

+

For a long time we also considered using bases to support the PCBs. The idea was to mount them on a rigid piece of the robot and possibly add padding to dampen vibrations in the PCBs. In the final implementation, however, we did not use them: we never added the padding, and connecting the PCBs on their own, while less rigid, still held them correctly in place.

-

+

- Implementation

We ended up following the main ideas of our initial considerations, with some slight adjustments.

-

+

The main base has holes to support the following modules and pieces: the main PCB, the line PCBs, the voltage regulator, the IR ring, the dribbler, the ultrasonic sensor supports, the motor supports, and zip ties.

diff --git a/docs/soccer/Mechanics/2.Robot_Upper_Design.md b/docs/SoccerLightweight/2023/Mechanics/2.Robot_Upper_Design.md

similarity index 66%

rename from docs/soccer/Mechanics/2.Robot_Upper_Design.md

rename to docs/SoccerLightweight/2023/Mechanics/2.Robot_Upper_Design.md

index b8faeba..9f039fb 100644

--- a/docs/soccer/Mechanics/2.Robot_Upper_Design.md

+++ b/docs/SoccerLightweight/2023/Mechanics/2.Robot_Upper_Design.md

@@ -1,6 +1,6 @@

# Upper Design

-

+

## IR Ring Cover

diff --git a/docs/soccer/Programming/General.md b/docs/SoccerLightweight/2023/Programming/General.md

similarity index 92%

rename from docs/soccer/Programming/General.md

rename to docs/SoccerLightweight/2023/Programming/General.md

index 28c3bd3..b04b0a0 100644

--- a/docs/soccer/Programming/General.md

+++ b/docs/SoccerLightweight/2023/Programming/General.md

@@ -18,10 +18,10 @@ It is also important to mention that the structure of the code worked as a state

### Attacking Robot

The main objective of this robot was to gain possession of the ball with the dribbler as fast as possible and then go towards the goal using vision. Therefore, the algorithm used is the following:

-

+

### Defending Robot

On the other hand, the defending robot should always stay near the goal and go towards the ball only if it is within a 20 cm radius. The algorithm for this robot is shown in the following image:

-

+

diff --git a/docs/soccer/Programming/IR_Detection.md b/docs/SoccerLightweight/2023/Programming/IR_Detection.md

similarity index 100%

rename from docs/soccer/Programming/IR_Detection.md

rename to docs/SoccerLightweight/2023/Programming/IR_Detection.md

diff --git a/docs/soccer/Programming/Line_Detection.md b/docs/SoccerLightweight/2023/Programming/Line_Detection.md

similarity index 90%

rename from docs/soccer/Programming/Line_Detection.md

rename to docs/SoccerLightweight/2023/Programming/Line_Detection.md

index 57f0328..d7c1ec7 100644

--- a/docs/soccer/Programming/Line_Detection.md

+++ b/docs/SoccerLightweight/2023/Programming/Line_Detection.md

@@ -4,13 +4,13 @@ To obtain the phototransistor values from the multiplexors, a function was creat

## Attacking Robot

Since this robot would go in all directions to search for the ball and score, it should never cross any white lines. Therefore, the phototransistor PCBs were used to estimate the angle at which the robot was touching the line, so that it could then move in the opposite direction. This was complemented with ultrasonic sensors to avoid crashing into the walls, other robots and the ball itself (to avoid scoring on our own goal).

-

+

The calibration for this robot was automatic and done when the robot started. Here, the robot would capture about 100 values for each sensor when standing on green to get the average measurement. Then, to check if there was a line, the robot would compare the current value with the average value and if the difference was greater than a threshold, then a line was detected and it was possible to see which phototransistor had seen it.
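The calibration described above can be sketched as follows. This is a minimal, host-compilable illustration and not the team's code: the function names `calibrateBaseline` and `isOnLine` are hypothetical, and using the absolute difference is an assumption, since the text only says the difference must exceed a threshold.

```cpp
#include <cstdlib>
#include <vector>

// Hypothetical helper: average of the samples captured while the robot
// stands on green (about 100 per sensor in the text). Returns 0 if empty.
int calibrateBaseline(const std::vector<int>& greenSamples) {
    if (greenSamples.empty()) return 0;
    long sum = 0;
    for (int s : greenSamples) sum += s;
    return static_cast<int>(sum / static_cast<long>(greenSamples.size()));
}

// Hypothetical helper: a line is reported when the current reading departs
// from the green baseline by more than the threshold (absolute difference
// is an assumption).
bool isOnLine(int reading, int baseline, int threshold) {
    return std::abs(reading - baseline) > threshold;
}
```

Running the check once per phototransistor is what makes it possible to tell which sensor saw the line.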

## Defending Robot

The defending robot worked basically as a line follower, using two phototransistors (one on each side) to move horizontally. Additionally, it used the front phototransistor PCB to check whether it had gone too far back, in which case it would move forward. Three ultrasonic sensors were also used to avoid crashing into the walls and the ball.

-

+

For this robot, it was important to check the two phototransistors for line following before the match started, since the robot would move proportionally according to the sensors, looking to stay in the average value between white and green. Therefore, the calibration was done manually, by reading and then setting the white and green values for each sensor. The front sensor was calibrated automatically using the method explained in the attacking robot.

\ No newline at end of file
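As a rough illustration of the proportional line following described for the defending robot, the sketch below computes the target as the midpoint between the manually calibrated white and green values and returns a correction proportional to the deviation from it. The names `targetValue` and `lineFollowCorrection` and the gain `kP` are hypothetical and do not come from the original code.

```cpp
// Hypothetical helper: midpoint between the calibrated white and green readings.
double targetValue(int white, int green) {
    return (white + green) / 2.0;
}

// Hypothetical helper: proportional correction. Zero when the sensor reads
// exactly the midpoint; the sign tells which side of the line edge it is on.
double lineFollowCorrection(int reading, int white, int green, double kP) {
    return kP * (reading - targetValue(white, green));
}
```

With white = 800, green = 200 and kP = 0.5, a reading of 600 gives a correction of 50, and a reading of 500 gives no correction.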

diff --git a/docs/soccer/Programming/Movement.md b/docs/SoccerLightweight/2023/Programming/Movement.md

similarity index 94%

rename from docs/soccer/Programming/Movement.md

rename to docs/SoccerLightweight/2023/Programming/Movement.md

index 42e60d4..3de0ac2 100644

--- a/docs/soccer/Programming/Movement.md

+++ b/docs/SoccerLightweight/2023/Programming/Movement.md

@@ -62,7 +62,7 @@ void Motors::moveToAngle(int degree, int speed, int error) {

To take advantage of the HP motors, the robot should ideally go as fast as possible. However, after a lot of testing, we found that the robot was not able to fully control the ball at high speeds, as it would usually push the ball out of bounds instead of catching it with the dribbler. Therefore, the speed was regulated depending on the distance to the ball (measured with the IR ring) using the following function:

-

+

$v(r) = 1.087 + \frac{1}{r - 11.5}$, where $r \in [0, 10]$ is the distance to the ball
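The function above is easy to evaluate directly. The sketch below is a host-compilable illustration: the name `speedFactor` is hypothetical, and clamping `r` to the stated range is an added safeguard (an assumption) that keeps the input away from the pole at r = 11.5.

```cpp
#include <algorithm>

// Hypothetical helper: evaluates v(r) = 1.087 + 1 / (r - 11.5) after
// clamping r to [0, 10], the range given in the text.
double speedFactor(double r) {
    r = std::max(0.0, std::min(r, 10.0));
    return 1.087 + 1.0 / (r - 11.5);
}
```

As written, the formula gives a factor of about 1.00 at r = 0 and about 0.42 at r = 10, decreasing monotonically in between.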

@@ -73,6 +73,6 @@ This equation was experimentally established with the goal of keeping speed at m

The idea for this robot was to keep it on the goal line, always trying to keep the ball in front of it to block any goal attempts. Therefore, speed was regulated according to the angle and x-component of the ball. This meant that if the ball was in front of the robot, it didn't have to move. However, if the ball was far to the right or left, speed had to be increased proportionally to the x-component of the ball, as shown in the following image:

-

+

diff --git a/docs/soccer/Programming/Vision.md b/docs/SoccerLightweight/2023/Programming/Vision.md

similarity index 100%

rename from docs/soccer/Programming/Vision.md

rename to docs/SoccerLightweight/2023/Programming/Vision.md

diff --git a/docs/SoccerLightweight/2023/index.md b/docs/SoccerLightweight/2023/index.md

new file mode 100644

index 0000000..380b241

--- /dev/null

+++ b/docs/SoccerLightweight/2023/index.md

@@ -0,0 +1,35 @@

+# @SoccerLightweight - 2023

+

+The main developments during 2023 with respect to previous years are the following:

+

+## Sections

+

+### Mechanics

+

+- [General](Mechanics/0.General.md)

+

+- [Robot Lower Design](Mechanics/1.Robot_Lower_Design.md)

+

+- [Robot Upper Design](Mechanics/2.Robot_Upper_Design.md)

+

+### Electronics

+

+- [General](Electronics/1 General.md)

+

+- [Power Supply](Electronics/2 Power Supply.md)

+

+- [Printed Circuit Boards (PCB)](Electronics/3 Printed Circuit Boards (PCB).md)

+

+- [Dribbler Implementation](Electronics/4 Dribbler Implementation.md)

+

+### Programming

+

+- [General](Programming/General.md)

+

+- [IR Detection](Programming/IR_Detection.md)

+

+- [Line Detection](Programming/Line_Detection.md)

+

+- [Movement](Programming/Movement.md)

+

+- [Vision](Programming/Vision.md)

diff --git a/docs/SoccerLightweight/2024/Electronics/1 General.md b/docs/SoccerLightweight/2024/Electronics/1 General.md

new file mode 100644

index 0000000..2e47e6f

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/1 General.md

@@ -0,0 +1,5 @@

+# General

+

+For the TMR 2024, these are some general ideas:

+

+ For the power supply we used two battery packs: one 11.1 V, 2250 mAh LiPo battery for the motors, and two 3.7 V LiPo batteries connected in series (7.4 V, 2500 mAh) for the logic, stepped down to 5 V by a voltage regulator. These batteries were chosen for their high capacity and because they lasted well in competition. The PCBs, designed with EasyEDA, included a main board with the microcontroller and sensors, and boards for the TEPT5700 phototransistors, which were connected directly to the microcontroller for line detection. The IR ring, with TSSP58038 receivers and an ATmega328P to process the infrared signals, was reused from the previous year. The Arduino Mega Pro microcontroller was chosen for its ease of use and its large number of pins. For movement, 2200 RPM HP motors were used with TB6612FNG drivers, configured to handle up to 2.4 A continuous and 6 A peak to meet the motors' high current demand.

diff --git a/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md b/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md

new file mode 100644

index 0000000..78f0898

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md

@@ -0,0 +1,13 @@

+# Power Supply

+

+For power, two batteries were used.

+

+## Movement Power

+For the motors, an 11.1 V, 2250 mAh battery was used because the motors require 12 V; we chose a high capacity so that we would not have to worry about how long the battery would last.

+

+## Logic Power

+For logic, two 3.7 V LiPo batteries were connected in series to obtain 7.4 V; these batteries have a capacity of 2500 mAh.

+

+

+

+We chose this type of battery because it is well suited to electronics projects, and its battery life held up well during the competition.

diff --git a/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md b/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md

new file mode 100644

index 0000000..1716775

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md

@@ -0,0 +1,12 @@

+# PCBs Designs

+

+For the design of the PCBs, we used EasyEDA. Three types of boards were designed with it.

+

+## Main Board

+The main board included the microcontroller, as well as the sensors that would be used; additional digital/analog pins were added in case they were needed.

+

+## Phototransistors Board

+The other boards were made for the phototransistors, which sit on the underside of the robot for line detection. We considered using a multiplexer, but to reduce complexity the phototransistors were connected directly to the analog pins of the microcontroller.

+

+## IR Ring Board

+We reused last year's IR ring because of its complexity and the short time we had to develop something different. Integrating it was straightforward on the electronics side because it connects to the microcontroller's serial port.

diff --git a/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md b/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md

new file mode 100644

index 0000000..137073d

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md

@@ -0,0 +1,13 @@

+# Electronic Components

+

+## Microcontroller

+For the microcontroller, the Arduino Mega Pro was chosen for its ease of use in both electronics and programming. It has 54 digital pins (15 of which support PWM output) and 16 analog pins, among other features.

+

+## Sensors

+For the orientation of the robot, we used a BNO055 sensor, which offers good performance and accuracy and connects to the microcontroller via I2C.

+For line detection, TEPT5700 phototransistors were used because their spectral range does not include infrared light, which is crucial so that the emissions from the IR ball do not affect the line readings.

+

+Digital TSSP58038 IR receivers, mounted on a custom PCB, were used to detect the IR signals emitted by the ball. The IR ring is made up of 12 IR receivers, and an ATmega328P processes the infrared signals and combines them into a vector.

+

+## Drivers and motors

+For motion, we used 2200 RPM HP motors with TB6612FNG drivers. Because the motors draw a lot of current, we bridged each driver's input and output signals to obtain up to 2.4 A continuous and up to 6 A peak.

diff --git a/docs/SoccerLightweight/2024/Programming/General.md b/docs/SoccerLightweight/2024/Programming/General.md

new file mode 100644

index 0000000..b144e2d

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/General.md

@@ -0,0 +1,34 @@

+# General Overview & Strategy

+

+"Soccer Lightweight 2024" is an autonomous robot competition featuring 2 vs 2 soccer matches. The Soccer Lightweight team combined its knowledge of robotics with its passion for sports to design a robot that plays soccer with agility and precision.

+

+The strategy for the Soccer Lightweight competition was a blend of offensive and defensive tactics, realized with programming techniques and meticulous strategic planning. Core aspects of this strategy include:

+

+## Key Elements of the Strategy

+

+### Role Assignment

+Each robot is assigned a specific role, such as a striker or a goalkeeper. This specialization allows for focused development of skills and tactics suitable for each position.

+

+### Real-Time Vision Processing

+Using the Pixy2 camera, the robots can identify and track the ball and goals in real-time.

+

+### Holonomic Movement

+Implemented through kinematic equations, this allows the robots to move smoothly and rapidly in any direction.

+

+### Line Detection

+Utilizing phototransistors and IR sensors, the robots can detect field boundaries, ensuring they stay within the playing area and avoid penalties.

+

+## Tools and Technologies

+The main tools and software used in the development of the Soccer Lightweight robot include:

+

+- **Arduino**: Used for programming the microcontroller that controls the robot's actions.

+- **Visual Studio Code**: The primary integrated development environment (IDE) for writing and debugging code.

+- **PixyMon**: Used to calibrate the camera and detect color blobs, which is essential for the robot's vision system.

+

+## Abstract

+The Soccer Lightweight robot exemplifies robotics through its integration of advanced motion algorithms and sensory systems. Designed for optimum performance on the soccer field, this robot utilizes real-time vision and sensory integration to accurately identify the ball and goals, enhancing its competitiveness. The engineering prioritizes agility within strict weight constraints, enabling the robot to execute complex maneuvers and strategies effectively during matches.

+

+

+## Algorithm of Attacking and Defending Robot

+

+

diff --git a/docs/SoccerLightweight/2024/Programming/IRDetection.md b/docs/SoccerLightweight/2024/Programming/IRDetection.md

new file mode 100644

index 0000000..3385139

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/IRDetection.md

@@ -0,0 +1,53 @@

+# IR Detection

+

+In the Soccer Lightweight project, precise robot movement and positioning on the field were critical to successful gameplay. Key to this was accurately determining both the angle and the distance to the ball. To achieve this, an IR ring with 12 TSSP-58038 IR receivers was designed.

+

+

+## IR Ring Design and Functionality

+

+The IR ring consists of 12 TSSP-58038 IR receivers arranged in a circular ring. This configuration enables the robot to detect the angle of the ball relative to its position and estimate the distance to the ball. The primary objectives of the IR detection system include:

+

+

+- **Angle Detection**: To determine the direction of the ball.

+- **Distance Measurement**: To estimate how far the ball is from the robot.
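One common way to turn twelve ring readings into an angle and a strength is a vector sum, sketched below. This is an illustration under stated assumptions rather than the firmware running on the IR ring: the receivers are assumed to be evenly spaced every 30 degrees starting at 0, and `BallEstimate` and `estimateBall` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical result type: ball direction in degrees [0, 360) and the
// magnitude of the summed vector (larger means a stronger signal).
struct BallEstimate {
    double angleDeg;
    double strength;
};

// Hypothetical helper: each receiver contributes a vector along its mounting
// angle (assumed 30 degrees apart), scaled by its reading.
BallEstimate estimateBall(const std::array<double, 12>& readings) {
    const double kPi = 3.14159265358979323846;
    double x = 0.0;
    double y = 0.0;
    for (std::size_t i = 0; i < readings.size(); ++i) {
        const double theta = static_cast<double>(i) * 30.0 * kPi / 180.0;
        x += readings[i] * std::cos(theta);
        y += readings[i] * std::sin(theta);
    }
    double angle = std::atan2(y, x) * 180.0 / kPi;
    if (angle < 0.0) angle += 360.0;
    return {angle, std::sqrt(x * x + y * y)};
}
```

Because neighbouring receivers both contribute, the summed vector can point between two receivers, which gives a finer angle than the 30 degree spacing alone.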

+

+## Mathematical Calculations

+

+The IR detection system processes and filters the signals received from the IR sensors to determine the ball's angle and signal strength. Referencing the Yunit team's research from 2017, the calculations can be summarized as follows:

+

+- **Signal Processing**: The IR sensors detect the ball and send angle and strength data to the Arduino.

+- **Filtering**: We apply an exponential moving average (EMA) filter to smooth the data, ensuring stable and accurate readings.

+- **Angle Adjustment**: The raw angle data is adjusted to account for any offsets and converted to a 0-360 degree format for easier interpretation.

+- **Strength Calculation**: The strength of the signal indicates the distance to the ball, with stronger signals meaning the ball is closer.
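The filtering and angle adjustment steps listed above can be sketched as follows. The class `EmaFilter` and the function `normalizeAngle` are hypothetical stand-ins, not the filter library used in the project, and the smoothing factor `alpha` is a free parameter chosen here only for illustration.

```cpp
#include <cmath>

// Hypothetical exponential moving average: y = alpha * x + (1 - alpha) * y_prev.
// The first sample initializes the filter.
class EmaFilter {
public:
    explicit EmaFilter(double alpha) : alpha_(alpha) {}

    double add(double sample) {
        if (!initialized_) {
            value_ = sample;
            initialized_ = true;
        } else {
            value_ = alpha_ * sample + (1.0 - alpha_) * value_;
        }
        return value_;
    }

    double value() const { return value_; }

private:
    double alpha_;
    double value_ = 0.0;
    bool initialized_ = false;
};

// Hypothetical helper: maps any angle in degrees into [0, 360).
double normalizeAngle(double deg) {
    const double a = std::fmod(deg, 360.0);
    return (a < 0.0) ? a + 360.0 : a;
}
```

One caveat with this simple form: averaging raw angles misbehaves near the 0/360 wrap-around (for example, samples of 359 and 1 average to 180), so the offset and normalization need to be chosen with that in mind.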

+

+## Code Implementation

+

+Here is a brief overview of the core code responsible for processing the IR data:

+

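+```cpp
+// Reads one newline-terminated message from the IR ring on Serial3.
+// The first character tags the message ('a' = angle, 'r' = strength);
+// the numeric payload starts at index 2.
+void IR::updateData() {
+    if (Serial3.available()) {
+        String input = Serial3.readStringUntil('\n');
+        if (input[0] == 'a') {
+            // Apply the configured offset before filtering the angle.
+            angle = input.substring(2).toDouble() + offset;
+            filterAngle.AddValue(angle);
+        } else if (input[0] == 'r') {
+            strength = input.substring(2).toDouble();
+            filterStr.AddValue(strength);
+        }
+    }
+}
+
+// Filtered (low-pass) ball angle.
+double IR::getAngle() {
+    return filterAngle.GetLowPass();
+}
+
+// Filtered (low-pass) signal strength.
+double IR::getStrength() {
+    return filterStr.GetLowPass();
+}
+```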

+This code reads data from the IR sensors, applies filtering to the angle and strength values, and adjusts them for precise detection.

+

+## Implementation and Testing

+

+Comprehensive testing was conducted to calibrate the system and verify its accuracy. By employing TSSP-58038 IR sensors and advanced filtering techniques, we achieved reliable and precise ball detection, enabling the robot to execute complex movements and strategies with effectiveness.

\ No newline at end of file

diff --git a/docs/SoccerLightweight/2024/Programming/LineDetection.md b/docs/SoccerLightweight/2024/Programming/LineDetection.md

new file mode 100644

index 0000000..e34401c

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/LineDetection.md

@@ -0,0 +1,30 @@

+# Line Detection

+

+In the Soccer Lightweight project, detecting the field's lines is essential for maintaining the robot's position and ensuring it stays within the playing boundaries. The line detection system differentiates between the white lines on the field and the green background, enabling precise navigation.

+

+

+## Line Detection Strategy

+

+The line detection strategy employs a combination of analog sensors and a multiplexer to read values from different parts of the field. The key components and steps in this strategy include:

+

+

+- **Analog Sensors**: Multiple analog sensors are positioned around the robot to read the field's color, distinguishing between white lines and the green background.

+- **Multiplexer (MUX)**: A multiplexer switches between different sensor inputs, allowing the robot to monitor several sensors using a single analog input pin on the Arduino.

+- **Threshold Values**: Threshold values are set to differentiate between white lines and the green field, determining whether the sensor is over a line or the field.

+

+## Implementation

+

+The implementation involves reading sensor values and comparing them to predefined thresholds. The key functions responsible for line detection are:

+

+

+### muxSensor()

+Reads values from the multiplexer and direct sensor pins, comparing them to threshold values to determine if they are over a white line or green field.

+

+### calculateDirection()

+Determines the direction of the detected line and adjusts the robot's heading accordingly.
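The two functions above are only described, not shown, so the following is a hedged sketch of the general idea rather than the project's `muxSensor()` or `calculateDirection()`. It assumes that a reading above its threshold means white, that each sensor has a known mounting angle, and that the three vectors have the same length; the name `escapeHeading` is hypothetical.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns the heading in degrees [0, 360) that points
// away from the detected line, or -1.0 when no sensor is over the line.
// Assumes readings, thresholds and sensorAnglesDeg all have the same size.
double escapeHeading(const std::vector<int>& readings,
                     const std::vector<int>& thresholds,
                     const std::vector<double>& sensorAnglesDeg) {
    const double kPi = 3.14159265358979323846;
    double x = 0.0;
    double y = 0.0;
    bool lineSeen = false;
    for (std::size_t i = 0; i < readings.size(); ++i) {
        if (readings[i] > thresholds[i]) {  // assumption: white reads higher
            const double theta = sensorAnglesDeg[i] * kPi / 180.0;
            x += std::cos(theta);
            y += std::sin(theta);
            lineSeen = true;
        }
    }
    if (!lineSeen) return -1.0;
    // Average direction of the triggered sensors, then turn around.
    const double lineDeg = std::atan2(y, x) * 180.0 / kPi;
    return std::fmod(lineDeg + 180.0 + 360.0, 360.0);
}
```

For example, with sensors at 0, 90, 180 and 270 degrees, a line seen only by the 0 degree sensor yields an escape heading of 180 degrees.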

+

+## Testing and Calibration

+The line detection system underwent extensive testing and calibration to ensure accuracy. Thresholds for distinguishing between white and green were fine-tuned based on experimental data, ensuring the robot can reliably detect lines under various lighting conditions and on different field surfaces.

+

+

+

diff --git a/docs/SoccerLightweight/2024/Programming/Movement.md b/docs/SoccerLightweight/2024/Programming/Movement.md

new file mode 100644

index 0000000..3f6c9e6

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/Movement.md

@@ -0,0 +1,62 @@

+# Motion Control: Holonomic Movement

+

+## Kinematic Model

+

+The kinematic model was crucial for accurately calculating the speed of each motor, ensuring precise movement in the desired direction. The key principles applied include:

+

+- **Motor Speed Calculation**: The speed of each motor was determined using kinematic equations based on the desired movement angle.

+- **Consistent Orientation**: Orientation data from sensors was used to correct the robot's movement, ensuring it always faced the goal.

+

+

+## Sensors and PID Controller

+

+We employed BNO-055 and MPU sensors to capture the robot's current orientation. A simplified PID (Proportional-Integral-Derivative) controller was implemented to correct any deviations from the desired orientation. This controller minimized the error between the current orientation and the target direction, facilitating smooth and accurate movement.

+

+## Implementation

+

+Here’s the core code that shows the kinematic equations and the corrections implemented using the PID controller:

+

+```cpp

+double PID::calculateError(int angle, int set_point) {

+ unsigned long time = millis();

+ double delta_time = (time - previous_time) / 1000.0;

+

+ control_error = set_point - angle;

+ double delta_error = (control_error - previous_error) / delta_time;

+ sum_error += control_error * delta_time;

+

+ sum_error = (sum_error > max_error) ? max_error : (sum_error < -max_error) ? -max_error : sum_error;

+

+ double control = (kP * control_error) + (kI * sum_error) + (kD * delta_error);

+

+ previous_error = control_error;

+ previous_time = time;

+

+ return control;

+}

+

+void Drive::linealMovementError(int degree, int speed, int error) {

+ float m1 = sin(((60 - degree) * PI / 180));

+ float m2 = sin(((180 - degree) * PI / 180));

+ float m3 = sin(((300 - degree) * PI / 180));

+

+ int speedA = (m1 * speed);

+ int speedB = (m2 * speed);

+ int speedC = (m3 * speed);

+

+ speedA -= error;

+ speedB -= error;

+ speedC -= error;

+

+ motor_1.setSpeed(speedA);

+ motor_2.setSpeed(speedB);

+ motor_3.setSpeed(speedC);

+}

+```

+

+### PID::calculateError

+The calculateError function computes the control signal using the PID algorithm, considering the discrepancy between the desired and current orientation.

+

+### Drive::linealMovementError

+The linealMovementError function calculates the speed for each motor based on the desired movement direction and applies corrections using the error from the PID controller.

+

diff --git a/docs/SoccerLightweight/2024/Programming/Vision.md b/docs/SoccerLightweight/2024/Programming/Vision.md

new file mode 100644

index 0000000..bf54cbf

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/Vision.md

@@ -0,0 +1,43 @@

+# Vision System: Goal Detection

+

+In our Soccer Lightweight project, detecting the goal is essential for strategic gameplay. We employed a Pixy2 camera, which facilitated blob color detection using the PixyMon IDE. This system enabled our robots to identify the bounding box of the goal and transmit the relevant data to the Arduino for processing.

+

+## Pixy2 Camera and PixyMon IDE

+

+The Pixy2 camera, integrated with the Open MV IDE, allowed for the detection of colored blobs representing the goals. The bounding box coordinates of these detected blobs were then transmitted to the Arduino, enabling the robots to navigate towards the goal or estimate the distance to it.

+

+## Implementation

+

+Here's the core code that shows how the vision system updates goal data and checks for detected goals:

+

+```cpp

+void Goals::updateData() {

+ pixy.ccc.getBlocks();

+ numGoals = pixy.ccc.numBlocks > 2 ? 2 : pixy.ccc.numBlocks;

+ for (uint8_t i = 0; i < numGoals; i++) {

+ goals[i].x = pixy.ccc.blocks[i].m_x;

+ goals[i].y = pixy.ccc.blocks[i].m_y;

+ goals[i].width = pixy.ccc.blocks[i].m_width;

+ goals[i].height = pixy.ccc.blocks[i].m_height;

+ goals[i].color = pixy.ccc.blocks[i].m_signature;

+ }

+}

+

+bool Goals::detected(uint8_t color) {

+ for (uint8_t i = 0; i < numGoals; i++) {

+ if (goals[i].color == color) {

+ return true;

+ }

+ }

+ return false;

+}

+```

+

+### updateData()

+This function retrieves the detected blocks from the Pixy2 camera and updates the goal data by storing the coordinates, dimensions, and color signatures of up to two detected goals.

+

+### detected()

+This function checks if a goal of the specified color has been detected. It iterates through the detected goals and returns true if a match is found.

+

+

+

diff --git a/docs/SoccerLightweight/2024/index.md b/docs/SoccerLightweight/2024/index.md

new file mode 100644

index 0000000..0755f02

--- /dev/null

+++ b/docs/SoccerLightweight/2024/index.md

@@ -0,0 +1,30 @@

+# @SoccerLightweight - 2024

+

+The main developments during 2024 with respect to previous years are the following:

+

+

+TODO: modify this.

+## Mechanics

+

+- [Jetson Nano](Jetson Nano/RunningJetson/)

+- d

+

+## Electronics

+

+- [General](Electronics/1%20General.md)

+

+- [Power Supply](Electronics/2%20Power%20Supply.md)

+

+- [PCBs Designs (PCB)](Electronics/3%20PCBs%20Designs.md)

+

+- [Electronic Components](Electronics/4%20Electronic%20Components.md)

+

+

+

+## Programming

+

+- [General](Programming/General.md)

+- [IR Detection](Programming/IRDetection.md)

+- [Line Detection](Programming/LineDetection.md)

+- [Motion Control](Programming/Movement.md)

+- [Vision Processing](Programming/Vision.md)

diff --git a/docs/SoccerLightweight/index.md b/docs/SoccerLightweight/index.md

new file mode 100644

index 0000000..9621c7e

--- /dev/null

+++ b/docs/SoccerLightweight/index.md

@@ -0,0 +1,12 @@

+# @SoccerLightweight

+

+

diff --git a/docs/soccer/Programming/Vision.md b/docs/SoccerLightweight/2023/Programming/Vision.md

similarity index 100%

rename from docs/soccer/Programming/Vision.md

rename to docs/SoccerLightweight/2023/Programming/Vision.md

diff --git a/docs/SoccerLightweight/2023/index.md b/docs/SoccerLightweight/2023/index.md

new file mode 100644

index 0000000..380b241

--- /dev/null

+++ b/docs/SoccerLightweight/2023/index.md

@@ -0,0 +1,35 @@

+# @SoccerLightweight - 2023

+

+The main developments during 2023 with respect to previous years are the following:

+

+## Sections

+

+### Mechanics

+

+- [General](Mechanics/0.General.md)

+

+- [Robot Lower Design](Mechanics/1.Robot_Lower_Design.md)

+

+- [Robot Upper Design](Mechanics/2.Robot_Upper_Design.md)

+

+### Electronics

+

+- [General](Electronics/1%20General.md)

+

+- [Power Supply](Electronics/2%20Power%20Supply.md)

+

+- [Printed Circuit Boards (PCB)](Electronics/3%20Printed%20Circuit%20Boards%20(PCB).md)

+

+- [Dribbler Implementation](Electronics/4%20Dribbler%20Implementation.md)

+

+### Programming

+

+- [General](Programming/General.md)

+

+- [IR Detection](Programming/IR_Detection.md)

+

+- [Line Detection](Programming/Line_Detection.md)

+

+- [Movement](Programming/Movement.md)

+

+- [Vision](Programming/Vision.md)

diff --git a/docs/SoccerLightweight/2024/Electronics/1 General.md b/docs/SoccerLightweight/2024/Electronics/1 General.md

new file mode 100644

index 0000000..2e47e6f

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/1 General.md

@@ -0,0 +1,5 @@

+# General

+

+For the TMR 2024, these are some general ideas:

+

+For the power supply, we used two LiPo battery packs: an 11.1 V, 2250 mAh pack for the motors, and a pack of two 3.7 V LiPo cells connected in series (7.4 V, 2500 mAh) for the logic, stepped down to 5 V by a voltage regulator. We chose these batteries for their high capacity and because they lasted comfortably through competition matches. The PCBs, designed in EasyEDA, comprise a main board with the microcontroller and sensors, boards for the TEPT5700 phototransistors (connected directly to the microcontroller for line detection), and a reused IR ring with TSSP58038 receivers and an ATmega328P that processes the infrared signals. The Arduino Mega Pro was chosen as the microcontroller for its ease of use and large number of pins. For movement, we used HP motors rated at 2200 RPM with TB6612FNG drivers, configured to handle up to 2.4 A continuous and 6 A peak to meet the motors' high current demand.

diff --git a/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md b/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md

new file mode 100644

index 0000000..78f0898

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/2 Power Supply.md

@@ -0,0 +1,13 @@

+# Power Supply

+

+For power, two batteries were used.

+

+## Movement Power

+For the motors, an 11.1 V, 2250 mAh battery was used. The motors are rated for 12 V, and we chose a high-capacity pack so that battery life would not be a concern during matches.

+

+## Logic Power

+For the logic, two 3.7 V LiPo batteries were connected in series to obtain 7.4 V; these batteries have a capacity of 2500 mAh.

+

+

+

+We chose this type of battery because it is well suited to electronics projects, and its runtime proved more than sufficient during the competition.

diff --git a/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md b/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md

new file mode 100644

index 0000000..1716775

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/3 PCBs Designs.md

@@ -0,0 +1,12 @@

+# PCBs Designs

+

+We designed the PCBs in EasyEDA. Three types of boards were made.

+

+## Main Board

+The main board included the microcontroller, as well as the sensors that would be used; additional digital/analog pins were added in case they were needed.

+

+## Phototransistors Board

+The other boards hold the phototransistors, which sit on the underside of the robot for line detection. We considered reading them through a multiplexer but, to reduce complexity, connected them directly to the analog pins of the microcontroller.

+

+## IR Ring Board

+We reused last year's IR ring because of its complexity and the short time available to develop a new one. The ring was easy to integrate electronically because it connects to the microcontroller through a serial port.

diff --git a/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md b/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md

new file mode 100644

index 0000000..137073d

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Electronics/4 Electronic Components.md

@@ -0,0 +1,13 @@

+# Electronic Components

+

+## Microcontroller

+We chose the Arduino Mega Pro as the microcontroller for its ease of use in both electronics and programming. It offers 54 digital I/O pins (15 of which support PWM output) and 16 analog inputs, among other features.

+

+## Sensors

+For the orientation of the robot, we use a BNO055 sensor, which offers good performance and accuracy and connects to the microcontroller via I2C.

+For line detection, we used TEPT5700 phototransistors because their sensitivity range does not include infrared light, which is crucial so that they are not affected by the signals from the IR ball.

+

+TSSP58038 digital IR receivers detect the IR signals emitted by the ball, and a custom PCB was designed for them. The IR ring is made up of 12 IR receivers, and an ATmega328P processes the infrared signals and computes the ball vector.

+

+## Drivers and motors

+For motion, we use HP motors rated at 2200 RPM with TB6612FNG drivers. We bridge the input and output signals of each driver to obtain up to 2.4 A continuous and up to 6 A peak, since the motors draw a high current.

diff --git a/docs/SoccerLightweight/2024/Programming/General.md b/docs/SoccerLightweight/2024/Programming/General.md

new file mode 100644

index 0000000..b144e2d

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/General.md

@@ -0,0 +1,34 @@

+# General Overview & Strategy

+

+"Soccer Lightweight 2024" is an autonomous robot competition featuring 2 vs 2 soccer matches. The Soccer Lightweight team combined its knowledge of robotics with its passion for sports to design a robot that plays soccer with agility and precision.

+

+The strategy for the Soccer Lightweight competition was a blend of offensive and defensive tactics, realized with programming techniques and meticulous strategic planning. Core aspects of this strategy include:

+

+## Key Elements of the Strategy

+

+### Role Assignment

+Each robot is assigned a specific role, such as a striker or a goalkeeper. This specialization allows for focused development of skills and tactics suitable for each position.

+

+### Real-Time Vision Processing

+Using the Pixy2 camera, the robots can identify and track the ball and goals in real-time.

+

+### Holonomic Movement

+Implemented through kinematic equations, this allows the robots to move smoothly and rapidly in any direction.

+

+### Line Detection

+Utilizing phototransistors and IR sensors, the robots can detect field boundaries, ensuring they stay within the playing area and avoid penalties.

+

+## Tools and Technologies

+The main tools and software used in the development of the Soccer Lightweight robot include:

+

+- **Arduino**: Used for programming the microcontroller that controls the robot's actions.

+- **Visual Studio Code**: The primary integrated development environment (IDE) for writing and debugging code.

+- **PixyMon**: Utilized for the calibration and detection of color blobs through the camera, essential for the robot's vision system.

+

+## Abstract

+The Soccer Lightweight robot exemplifies robotics through its integration of advanced motion algorithms and sensory systems. Designed for optimum performance on the soccer field, this robot utilizes real-time vision and sensory integration to accurately identify the ball and goals, enhancing its competitiveness. The engineering prioritizes agility within strict weight constraints, enabling the robot to execute complex maneuvers and strategies effectively during matches.

+

+

+## Algorithm of Attacking and Defending Robot

+

+

diff --git a/docs/SoccerLightweight/2024/Programming/IRDetection.md b/docs/SoccerLightweight/2024/Programming/IRDetection.md

new file mode 100644

index 0000000..3385139

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/IRDetection.md

@@ -0,0 +1,53 @@

+# IR Detection

+

+In the Soccer Lightweight project, precise robot movement and positioning on the field were critical for successful gameplay. This required accurately determining both the angle and the distance to the ball. To achieve this, an IR ring with 12 TSSP-58038 IR receivers was designed.

+

+

+## IR Ring Design and Functionality

+

+The IR ring consists of 12 TSSP-58038 IR receivers arranged in a circular ring. This configuration enables the robot to detect the angle of the ball relative to its position and estimate the distance to the ball. The primary objectives of the IR detection system include:

+

+

+- **Angle Detection**: To determine the direction of the ball.

+- **Distance Measurement**: To estimate how far the ball is from the robot.

+

+## Mathematical Calculations

+

+The IR detection system processes and filters the signals received from the IR sensors to determine the ball's angle and signal strength. Referencing the Yunit team's research from 2017, the calculations can be summarized as follows:

+

+- **Signal Processing**: The IR sensors detect the ball and send angle and strength data to the Arduino.

+- **Filtering**: We apply an exponential moving average (EMA) filter to smooth the data, ensuring stable and accurate readings.

+- **Angle Adjustment**: The raw angle data is adjusted to account for any offsets and converted to a 0-360 degree format for easier interpretation.

+- **Strength Calculation**: The strength of the signal indicates the distance to the ball, with stronger signals meaning the ball is closer.
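
The filtering and angle-adjustment steps above can be sketched as follows. This is a minimal illustration, not the team's filter library: the `EmaFilter` class, the `wrapTo360` helper, and the choice of `alpha` are hypothetical names and values introduced here.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical exponential moving average (EMA) low-pass filter.
// alpha near 1 follows new readings quickly; alpha near 0 smooths more.
class EmaFilter {
public:
    explicit EmaFilter(double alpha) : alpha_(alpha) {
        assert(alpha > 0.0 && alpha <= 1.0);
    }

    // Feed one raw sample and return the updated filtered value.
    double addValue(double sample) {
        if (!initialized_) {
            value_ = sample;  // seed the filter with the first reading
            initialized_ = true;
        } else {
            value_ = alpha_ * sample + (1.0 - alpha_) * value_;
        }
        return value_;
    }

    double value() const { return value_; }

private:
    double alpha_;
    double value_ = 0.0;
    bool initialized_ = false;
};

// Map any angle in degrees into the range [0, 360).
double wrapTo360(double degrees) {
    double wrapped = std::fmod(degrees, 360.0);
    if (wrapped < 0.0) wrapped += 360.0;
    return wrapped;
}
```

A plain EMA applied to angles can misbehave when readings jump across the 0/360 boundary, so a real implementation may need to filter before wrapping or filter the sine and cosine components separately.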

+

+## Code Implementation

+

+Here is a brief overview of the core code responsible for processing the IR data:

+

+```cpp

+void IR::updateData() {

+ if (Serial3.available()) {

+ String input = Serial3.readStringUntil('\n');

+ if (input[0] == 'a') {

+ angle = input.substring(2).toDouble() + offset;

+ filterAngle.AddValue(angle);

+ } else if (input[0] == 'r') {

+ strength = input.substring(2).toDouble();

+ filterStr.AddValue(strength);

+ }

+ }

+}

+

+double IR::getAngle() {

+ return filterAngle.GetLowPass();

+}

+

+double IR::getStrength() {

+ return filterStr.GetLowPass();

+}

+```

+This code reads the angle (`a`) and strength (`r`) messages that the IR ring sends over `Serial3`, adds the offset to the angle, and low-pass filters both values.

+

+## Implementation and Testing

+

+Comprehensive testing was conducted to calibrate the system and verify its accuracy. By combining TSSP-58038 IR receivers with EMA filtering, we achieved reliable ball detection, enabling the robot to execute complex movements and strategies effectively.

\ No newline at end of file

diff --git a/docs/SoccerLightweight/2024/Programming/LineDetection.md b/docs/SoccerLightweight/2024/Programming/LineDetection.md

new file mode 100644

index 0000000..e34401c

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/LineDetection.md

@@ -0,0 +1,30 @@

+# Line Detection

+

+In the Soccer Lightweight project, detecting the field's lines is essential for maintaining the robot's position and ensuring it stays within the playing boundaries. The line detection system differentiates between the white lines on the field and the green background, enabling precise navigation.

+

+

+## Line Detection Strategy

+

+The line detection strategy employs a combination of analog sensors and a multiplexer to read values from different parts of the field. The key components and steps in this strategy include:

+

+

+- **Analog Sensors**: Multiple analog sensors are positioned around the robot to read the field's color, distinguishing between white lines and the green background.

+- **Multiplexer (MUX)**: A multiplexer switches between different sensor inputs, allowing the robot to monitor several sensors using a single analog input pin on the Arduino.

+- **Threshold Values**: Threshold values are set to differentiate between white lines and the green field, determining whether the sensor is over a line or the field.

+

+## Implementation

+

+The implementation involves reading sensor values and comparing them to predefined thresholds. The key functions responsible for line detection are:

+

+

+### muxSensor()

+Reads values from the multiplexer and direct sensor pins, comparing them to threshold values to determine if they are over a white line or green field.

+

+### calculateDirection()

+Determines the direction of the detected line and adjusts the robot's heading accordingly.
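
The thresholding and direction steps described above can be sketched as follows. This is only an illustration under stated assumptions: the threshold value, the sensor mounting angles, the convention that a higher reading means white, and the names `isOverLine` and `escapeDirection` are all hypothetical and are not taken from the team's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical threshold: readings above it are treated as white line.
constexpr int kLineThreshold = 600;

bool isOverLine(int reading, int threshold = kLineThreshold) {
    return reading > threshold;
}

// Returns the heading in degrees, in [0, 360), that points away from the
// detected line, or -1 when no sensor sees a line. sensorAngles[i] is the
// assumed mounting angle of sensor i on the robot.
double escapeDirection(const int* readings, const double* sensorAngles,
                       std::size_t count, int threshold = kLineThreshold) {
    assert(readings != nullptr && sensorAngles != nullptr);
    const double kPi = 3.14159265358979323846;
    double sumX = 0.0;
    double sumY = 0.0;
    bool anyLine = false;
    for (std::size_t i = 0; i < count; ++i) {
        if (isOverLine(readings[i], threshold)) {
            // Accumulate a unit vector toward each sensor that sees white.
            sumX += std::cos(sensorAngles[i] * kPi / 180.0);
            sumY += std::sin(sensorAngles[i] * kPi / 180.0);
            anyLine = true;
        }
    }
    if (!anyLine) return -1.0;
    const double lineDeg = std::atan2(sumY, sumX) * 180.0 / kPi;
    double away = std::fmod(lineDeg + 180.0, 360.0);  // opposite direction
    if (away < 0.0) away += 360.0;
    return away;
}
```

Averaging unit vectors rather than raw angles keeps the result well defined when sensors on both sides of the 0/360 boundary trigger at once.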

+

+## Testing and Calibration

+The line detection system underwent extensive testing and calibration to ensure accuracy. Thresholds for distinguishing between white and green were fine-tuned based on experimental data, ensuring the robot can reliably detect lines under various lighting conditions and on different field surfaces.

+

+

+

diff --git a/docs/SoccerLightweight/2024/Programming/Movement.md b/docs/SoccerLightweight/2024/Programming/Movement.md

new file mode 100644

index 0000000..3f6c9e6

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/Movement.md

@@ -0,0 +1,62 @@

+# Motion Control: Holonomic Movement

+

+## Kinematic Model

+

+The kinematic model was crucial for accurately calculating the speed of each motor, ensuring precise movement in the desired direction. The key principles applied include:

+

+- **Motor Speed Calculation**: The speed of each motor was determined using kinematic equations based on the desired movement angle.

+- **Consistent Orientation**: Orientation data from sensors was used to correct the robot's movement, ensuring it always faced the goal.

+

+

+## Sensors and PID Controller

+

+We employed BNO055 and MPU sensors to capture the robot's current orientation. A simplified PID (Proportional-Integral-Derivative) controller was implemented to correct any deviations from the desired orientation. This controller minimized the error between the current orientation and the target direction, facilitating smooth and accurate movement.

+

+## Implementation

+

+Here’s the core code that shows the kinematic equations and the corrections implemented using the PID controller:

+

+```cpp

+double PID::calculateError(int angle, int set_point) {

+ unsigned long time = millis();

+ double delta_time = (time - previous_time) / 1000.0;

+ if (delta_time <= 0) delta_time = 0.001; // avoid dividing by zero when called twice within 1 ms

+

+ control_error = set_point - angle;

+ double delta_error = (control_error - previous_error) / delta_time;

+ sum_error += control_error * delta_time;

+

+ sum_error = (sum_error > max_error) ? max_error : (sum_error < -max_error) ? -max_error : sum_error;

+

+ double control = (kP * control_error) + (kI * sum_error) + (kD * delta_error);

+

+ previous_error = control_error;

+ previous_time = time;

+

+ return control;

+}

+

+void Drive::linealMovementError(int degree, int speed, int error) {

+ float m1 = sin(((60 - degree) * PI / 180));

+ float m2 = sin(((180 - degree) * PI / 180));

+ float m3 = sin(((300 - degree) * PI / 180));

+

+ int speedA = (m1 * speed);

+ int speedB = (m2 * speed);

+ int speedC = (m3 * speed);

+

+ speedA -= error;

+ speedB -= error;

+ speedC -= error;

+

+ motor_1.setSpeed(speedA);

+ motor_2.setSpeed(speedB);

+ motor_3.setSpeed(speedC);

+}

+```

+

+### PID::calculateError

+The calculateError function computes the control signal using the PID algorithm, considering the discrepancy between the desired and current orientation.
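
As a hardware-independent sketch of the same idea, the version below takes the elapsed time as a parameter instead of calling `millis()`. It also wraps the orientation error into (-180, 180], which is an assumption added here for headings expressed in degrees; the original function subtracts the angles directly. The names `angleError` and `SimplePid` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Shortest signed difference set_point - angle, in degrees, in (-180, 180].
double angleError(double set_point, double angle) {
    double error = std::fmod(set_point - angle, 360.0);
    if (error > 180.0) error -= 360.0;
    if (error <= -180.0) error += 360.0;
    return error;
}

// Hypothetical PID controller with a clamped integral term.
class SimplePid {
public:
    SimplePid(double kP, double kI, double kD, double max_error)
        : kP_(kP), kI_(kI), kD_(kD), max_error_(max_error) {
        assert(max_error >= 0.0);
    }

    // delta_time is the time since the previous call, in seconds.
    double update(double angle, double set_point, double delta_time) {
        const double error = angleError(set_point, angle);
        // Skip the derivative when no time has elapsed to avoid dividing by zero.
        const double derivative =
            delta_time > 0.0 ? (error - previous_error_) / delta_time : 0.0;
        sum_error_ += error * delta_time;
        // Clamp the integral term to limit windup.
        sum_error_ = std::max(-max_error_, std::min(max_error_, sum_error_));
        previous_error_ = error;
        return kP_ * error + kI_ * sum_error_ + kD_ * derivative;
    }

private:
    double kP_, kI_, kD_, max_error_;
    double sum_error_ = 0.0;
    double previous_error_ = 0.0;
};
```

With wrapping, a robot at 350 degrees with a set point of 10 degrees sees an error of 20 degrees rather than -340, so it turns the short way around.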

+

+### Drive::linealMovementError

+The linealMovementError function calculates the speed for each motor based on the desired movement direction and applies corrections using the error from the PID controller.
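
To make the kinematic equations concrete, the sketch below reproduces the same arithmetic without the motor objects so that it can be checked on a desktop compiler. The `WheelSpeeds` struct, the `wheelSpeeds` function, and the assumed 0 to 255 speed range are hypothetical; the wheel angles of 60, 180, and 300 degrees come from the code above.

```cpp
#include <cassert>
#include <cmath>

struct WheelSpeeds {
    int a;
    int b;
    int c;
};

// Computes the three wheel speeds for a movement direction in degrees.
// correction is the PID output subtracted from every wheel to rotate the robot.
WheelSpeeds wheelSpeeds(int degree, int speed, int correction) {
    assert(speed >= 0 && speed <= 255);  // assumed PWM-style speed range
    const double kPi = 3.14159265358979323846;
    const double wheelAngles[3] = {60.0, 180.0, 300.0};
    int out[3];
    for (int i = 0; i < 3; ++i) {
        const double factor = std::sin((wheelAngles[i] - degree) * kPi / 180.0);
        // The cast truncates toward zero, as the int conversion above does.
        out[i] = static_cast<int>(factor * speed) - correction;
    }
    return WheelSpeeds{out[0], out[1], out[2]};
}
```

For `degree = 0` and `speed = 200`, the factors are sin(60°) ≈ 0.866, sin(180°) ≈ 0, and sin(300°) ≈ -0.866, giving speeds of 173, 0, and -173. Subtracting the same correction from all three wheels adds a pure rotation on top of the translation.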

+

diff --git a/docs/SoccerLightweight/2024/Programming/Vision.md b/docs/SoccerLightweight/2024/Programming/Vision.md

new file mode 100644

index 0000000..bf54cbf

--- /dev/null

+++ b/docs/SoccerLightweight/2024/Programming/Vision.md

@@ -0,0 +1,43 @@

+# Vision System: Goal Detection

+

+In our Soccer Lightweight project, detecting the goal is essential for strategic gameplay. We employed a Pixy2 camera, which facilitated blob color detection using the PixyMon IDE. This system enabled our robots to identify the bounding box of the goal and transmit the relevant data to the Arduino for processing.

+

+## Pixy2 Camera and PixyMon IDE

+

+The Pixy2 camera, configured through PixyMon, allowed for the detection of colored blobs representing the goals. The bounding box coordinates of these detected blobs were then transmitted to the Arduino, enabling the robots to navigate towards the goal or estimate the distance to it.

+

+## Implementation

+

+Here's the core code that shows how the vision system updates goal data and checks for detected goals:

+

+```cpp

+void Goals::updateData() {

+ pixy.ccc.getBlocks();

+ numGoals = pixy.ccc.numBlocks > 2 ? 2 : pixy.ccc.numBlocks;

+ for (uint8_t i = 0; i < numGoals; i++) {

+ goals[i].x = pixy.ccc.blocks[i].m_x;

+ goals[i].y = pixy.ccc.blocks[i].m_y;

+ goals[i].width = pixy.ccc.blocks[i].m_width;

+ goals[i].height = pixy.ccc.blocks[i].m_height;

+ goals[i].color = pixy.ccc.blocks[i].m_signature;

+ }

+}

+

+bool Goals::detected(uint8_t color) {

+ for (uint8_t i = 0; i < numGoals; i++) {

+ if (goals[i].color == color) {

+ return true;

+ }

+ }

+ return false;

+}

+```

+

+### updateData()

+This function retrieves the detected blocks from the Pixy2 camera and updates the goal data by storing the coordinates, dimensions, and color signatures of up to two detected goals.

+

+### detected()

+This function checks if a goal of the specified color has been detected. It iterates through the detected goals and returns true if a match is found.
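
One way to turn a stored bounding-box position into a steering angle is a linear pixel-to-angle mapping. The sketch below is an assumption-laden illustration, not part of the team's code: the frame width of 316 pixels and the 60 degree horizontal field of view are assumed Pixy2 values, and `goalBearingDeg` is a hypothetical helper.

```cpp
#include <cassert>

// Assumed Pixy2 frame width (pixels) and horizontal field of view (degrees).
constexpr int kFrameWidth = 316;
constexpr double kHorizontalFovDeg = 60.0;

// Approximate bearing of a blob from its x coordinate.
// Negative values are left of the image center, positive values right.
double goalBearingDeg(int x) {
    assert(x >= 0 && x <= kFrameWidth);
    const double center = kFrameWidth / 2.0;
    return (x - center) * (kHorizontalFovDeg / kFrameWidth);
}
```

The linear mapping ignores lens distortion, so it is only a rough estimate near the edges of the image.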

+

+

+

diff --git a/docs/SoccerLightweight/2024/index.md b/docs/SoccerLightweight/2024/index.md

new file mode 100644

index 0000000..0755f02

--- /dev/null

+++ b/docs/SoccerLightweight/2024/index.md

@@ -0,0 +1,30 @@

+# @SoccerLightweight - 2024

+

+The main developments during 2024 with respect to previous years are the following:

+

+

+TODO: modify this.

+## Mechanics

+

+- [Jetson Nano](Jetson Nano/RunningJetson/)

+- d

+

+## Electronics

+

+- [General](Electronics/1%20General.md)

+

+- [Power Supply](Electronics/2%20Power%20Supply.md)

+

+- [PCBs Designs (PCB)](Electronics/3%20PCBs%20Designs.md)

+

+- [Electronic Components](Electronics/4%20Electronic%20Components.md)

+

+

+

+## Programming

+

+- [General](Programming/General.md)

+- [IR Detection](Programming/IRDetection.md)

+- [Line Detection](Programming/LineDetection.md)

+- [Motion Control](Programming/Movement.md)

+- [Vision Processing](Programming/Vision.md)

diff --git a/docs/SoccerLightweight/index.md b/docs/SoccerLightweight/index.md

new file mode 100644

index 0000000..9621c7e

--- /dev/null

+++ b/docs/SoccerLightweight/index.md

@@ -0,0 +1,12 @@

+# @SoccerLightweight

+

+

+Soccer Lightweight is a 2 vs 2 autonomous robot competition in which robots from opposing teams play soccer matches. The twist of this competition is that robots have to weigh less than 1.1 kg, hence the name "Soccer Lightweight".

+

+

+## Competition

+

+See the [rules](https://robocupjuniortc.github.io/soccer-rules/master/rules.pdf) for Soccer Lightweight.

+

+

diff --git a/docs/SoccerOpen/2024/Communication/index.md b/docs/SoccerOpen/2024/Communication/index.md

new file mode 100644

index 0000000..da7f61b

--- /dev/null

+++ b/docs/SoccerOpen/2024/Communication/index.md

@@ -0,0 +1,24 @@

+Because the robot uses two microcontrollers, we rely on several serial communication channels. Our pipeline follows this structure:

+

+

+

+Note that the first data package originates in the camera, which sends the following variables to the Raspberry Pi Pico:

+

+```

+filtered_angle

+ball_distance

+ball_angle

+goal_angle

+distance_pixels

+```

+

+In our case, the Raspberry Pi Pico only formats the data package as a String so that the ESP32 can interpret it and work with it.

+

+Once the data reaches the ESP32, it is handled in the following steps:

+

+1. Reading data from serial input

+2. Initializing an array and an index

+3. Tokenizing the string

+4. Converting tokens to integer and storing in array

+5. Repeating the process until all 5 values have been populated

+6. Assigning parsed values to variables
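
The steps above can be sketched as follows. This is a minimal illustration under assumptions: the comma delimiter, the `CameraPacket` struct, and the `parsePacket` function are hypothetical, and the field order simply follows the variable list shown earlier.

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

// Hypothetical container for the five values sent by the camera.
struct CameraPacket {
    int filtered_angle;
    int ball_distance;
    int ball_angle;
    int goal_angle;
    int distance_pixels;
};

// Parses a line such as "12,340,-25,90,77" in place (strtok modifies the
// buffer). Returns true only when all five values were read.
bool parsePacket(char* line, CameraPacket& out) {
    assert(line != nullptr);
    int values[5] = {0, 0, 0, 0, 0};
    int index = 0;
    char* token = std::strtok(line, ",");       // tokenize the string
    while (token != nullptr && index < 5) {
        values[index++] = std::atoi(token);     // convert token to integer
        token = std::strtok(nullptr, ",");
    }
    if (index < 5) return false;                // incomplete packet
    out = CameraPacket{values[0], values[1], values[2], values[3], values[4]};
    return true;
}
```

Rejecting packets with fewer than five values keeps a truncated serial line from overwriting good data with zeros.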

\ No newline at end of file

diff --git a/docs/SoccerOpen/2024/Control/index.md b/docs/SoccerOpen/2024/Control/index.md

new file mode 100644

index 0000000..1e23d3b

--- /dev/null

+++ b/docs/SoccerOpen/2024/Control/index.md

@@ -0,0 +1,45 @@

+### Kinematics

+

+For robot control, we created our motor libraries following these categories:

+

+>> 📁 motor

+

+>> 📁 motors

+

+Below is a UML diagram showing the relation between both classes and their interaction:

+

+

+

+All of our kinematic logic is found in the Motors class, which contains two relevant methods:

+

+```

+MoveMotors(int degree, uint8_t speed)

+MoveMotorsImu(int degree, uint8_t speed, double speed_w)

+```

+

+Both methods rely on the following kinematic equations:

+

+```

+float m1 = cos(((45 + degree) * PI / 180));

+float m2 = cos(((135 + degree) * PI / 180));

+float m3 = cos(((225 + degree) * PI / 180));

+float m4 = cos(((315 + degree) * PI / 180));

+```

+Which models the robot below:

+

+

+

+When using the IMU sensor, we implemented an angular-velocity (omega) PID controller to regulate the robot's heading while it moves in a specific direction. We also implemented a translational PID to regulate speed when approaching the ball. To keep the code reusable, we encapsulated this logic in a PID class.

+

+### IMU Sensor

+

+For the IMU sensor, we used the [Adafruit_BNO055](https://github.com/adafruit/Adafruit_BNO055/tree/master) library and wrapped it in our own class. Using the yaw angle, we calculate the setpoint and error for our PID controller. Below is the logic followed to match angles in the robot frame with the real world.

+

+

+

+Note that yaw is calculated from the orientation quaternion `q`, as shown in the equation below:

+

+`var yaw = atan2(2.0*(q.y*q.z + q.w*q.x), q.w*q.w - q.x*q.x - q.y*q.y + q.z*q.z);`

+

+With these classes, we can control the robot's movement logically.

\ No newline at end of file

diff --git a/docs/SoccerOpen/2024/Logic/index.md b/docs/SoccerOpen/2024/Logic/index.md

new file mode 100644

index 0000000..2ace29f

--- /dev/null

+++ b/docs/SoccerOpen/2024/Logic/index.md

@@ -0,0 +1,63 @@

+The main logic of the algorithm is split across two main files, one for the ESP32 and one for the Raspberry Pi Pico. Our logic hierarchy follows this structure:

+

+>> Goalkeeper

+

+>> - ESP32_Goalkeeper

+

+>> - Pico_Goalkeeper

+

+

+>> Striker

+

+>> - ESP32_Striker

+

+>> - Pico_Striker

+

+### Goalkeeper Logic

+

+The diagram below shows the logic flow for Goalkeeper in the Raspberry Pi Pico:

+

+

+

+The logic that centers the robot in the goal follows the pathway below:

+

+1. Check if the ball is found:

+

+ - If the ball is found (`ball_found` is true), proceed to the next step.

+

+2. Determine robot movement based on ball angle:

+

+ - If the ball's angle is within -15 to 15 degrees (indicating the ball is almost directly ahead), move the robot forward towards the ball using a specific speed (`speed_t_ball`) and rotation speed (`speed_w`).

+ - If the ball's angle is outside this range, adjust the ball angle to ensure it's within a 0-360 degree range and calculate a "differential" based on the ball angle. This differential is used to adjust the ball angle to a "`ponderated_angle`," which accounts for the ball's position relative to the robot. The robot then moves in the direction of this adjusted angle with the same speed and rotation speed as before.

+

+3. Adjust robot position if the ball is not found but the goal angle is known:

+

+ - If the goal angle is positive and the ball is not found:

+ - If the goal angle is less than a certain threshold to the left, move the robot to the left (angle 270 degrees) to align with the goal using a specific speed (`speed_t_goal`) and rotation speed (`speed_w`).

+ - If the goal angle is more than a certain threshold to the right, move the robot to the right (angle 90 degrees) to align with the goal using the same speeds.

+ - If the goal angle is within the threshold, stop the robot's lateral movement but continue rotating at the current speed (`speed_w`).

+

+### Striker Logic

+

+The diagram below shows the logic flow for Striker in the Raspberry Pi Pico:

+

+

+

+When the ball is found, the logic follows the pathway below:

+

+1. Check for Ball Detection or Distance Conditions:

+

+ - The robot checks if the ball is found, if the distance to the ball is greater than 100 units, or if the distance is exactly 0 units. If any of these conditions are true, it proceeds with further checks; otherwise, it moves to the else block.

+

+2. Log Ball Found:

+

+ - If the condition is true, it logs "pelota found" to the Serial monitor, indicating that the ball has been detected or the distance conditions are met.

+

+3. Direct Approach or Adjust Angle:

+

+ - Direct Approach: If the ball's angle from the robot (considering a 180-degree field of view) is between -15 and 15 degrees, it means the ball is almost directly in front of the robot. The robot then moves directly towards the ball. The movement command uses an angle of 0 degrees, the absolute value of a predefined speed towards the ball (`speed_t_ball`), and a rotation speed (`speed_w`).

+ - Adjust Angle: If the ball's angle is outside the -15 to 15-degree range, the robot needs to adjust its angle to approach the ball correctly. It calculates a "differential" based on the ball's angle (after adjusting the angle to a 0-360 range) and a factor of 0.09. This differential is used to calculate a "`ponderated_angle`," which adjusts the robot's movement direction either by subtracting or adding this differential, depending on the ball's angle relative to 180 degrees. The robot then moves in this adjusted direction with the same speed and rotation speed.

+

+4. Default Movement:

+

+ - If none of the conditions for the ball being found or the specific distance conditions are met, the robot executes a default movement. It turns around (180 degrees) with a speed of 170 units and the predefined rotation speed (`speed_w`), then pauses for 110 milliseconds.
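
The four steps above can be condensed into one function. This is a sketch under stated assumptions, not the code running on the robot: the name `striker_step` and the tuple return value are hypothetical, the -15 to 15 range is treated as inclusive, and the exact differential formula (angle in the 0-360 range multiplied by 0.09, subtracted below 180 degrees and added otherwise) is our reading of the description.

```python
def striker_step(ball_found, ball_distance, ball_angle, speed_t_ball, speed_w):
    """Return (direction_deg, speed, rotation_speed, pause_ms) for one pass."""
    if ball_found or ball_distance > 100 or ball_distance == 0:
        print("pelota found")  # same log message as described in step 2
        if -15 <= ball_angle <= 15:
            # Direct approach: ball is almost straight ahead.
            return (0, abs(speed_t_ball), speed_w, 0)
        # Adjust angle: map to 0-360, then weight by the 0.09 factor.
        angle_360 = ball_angle % 360
        differential = angle_360 * 0.09
        if angle_360 < 180:
            ponderated_angle = angle_360 - differential  # assumed sign
        else:
            ponderated_angle = angle_360 + differential  # assumed sign
        return (ponderated_angle, abs(speed_t_ball), speed_w, 0)
    # Default movement: turn around at speed 170, then pause 110 ms.
    return (180, 170, speed_w, 110)
```

Returning the command instead of driving the motors directly keeps the decision logic easy to check away from the hardware.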

\ No newline at end of file

diff --git a/docs/SoccerOpen/2024/Mechanics/ACADFile.md b/docs/SoccerOpen/2024/Mechanics/ACADFile.md

new file mode 100644

index 0000000..55de421

--- /dev/null

+++ b/docs/SoccerOpen/2024/Mechanics/ACADFile.md

@@ -0,0 +1,3 @@

+# CAD (STEP)

+

+Full STEP file: [STEP](https://drive.google.com/file/d/1yx2i0Vv63EeMujKTLEmpld-wuCgQxQ_m/view?usp=sharing).

\ No newline at end of file

diff --git a/docs/SoccerOpen/2024/Mechanics/ARobotSystems.md b/docs/SoccerOpen/2024/Mechanics/ARobotSystems.md

new file mode 100644

index 0000000..ed37d57

--- /dev/null

+++ b/docs/SoccerOpen/2024/Mechanics/ARobotSystems.md

@@ -0,0 +1,21 @@

+# Robot Systems

+

+## Structure Materials:

+

+Robot chassis: Primarily MDF with acrylic reinforcements, secured with brass spacers and screws. 3D printed pieces were used for non-critical areas.

+

+

+

+

+## Dribbler:

+

+Made from 1/16 in aluminum, with some pieces bent to shape. Designed for easy adjustment to accommodate manufacturing error.

+"Roller" component: 3D printed pulley paired with a black silicone molded shape for its gripping abilities.

+

+

+

+

+## Kicker:

+A 5 V solenoid was used because of its size and availability. The 3D-printed kicker piece was inserted using heat.

+

+

diff --git a/docs/SoccerOpen/2024/Mechanics/CAD.md b/docs/SoccerOpen/2024/Mechanics/CAD.md

new file mode 100644

index 0000000..55c49b5

--- /dev/null

+++ b/docs/SoccerOpen/2024/Mechanics/CAD.md

@@ -0,0 +1,86 @@

+

+

+

+

+

+# Mirror design

+The following code gave us a rough estimate of what our vision would look like, although the result changes slightly with the thickness added by the chroming process. To correct for calculation errors, we used a linear regression model, which was more accurate than the theoretical results alone.

+

+    '''
+    parabola = (0.045 + 0.45x^2)^(1/2)
+    derivative = (1/2)(0.9x)(0.045 + 0.45x^2)^(-1/2)
+    '''
+    import math
+
+    import matplotlib.pyplot as plt
+
+    AlturaCamara_Curva = 5  # cm from the camera to the nearest point of the curve
+    Altura_suelo = 15.4  # cm of height above the floor
+
+    ran = []
+    x = 0
+    print('--------------------------x values:')
+    for i in range(25 + 1):  # list from 0 to 2.5 (half of the parabola)
+        ran.append(x)
+        x = x + 0.1
+    for i in ran:
+        print(i)
+
+    print('--------------------------tangent angles:')
+    anguTans = []
+    for x in ran:
+        tangente = (1/2)*(0.9*x)*(0.045+0.45*x**2)**(-1/2)  # tangent slope from the derivative
+        angulo = math.degrees(math.atan(tangente))  # angle of the tangent
+        anguTans.append(angulo)
+        print(angulo)
+
+    print('--------------------------camera angles:')
+    # find the angle from the camera to the parabola
+    ai = []
+    for x in ran:
+        y = ((0.1+x**2)*0.45)**(1/2) + AlturaCamara_Curva
+        if x == 0:
+            ai.append(90)  # vertical ray; stored value now matches the printed one
+            print(90)
+        else:
+            angulo = math.degrees(math.atan(y/x))
+            ai.append(angulo)
+            print(angulo)
+
+    print('--------------------------triangle 2 angles:')
+    dists = []
+    orden = 0
+    # find the distance
+    for x in ran:
+        if x == 0:
+            dists.append(0)
+            print(0)
+        else:
+            Y = 2*(180-90-(ai[orden]-anguTans[orden]))
+            X = (180-Y)/2
+            anguTriangulo2 = X-anguTans[orden]
+            print(anguTriangulo2)
+            distTotal = x + (Altura_suelo/(math.tan(math.radians(anguTriangulo2))))
+            dists.append(distTotal)
+        orden = orden+1  # advance the index on every x, including x == 0
+    print('--------------------------total distances:')
+    for i in dists:
+        print(i)
+
+    plt.figure(figsize=(8, 5))
+    plt.plot(ran, dists, label="Total Distance")
+    plt.xlabel("Mirror cm")
+    plt.ylabel("Total Distance")
+    plt.title("Total Distance vs. Mirror cm")
+    plt.grid(True)
+    plt.legend()
+    plt.show()
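
The angle bookkeeping in the last loop simplifies algebraically: `X` works out to `ai - anguTans`, so `anguTriangulo2` equals `ai - 2*anguTans`. The sketch below packs the same calculation into one function for a single `x`. The function name `mirror_ground_distance` and the two constant names are ours, introduced only for this illustration; the numeric values are the ones used in the script.

```python
import math

CAMERA_TO_CURVE_CM = 5.0      # AlturaCamara_Curva in the script
HEIGHT_ABOVE_FLOOR_CM = 15.4  # Altura_suelo in the script


def mirror_ground_distance(x):
    """Total distance for a point at horizontal position x on the mirror curve."""
    if x == 0:
        return 0.0
    curve = math.sqrt(0.045 + 0.45 * x**2)
    # Slope of the curve: (1/2)(0.9x)(0.045 + 0.45x^2)^(-1/2) = 0.45x / curve
    tangent_angle = math.degrees(math.atan(0.45 * x / curve))
    camera_angle = math.degrees(math.atan((curve + CAMERA_TO_CURVE_CM) / x))
    # Equivalent to the Y / X / anguTriangulo2 steps of the script.
    outgoing_angle = camera_angle - 2 * tangent_angle
    return x + HEIGHT_ABOVE_FLOOR_CM / math.tan(math.radians(outgoing_angle))
```

Calling `mirror_ground_distance(x)` for each value in `ran` should reproduce the `dists` list up to floating-point rounding.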

+

+This is the graph produced by the code, comparing the visibility distance with the "x" axis position on the mirror hyperbola.

+

+

+

+

+

+**Design simulation in Blender**

+

diff --git a/docs/SoccerOpen/2024/SoccerOpen - 2024.md b/docs/SoccerOpen/2024/SoccerOpen - 2024.md

new file mode 100644

index 0000000..29837ad

--- /dev/null

+++ b/docs/SoccerOpen/2024/SoccerOpen - 2024.md

@@ -0,0 +1,12 @@

+# Soccer Open 2024 Sections

+

+### Electronics

+

+### Mechanics

+

+### Programming

+

+- [📁 Robot Communication](Communication/index.md)

+- [🎮 Robot Control](Control/index.md)

+- [🤖 Algorithm Design](Logic/index.md)

+- [📸 Robot Vision](Vision/index.md)

\ No newline at end of file

diff --git a/docs/SoccerOpen/2024/Vision/index.md b/docs/SoccerOpen/2024/Vision/index.md

new file mode 100644

index 0000000..cd6bf28

--- /dev/null

+++ b/docs/SoccerOpen/2024/Vision/index.md

@@ -0,0 +1,58 @@

+### Blob Detection

+

+For detection, we first had to set some constants using VAD values to detect the orange blob. We also added brightness, saturation, and contrast filters to make the image obtained from the camera clearer.

+

+Our vision algorithm followed these steps:

+

+1. Initialize sensor

+2. Locate blob

+3. Calculate distance

+4. Calculate opposite distance

+5. Calculate goal distance

+6. Calculate angle

+7. Main algorithm

+8. Send data package to ESP32 via UART

+

+The first step when using the camera is to reduce the field of vision by placing a black blob, which reduces image noise. We also locate the center of the frame using constants such as `FRAME_HEIGHT`, `FRAME_WIDTH` and `FRAME_ROBOT`.

+

+For the game, we must be able to differentiate between three types of blobs:

+

+- Yellow goal

+- Blue goal

+- Orange ball

+

+We created the `locate_blob` method, which uses OpenMV's `find_blobs` to locate blobs in the image snapshot for each threshold set and returns a list of blob objects per set. For the ball, we set `area_threshold` to 1: with such a small value, even a small area of this color is marked as an orange ball, which makes detection across our field of vision more permissive. For the goals, `area_threshold` is set to 1000 because the goals are much larger.

+

+Observe the image below to see the different blobs detected from the camera's POV.

+

+

+

+Once the blobs are detected, we can calculate the distance to each blob using the hypotenuse. First we calculate the relative center in the x and y axes, then find the magnitude of the distance, and finally apply an exponential regression model to convert the pixel distance into the real distance. The expression was obtained by taking measurements that compared cm and pixels, using a proportion and data modeling in Excel.

+

+```

+magnitude_distance = math.sqrt(relative_cx**2 + relative_cy**2)

+total_distance = 11.83 * math.exp((0.0245) * magnitude_distance)

+```

+

+For the `goal_distance` we needed to calculate the opposite distance, using sine.

+

+```

+distance_final = goal_distance * math.sin(math.radians(goal_angle))

+```

+

+To calculate the angle, we used the inverse tangent and then converted the result to degrees.

+

+```

+angle = math.atan2(relative_cy, relative_cx)

+angle_degrees = math.degrees(angle)

+```
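
Putting the three snippets above together gives a small helper pair. This is a minimal sketch, assuming the relative center is the blob center minus a reference center of the frame; the function names `blob_polar` and `opposite_distance` and their parameters are hypothetical, while the regression constants are the ones quoted above.

```python
import math


def blob_polar(cx, cy, center_x, center_y):
    """Return (total_distance, angle_degrees) for a blob centered at (cx, cy)."""
    # Assumed convention: offsets are measured from the reference center.
    relative_cx = cx - center_x
    relative_cy = cy - center_y
    magnitude_distance = math.sqrt(relative_cx**2 + relative_cy**2)
    # Exponential regression from pixel distance to real distance.
    total_distance = 11.83 * math.exp(0.0245 * magnitude_distance)
    angle_degrees = math.degrees(math.atan2(relative_cy, relative_cx))
    return total_distance, angle_degrees


def opposite_distance(goal_distance, goal_angle):
    """Opposite side of the triangle formed by the goal distance and angle."""
    return goal_distance * math.sin(math.radians(goal_angle))
```

Note that a blob sitting exactly on the reference center has a pixel distance of 0, so the regression returns its constant term, 11.83, rather than 0.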

+

+Finally, depending on the blob detected in each image snapshot, we run the corresponding methods and send the data package in a format of two floating-point values separated by commas.
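
A comma-separated pair of floats can be built and parsed as in the sketch below. The helper names, the two-decimal precision, the newline terminator and the order of the values are assumptions for illustration; the source only states that two floating-point values are sent. On the camera, the encoded string would be handed to the UART object's write call.

```python
def encode_packet(first, second):
    """Format two floats as 'a,b' followed by a newline (assumed terminator)."""
    return "{:.2f},{:.2f}\n".format(first, second)


def decode_packet(line):
    """Parse a line produced by encode_packet back into two floats."""
    first, second = line.strip().split(",")
    return float(first), float(second)
```

A fixed number of decimals keeps the message length predictable, which simplifies parsing on the receiving microcontroller.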

+

+### Line Detection

+

+To avoid crossing the lines located outside the goals, we implemented the `pixel_distance` measurement: when the robot reaches a certain distance, it automatically moves backward, which limits its movement and keeps it from crossing the white lines.

+

+See the image below to observe the line limitations from the camera's POV.

+

+

\ No newline at end of file

diff --git a/docs/SoccerOpen/index.md b/docs/SoccerOpen/index.md

new file mode 100644