+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

-

-

-

-- ### 模型介绍

-

- - 本模块采用一个像素风格迁移网络 Pix2PixHD,能够根据输入的语义分割标签生成照片风格的图片。为了解决模型归一化层导致标签语义信息丢失的问题,向 Pix2PixHD 的生成器网络中添加了 SPADE(Spatially-Adaptive

- Normalization)空间自适应归一化模块,通过两个卷积层保留了归一化时训练的缩放与偏置参数的空间维度,以增强生成图片的质量。语义风格标签图像可以参考[coco_stuff数据集](https://github.com/nightrome/cocostuff)获取, 也可以通过[PaddleGAN repo中的该项目](https://github.com/PaddlePaddle/PaddleGAN/blob/87537ad9d4eeda17eaa5916c6a585534ab989ea8/docs/zh_CN/tutorials/photopen.md)来自定义生成图像进行体验。

-

-

-

-## 二、安装

-

-- ### 1、环境依赖

- - ppgan

-

-- ### 2、安装

-

- - ```shell

- $ hub install photopen

- ```

- - 如您安装时遇到问题,可参考:[零基础windows安装](../../../../docs/docs_ch/get_start/windows_quickstart.md)

- | [零基础Linux安装](../../../../docs/docs_ch/get_start/linux_quickstart.md) | [零基础MacOS安装](../../../../docs/docs_ch/get_start/mac_quickstart.md)

-

-## 三、模型API预测

-

-- ### 1、命令行预测

-

- - ```shell

- # Read from a file

- $ hub run photopen --input_path "/PATH/TO/IMAGE"

- ```

- - 通过命令行方式实现图像生成模型的调用,更多请见 [PaddleHub命令行指令](../../../../docs/docs_ch/tutorial/cmd_usage.rst)

-

-- ### 2、预测代码示例

-

- - ```python

- import paddlehub as hub

-

- module = hub.Module(name="photopen")

- input_path = ["/PATH/TO/IMAGE"]

- # Read from a file

- module.photo_transfer(paths=input_path, output_dir='./transfer_result/', use_gpu=True)

- ```

-

-- ### 3、API

-

- - ```python

- photo_transfer(images=None, paths=None, output_dir='./transfer_result/', use_gpu=False, visualization=True):

- ```

- - 图像转换生成API。

-

- - **参数**

-

- - images (list\[numpy.ndarray\]): 图片数据,ndarray.shape 为 \[H, W, C\];

- - paths (list\[str\]): 图片的路径;

- - output\_dir (str): 结果保存的路径;

- - use\_gpu (bool): 是否使用 GPU;

- - visualization(bool): 是否保存结果到本地文件夹

-

-

-## 四、服务部署

-

-- PaddleHub Serving可以部署一个在线图像转换生成服务。

-

-- ### 第一步:启动PaddleHub Serving

-

- - 运行启动命令:

- - ```shell

- $ hub serving start -m photopen

- ```

-

- - 这样就完成了一个图像转换生成的在线服务API的部署,默认端口号为8866。

-

- - **NOTE:** 如使用GPU预测,则需要在启动服务之前,请设置CUDA\_VISIBLE\_DEVICES环境变量,否则不用设置。

-

-- ### 第二步:发送预测请求

-

- - 配置好服务端,以下数行代码即可实现发送预测请求,获取预测结果

-

- - ```python

- import requests

- import json

- import cv2

- import base64

-

-

- def cv2_to_base64(image):

- data = cv2.imencode('.jpg', image)[1]

- return base64.b64encode(data.tostring()).decode('utf8')

-

- # 发送HTTP请求

- data = {'images':[cv2_to_base64(cv2.imread("/PATH/TO/IMAGE"))]}

- headers = {"Content-type": "application/json"}

- url = "http://127.0.0.1:8866/predict/photopen"

- r = requests.post(url=url, headers=headers, data=json.dumps(data))

-

- # 打印预测结果

- print(r.json()["results"])

-

-## 五、更新历史

-

-* 1.0.0

-

- 初始发布

-

- - ```shell

- $ hub install ernie_tiny==1.1.0

- ```

diff --git a/modules/text/text_generation/ernie_tiny/README_en.md b/modules/text/text_generation/ernie_tiny/README_en.md

deleted file mode 100644

index 373348799..000000000

--- a/modules/text/text_generation/ernie_tiny/README_en.md

+++ /dev/null

@@ -1,171 +0,0 @@

-# ernie_tiny

-

-|Module Name|ernie_tiny|

-| :--- | :---: |

-|Category|object detection|

-|Network|faster_rcnn|

-|Dataset|COCO2017|

-|Fine-tuning supported or not|No|

-|Module Size|161MB|

-|Latest update date|2021-03-15|

-|Data indicators|-|

-

-

-## I.Basic Information

-

-- ### Application Effect Display

- - Sample results:

-

-  -

-

-

+

+

+

+

+ +

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

-

+

+

-

+

@@ -22,14 +21,14 @@ English | [简体中文](README_ch.md)

## ⭐Features

- **📦400+ AI Models**: Rich, high-quality AI models, including CV, NLP, Speech, Video and Cross-Modal.

-- **🧒Easy to Use**: 3 lines of code to predict the 400+ AI models.

-- **💁Model As Service**: Easy to build a service with only one line of command.

+- **🧒Easy to Use**: 3 lines of code to predict 400+ AI models.

+- **💁Model As Service**: Easy to serve model with only one line of command.

- **💻Cross-platform**: Support Linux, Windows and MacOS.

### 💥Recent Updates

- **🔥2022.08.19:** The v2.3.0 version is released 🎉

- - supports [**ERNIE_ViLG**](./modules/image/text_to_image/ernie_vilg)([Hugging Face Space Demo](https://huggingface.co/spaces/PaddlePaddle/ERNIE-ViLG))

- - supports [**Disco Diffusion(DD)**](./modules/image/text_to_image/disco_diffusion_clip_vitb32) and [**Stable Diffusion(SD)**](./modules/image/text_to_image/stable_diffusion)

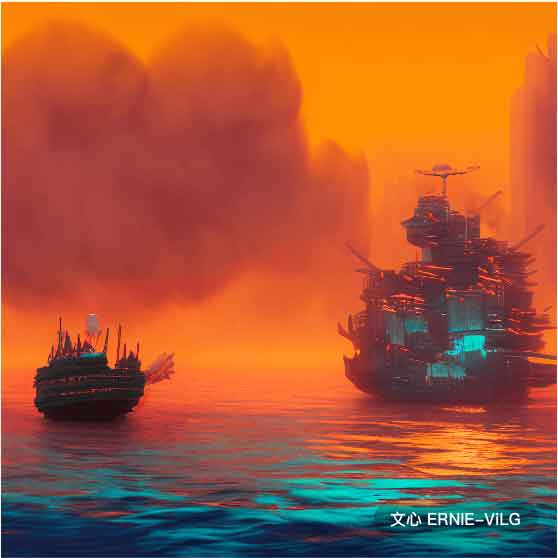

+ - Supports [**ERNIE-ViLG**](./modules/image/text_to_image/ernie_vilg)([HuggingFace Space Demo](https://huggingface.co/spaces/PaddlePaddle/ERNIE-ViLG))

+ - Supports [**Disco Diffusion (DD)**](./modules/image/text_to_image/disco_diffusion_clip_vitb32) and [**Stable Diffusion (SD)**](./modules/image/text_to_image/stable_diffusion)

- **2022.02.18:** Release models to HuggingFace [PaddlePaddle Space](https://huggingface.co/PaddlePaddle)

@@ -40,7 +39,7 @@ English | [简体中文](README_ch.md)

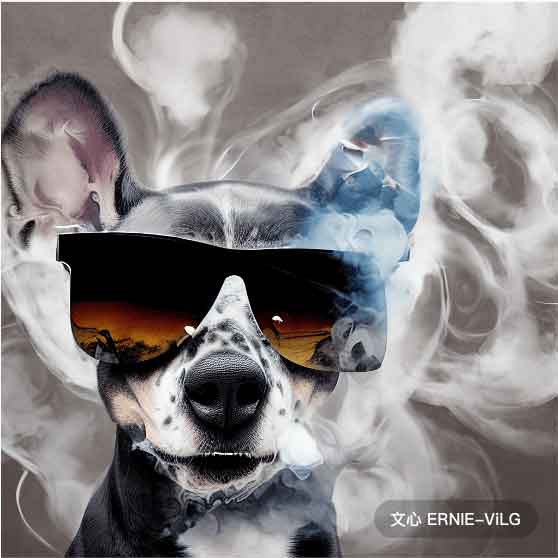

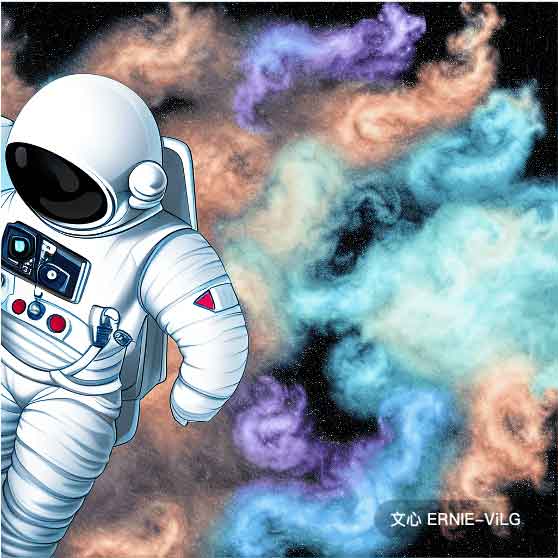

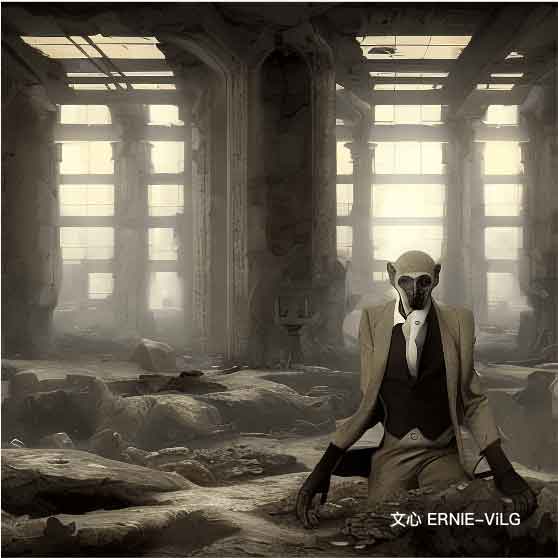

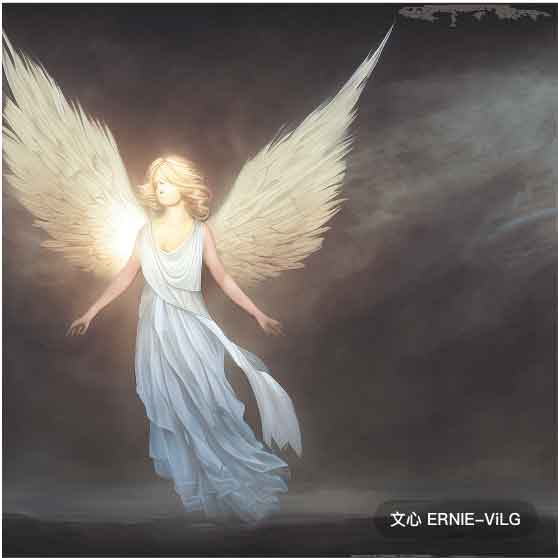

## 🌈Visualization Demo

#### 🏜️ [Text-to-Image Models](https://www.paddlepaddle.org.cn/hubdetail?name=ernie_vilg&en_category=TextToImage)

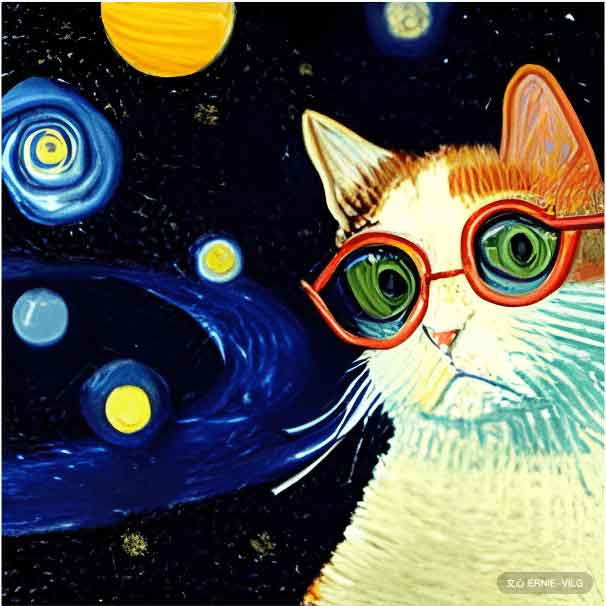

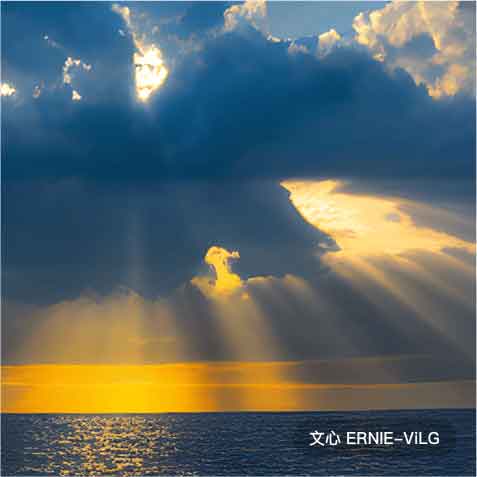

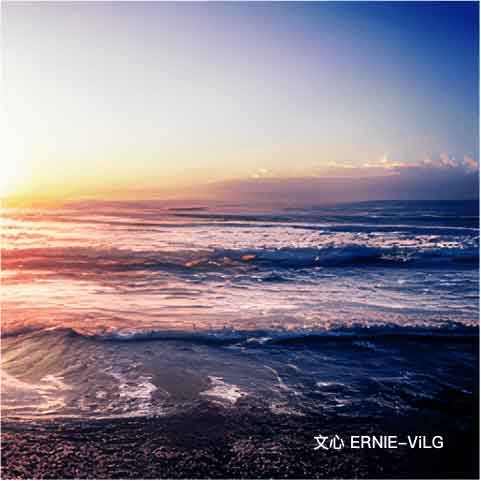

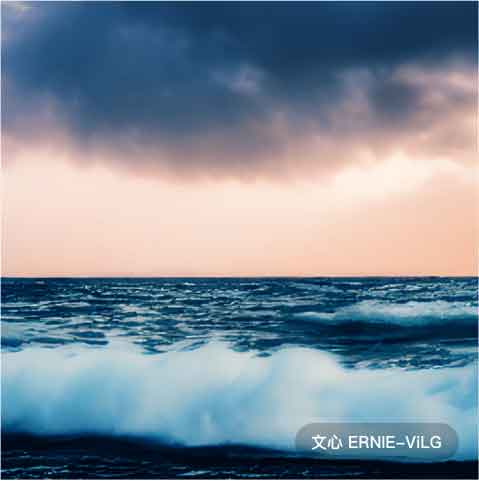

-- Include ERNIE-ViL, ERNIE 3.0 Zeus, supports applications such as text-to-image, writing essays, summarization, couplets, question answering, writing novels and completing text.

+- Include ERNIE-ViLG, ERNIE-ViL, ERNIE 3.0 Zeus, supports applications such as text-to-image, writing essays, summarization, couplets, question answering, writing novels and completing text.

From 2e727825a3b2ee45358b42ee5c12967d7fdb4595 Mon Sep 17 00:00:00 2001

From: jm12138 <2286040843@qq.com>

Date: Wed, 14 Sep 2022 14:23:29 +0800

Subject: [PATCH 050/117] fix typo

---

docs/docs_ch/get_start/linux_quickstart.md | 2 +-

1 file changed, 1 insertion(+), 1 deletion(-)

diff --git a/docs/docs_ch/get_start/linux_quickstart.md b/docs/docs_ch/get_start/linux_quickstart.md

index ebebaa448..c6f08573d 100755

--- a/docs/docs_ch/get_start/linux_quickstart.md

+++ b/docs/docs_ch/get_start/linux_quickstart.md

@@ -206,5 +206,5 @@

-

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+  +

+

+ 输入图像

+

+  +

+

+ 输出图像

+

+- ### 模型介绍

+

+ - PP-TinyPose是PaddleDetecion针对移动端设备优化的实时关键点检测模型,可流畅地在移动端设备上执行多人姿态估计任务。借助PaddleDetecion自研的优秀轻量级检测模型PicoDet以及轻量级姿态估计任务骨干网络HRNet, 结合多种策略有效平衡了模型的速度和精度表现。

+

+ - 更多详情参考:[PP-TinyPose](https://github.com/PaddlePaddle/PaddleDetection/tree/release/2.4/configs/keypoint/tiny_pose)。

+

+

+

+## 二、安装

+

+- ### 1、环境依赖

+

+ - paddlepaddle >= 2.2

+

+ - paddlehub >= 2.2 | [如何安装paddlehub](../../../../docs/docs_ch/get_start/installation.rst)

+

+- ### 2、安装

+

+ - ```shell

+ $ hub install pp-tinypose

+ ```

+ - 如您安装时遇到问题,可参考:[零基础windows安装](../../../../docs/docs_ch/get_start/windows_quickstart.md)

+ | [零基础Linux安装](../../../../docs/docs_ch/get_start/linux_quickstart.md) | [零基础MacOS安装](../../../../docs/docs_ch/get_start/mac_quickstart.md)

+

+

+

+## 三、模型API预测

+

+- ### 1、命令行预测

+

+ - ```shell

+ $ hub run pp-tinypose --input_path "/PATH/TO/IMAGE" --visualization True --use_gpu

+ ```

+ - 通过命令行方式实现关键点检测模型的调用,更多请见 [PaddleHub命令行指令](../../../../docs/docs_ch/tutorial/cmd_usage.rst)

+

+- ### 2、代码示例

+

+ - ```python

+ import paddlehub as hub

+ import cv2

+

+ model = hub.Module(name="pp-tinypose")

+ result = model.predict('/PATH/TO/IMAGE', save_path='pp_tinypose_output', visualization=True, use_gpu=True)

+ ```

+

+- ### 3、API

+

+

+ - ```python

+ def predict(self, img: Union[str, np.ndarray], save_path: str = "pp_tinypose_output", visualization: bool = True, use_gpu = False)

+ ```

+

+ - 预测API,识别输入图片中的所有人肢体关键点。

+

+ - **参数**

+

+ - img (numpy.ndarray|str): 图片数据,使用图片路径或者输入numpy.ndarray,BGR格式;

+ - save_path (str): 图片保存路径, 默认为'pp_tinypose_output';

+ - visualization (bool): 是否将识别结果保存为图片文件;

+ - use_gpu: 是否使用gpu;

+ - **返回**

+

+ - res (list\[dict\]): 识别结果的列表,列表元素依然为列表,存的内容为[图像名称,检测框,关键点]。

+

+

+## 四、服务部署

+

+- PaddleHub Serving 可以部署一个关键点检测的在线服务。

+

+- ### 第一步:启动PaddleHub Serving

+

+ - 运行启动命令:

+ - ```shell

+ $ hub serving start -m pp-tinypose

+ ```

+

+ - 这样就完成了一个关键点检测的服务化API的部署,默认端口号为8866。

+

+ - **NOTE:** 如使用GPU预测,则需要在启动服务之前,请设置CUDA\_VISIBLE\_DEVICES环境变量,否则不用设置。

+

+- ### 第二步:发送预测请求

+

+ - 配置好服务端,以下数行代码即可实现发送预测请求,获取预测结果

+

+ - ```python

+ import requests

+ import json

+ import cv2

+ import base64

+

+ def cv2_to_base64(image):

+ data = cv2.imencode('.jpg', image)[1]

+ return base64.b64encode(data.tostring()).decode('utf8')

+

+ # 发送HTTP请求

+ data = {'images':[cv2_to_base64(cv2.imread("/PATH/TO/IMAGE"))]}

+ headers = {"Content-type": "application/json"}

+ url = "http://127.0.0.1:8866/predict/pp-tinypose"

+ r = requests.post(url=url, headers=headers, data=json.dumps(data))

+ ```

+

+## 五、更新历史

+

+* 1.0.0

+

+ 初始发布

+

+ - ```shell

+ $ hub install pp-tinypose==1.0.0

+ ```

diff --git a/modules/image/keypoint_detection/pp-tinypose/__init__.py b/modules/image/keypoint_detection/pp-tinypose/__init__.py

new file mode 100644

index 000000000..55916b319

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/__init__.py

@@ -0,0 +1,5 @@

+import os

+import sys

+

+CUR_DIR = os.path.dirname(os.path.abspath(__file__))

+sys.path.append(CUR_DIR)

diff --git a/modules/image/keypoint_detection/pp-tinypose/benchmark_utils.py b/modules/image/keypoint_detection/pp-tinypose/benchmark_utils.py

new file mode 100644

index 000000000..e1dd4ec35

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/benchmark_utils.py

@@ -0,0 +1,262 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import logging

+import os

+from pathlib import Path

+

+import paddle

+import paddle.inference as paddle_infer

+

+CUR_DIR = os.path.dirname(os.path.abspath(__file__))

+LOG_PATH_ROOT = f"{CUR_DIR}/../../output"

+

+

+class PaddleInferBenchmark(object):

+

+ def __init__(self,

+ config,

+ model_info: dict = {},

+ data_info: dict = {},

+ perf_info: dict = {},

+ resource_info: dict = {},

+ **kwargs):

+ """

+ Construct PaddleInferBenchmark Class to format logs.

+ args:

+ config(paddle.inference.Config): paddle inference config

+ model_info(dict): basic model info

+ {'model_name': 'resnet50'

+ 'precision': 'fp32'}

+ data_info(dict): input data info

+ {'batch_size': 1

+ 'shape': '3,224,224'

+ 'data_num': 1000}

+ perf_info(dict): performance result

+ {'preprocess_time_s': 1.0

+ 'inference_time_s': 2.0

+ 'postprocess_time_s': 1.0

+ 'total_time_s': 4.0}

+ resource_info(dict):

+ cpu and gpu resources

+ {'cpu_rss': 100

+ 'gpu_rss': 100

+ 'gpu_util': 60}

+ """

+ # PaddleInferBenchmark Log Version

+ self.log_version = "1.0.3"

+

+ # Paddle Version

+ self.paddle_version = paddle.__version__

+ self.paddle_commit = paddle.__git_commit__

+ paddle_infer_info = paddle_infer.get_version()

+ self.paddle_branch = paddle_infer_info.strip().split(': ')[-1]

+

+ # model info

+ self.model_info = model_info

+

+ # data info

+ self.data_info = data_info

+

+ # perf info

+ self.perf_info = perf_info

+

+ try:

+ # required value

+ self.model_name = model_info['model_name']

+ self.precision = model_info['precision']

+

+ self.batch_size = data_info['batch_size']

+ self.shape = data_info['shape']

+ self.data_num = data_info['data_num']

+

+ self.inference_time_s = round(perf_info['inference_time_s'], 4)

+ except:

+ self.print_help()

+ raise ValueError("Set argument wrong, please check input argument and its type")

+

+ self.preprocess_time_s = perf_info.get('preprocess_time_s', 0)

+ self.postprocess_time_s = perf_info.get('postprocess_time_s', 0)

+ self.with_tracker = True if 'tracking_time_s' in perf_info else False

+ self.tracking_time_s = perf_info.get('tracking_time_s', 0)

+ self.total_time_s = perf_info.get('total_time_s', 0)

+

+ self.inference_time_s_90 = perf_info.get("inference_time_s_90", "")

+ self.inference_time_s_99 = perf_info.get("inference_time_s_99", "")

+ self.succ_rate = perf_info.get("succ_rate", "")

+ self.qps = perf_info.get("qps", "")

+

+ # conf info

+ self.config_status = self.parse_config(config)

+

+ # mem info

+ if isinstance(resource_info, dict):

+ self.cpu_rss_mb = int(resource_info.get('cpu_rss_mb', 0))

+ self.cpu_vms_mb = int(resource_info.get('cpu_vms_mb', 0))

+ self.cpu_shared_mb = int(resource_info.get('cpu_shared_mb', 0))

+ self.cpu_dirty_mb = int(resource_info.get('cpu_dirty_mb', 0))

+ self.cpu_util = round(resource_info.get('cpu_util', 0), 2)

+

+ self.gpu_rss_mb = int(resource_info.get('gpu_rss_mb', 0))

+ self.gpu_util = round(resource_info.get('gpu_util', 0), 2)

+ self.gpu_mem_util = round(resource_info.get('gpu_mem_util', 0), 2)

+ else:

+ self.cpu_rss_mb = 0

+ self.cpu_vms_mb = 0

+ self.cpu_shared_mb = 0

+ self.cpu_dirty_mb = 0

+ self.cpu_util = 0

+

+ self.gpu_rss_mb = 0

+ self.gpu_util = 0

+ self.gpu_mem_util = 0

+

+ # init benchmark logger

+ self.benchmark_logger()

+

+ def benchmark_logger(self):

+ """

+ benchmark logger

+ """

+ # remove other logging handler

+ for handler in logging.root.handlers[:]:

+ logging.root.removeHandler(handler)

+

+ # Init logger

+ FORMAT = '%(asctime)s - %(name)s - %(levelname)s - %(message)s'

+ log_output = f"{LOG_PATH_ROOT}/{self.model_name}.log"

+ Path(f"{LOG_PATH_ROOT}").mkdir(parents=True, exist_ok=True)

+ logging.basicConfig(level=logging.INFO,

+ format=FORMAT,

+ handlers=[

+ logging.FileHandler(filename=log_output, mode='w'),

+ logging.StreamHandler(),

+ ])

+ self.logger = logging.getLogger(__name__)

+ self.logger.info(f"Paddle Inference benchmark log will be saved to {log_output}")

+

+ def parse_config(self, config) -> dict:

+ """

+ parse paddle predictor config

+ args:

+ config(paddle.inference.Config): paddle inference config

+ return:

+ config_status(dict): dict style config info

+ """

+ if isinstance(config, paddle_infer.Config):

+ config_status = {}

+ config_status['runtime_device'] = "gpu" if config.use_gpu() else "cpu"

+ config_status['ir_optim'] = config.ir_optim()

+ config_status['enable_tensorrt'] = config.tensorrt_engine_enabled()

+ config_status['precision'] = self.precision

+ config_status['enable_mkldnn'] = config.mkldnn_enabled()

+ config_status['cpu_math_library_num_threads'] = config.cpu_math_library_num_threads()

+ elif isinstance(config, dict):

+ config_status['runtime_device'] = config.get('runtime_device', "")

+ config_status['ir_optim'] = config.get('ir_optim', "")

+ config_status['enable_tensorrt'] = config.get('enable_tensorrt', "")

+ config_status['precision'] = config.get('precision', "")

+ config_status['enable_mkldnn'] = config.get('enable_mkldnn', "")

+ config_status['cpu_math_library_num_threads'] = config.get('cpu_math_library_num_threads', "")

+ else:

+ self.print_help()

+ raise ValueError("Set argument config wrong, please check input argument and its type")

+ return config_status

+

+ def report(self, identifier=None):

+ """

+ print log report

+ args:

+ identifier(string): identify log

+ """

+ if identifier:

+ identifier = f"[{identifier}]"

+ else:

+ identifier = ""

+

+ self.logger.info("\n")

+ self.logger.info("---------------------- Paddle info ----------------------")

+ self.logger.info(f"{identifier} paddle_version: {self.paddle_version}")

+ self.logger.info(f"{identifier} paddle_commit: {self.paddle_commit}")

+ self.logger.info(f"{identifier} paddle_branch: {self.paddle_branch}")

+ self.logger.info(f"{identifier} log_api_version: {self.log_version}")

+ self.logger.info("----------------------- Conf info -----------------------")

+ self.logger.info(f"{identifier} runtime_device: {self.config_status['runtime_device']}")

+ self.logger.info(f"{identifier} ir_optim: {self.config_status['ir_optim']}")

+ self.logger.info(f"{identifier} enable_memory_optim: {True}")

+ self.logger.info(f"{identifier} enable_tensorrt: {self.config_status['enable_tensorrt']}")

+ self.logger.info(f"{identifier} enable_mkldnn: {self.config_status['enable_mkldnn']}")

+ self.logger.info(

+ f"{identifier} cpu_math_library_num_threads: {self.config_status['cpu_math_library_num_threads']}")

+ self.logger.info("----------------------- Model info ----------------------")

+ self.logger.info(f"{identifier} model_name: {self.model_name}")

+ self.logger.info(f"{identifier} precision: {self.precision}")

+ self.logger.info("----------------------- Data info -----------------------")

+ self.logger.info(f"{identifier} batch_size: {self.batch_size}")

+ self.logger.info(f"{identifier} input_shape: {self.shape}")

+ self.logger.info(f"{identifier} data_num: {self.data_num}")

+ self.logger.info("----------------------- Perf info -----------------------")

+ self.logger.info(

+ f"{identifier} cpu_rss(MB): {self.cpu_rss_mb}, cpu_vms: {self.cpu_vms_mb}, cpu_shared_mb: {self.cpu_shared_mb}, cpu_dirty_mb: {self.cpu_dirty_mb}, cpu_util: {self.cpu_util}%"

+ )

+ self.logger.info(

+ f"{identifier} gpu_rss(MB): {self.gpu_rss_mb}, gpu_util: {self.gpu_util}%, gpu_mem_util: {self.gpu_mem_util}%"

+ )

+ self.logger.info(f"{identifier} total time spent(s): {self.total_time_s}")

+

+ if self.with_tracker:

+ self.logger.info(f"{identifier} preprocess_time(ms): {round(self.preprocess_time_s*1000, 1)}, "

+ f"inference_time(ms): {round(self.inference_time_s*1000, 1)}, "

+ f"postprocess_time(ms): {round(self.postprocess_time_s*1000, 1)}, "

+ f"tracking_time(ms): {round(self.tracking_time_s*1000, 1)}")

+ else:

+ self.logger.info(f"{identifier} preprocess_time(ms): {round(self.preprocess_time_s*1000, 1)}, "

+ f"inference_time(ms): {round(self.inference_time_s*1000, 1)}, "

+ f"postprocess_time(ms): {round(self.postprocess_time_s*1000, 1)}")

+ if self.inference_time_s_90:

+ self.looger.info(

+ f"{identifier} 90%_cost: {self.inference_time_s_90}, 99%_cost: {self.inference_time_s_99}, succ_rate: {self.succ_rate}"

+ )

+ if self.qps:

+ self.logger.info(f"{identifier} QPS: {self.qps}")

+

+ def print_help(self):

+ """

+ print function help

+ """

+ print("""Usage:

+ ==== Print inference benchmark logs. ====

+ config = paddle.inference.Config()

+ model_info = {'model_name': 'resnet50'

+ 'precision': 'fp32'}

+ data_info = {'batch_size': 1

+ 'shape': '3,224,224'

+ 'data_num': 1000}

+ perf_info = {'preprocess_time_s': 1.0

+ 'inference_time_s': 2.0

+ 'postprocess_time_s': 1.0

+ 'total_time_s': 4.0}

+ resource_info = {'cpu_rss_mb': 100

+ 'gpu_rss_mb': 100

+ 'gpu_util': 60}

+ log = PaddleInferBenchmark(config, model_info, data_info, perf_info, resource_info)

+ log('Test')

+ """)

+

+ def __call__(self, identifier=None):

+ """

+ __call__

+ args:

+ identifier(string): identify log

+ """

+ self.report(identifier)

diff --git a/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_infer.py b/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_infer.py

new file mode 100644

index 000000000..612f6dd51

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_infer.py

@@ -0,0 +1,230 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import json

+import math

+import os

+

+import cv2

+import numpy as np

+import paddle

+import yaml

+from benchmark_utils import PaddleInferBenchmark

+from det_keypoint_unite_utils import argsparser

+from infer import bench_log

+from infer import Detector

+from infer import get_test_images

+from infer import PredictConfig

+from infer import print_arguments

+from keypoint_infer import KeyPointDetector

+from keypoint_infer import PredictConfig_KeyPoint

+from keypoint_postprocess import translate_to_ori_images

+from preprocess import decode_image

+from utils import get_current_memory_mb

+from visualize import visualize_pose

+

+KEYPOINT_SUPPORT_MODELS = {'HigherHRNet': 'keypoint_bottomup', 'HRNet': 'keypoint_topdown'}

+

+

+def predict_with_given_det(image, det_res, keypoint_detector, keypoint_batch_size, run_benchmark):

+ rec_images, records, det_rects = keypoint_detector.get_person_from_rect(image, det_res)

+ keypoint_vector = []

+ score_vector = []

+

+ rect_vector = det_rects

+ keypoint_results = keypoint_detector.predict_image(rec_images, run_benchmark, repeats=10, visual=False)

+ keypoint_vector, score_vector = translate_to_ori_images(keypoint_results, np.array(records))

+ keypoint_res = {}

+ keypoint_res['keypoint'] = [keypoint_vector.tolist(), score_vector.tolist()] if len(keypoint_vector) > 0 else [[],

+ []]

+ keypoint_res['bbox'] = rect_vector

+ return keypoint_res

+

+

+def topdown_unite_predict(detector, topdown_keypoint_detector, image_list, keypoint_batch_size=1, save_res=False):

+ det_timer = detector.get_timer()

+ store_res = []

+ for i, img_file in enumerate(image_list):

+ # Decode image in advance in det + pose prediction

+ det_timer.preprocess_time_s.start()

+ image, _ = decode_image(img_file, {})

+ det_timer.preprocess_time_s.end()

+

+ if FLAGS.run_benchmark:

+ results = detector.predict_image([image], run_benchmark=True, repeats=10)

+

+ cm, gm, gu = get_current_memory_mb()

+ detector.cpu_mem += cm

+ detector.gpu_mem += gm

+ detector.gpu_util += gu

+ else:

+ results = detector.predict_image([image], visual=False)

+ results = detector.filter_box(results, FLAGS.det_threshold)

+ if results['boxes_num'] > 0:

+ keypoint_res = predict_with_given_det(image, results, topdown_keypoint_detector, keypoint_batch_size,

+ FLAGS.run_benchmark)

+

+ if save_res:

+ save_name = img_file if isinstance(img_file, str) else i

+ store_res.append(

+ [save_name, keypoint_res['bbox'], [keypoint_res['keypoint'][0], keypoint_res['keypoint'][1]]])

+ else:

+ results["keypoint"] = [[], []]

+ keypoint_res = results

+ if FLAGS.run_benchmark:

+ cm, gm, gu = get_current_memory_mb()

+ topdown_keypoint_detector.cpu_mem += cm

+ topdown_keypoint_detector.gpu_mem += gm

+ topdown_keypoint_detector.gpu_util += gu

+ else:

+ if not os.path.exists(FLAGS.output_dir):

+ os.makedirs(FLAGS.output_dir)

+ visualize_pose(img_file, keypoint_res, visual_thresh=FLAGS.keypoint_threshold, save_dir=FLAGS.output_dir)

+ if save_res:

+ """

+ 1) store_res: a list of image_data

+ 2) image_data: [imageid, rects, [keypoints, scores]]

+ 3) rects: list of rect [xmin, ymin, xmax, ymax]

+ 4) keypoints: 17(joint numbers)*[x, y, conf], total 51 data in list

+ 5) scores: mean of all joint conf

+ """

+ with open("det_keypoint_unite_image_results.json", 'w') as wf:

+ json.dump(store_res, wf, indent=4)

+

+

+def topdown_unite_predict_video(detector, topdown_keypoint_detector, camera_id, keypoint_batch_size=1, save_res=False):

+ video_name = 'output.mp4'

+ if camera_id != -1:

+ capture = cv2.VideoCapture(camera_id)

+ else:

+ capture = cv2.VideoCapture(FLAGS.video_file)

+ video_name = os.path.split(FLAGS.video_file)[-1]

+ # Get Video info : resolution, fps, frame count

+ width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))

+ height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))

+ fps = int(capture.get(cv2.CAP_PROP_FPS))

+ frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

+ print("fps: %d, frame_count: %d" % (fps, frame_count))

+

+ if not os.path.exists(FLAGS.output_dir):

+ os.makedirs(FLAGS.output_dir)

+ out_path = os.path.join(FLAGS.output_dir, video_name)

+ fourcc = cv2.VideoWriter_fourcc(*'mp4v')

+ writer = cv2.VideoWriter(out_path, fourcc, fps, (width, height))

+ index = 0

+ store_res = []

+ while (1):

+ ret, frame = capture.read()

+ if not ret:

+ break

+ index += 1

+ print('detect frame: %d' % (index))

+

+ frame2 = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

+

+ results = detector.predict_image([frame2], visual=False)

+ results = detector.filter_box(results, FLAGS.det_threshold)

+ if results['boxes_num'] == 0:

+ writer.write(frame)

+ continue

+

+ keypoint_res = predict_with_given_det(frame2, results, topdown_keypoint_detector, keypoint_batch_size,

+ FLAGS.run_benchmark)

+

+ im = visualize_pose(frame, keypoint_res, visual_thresh=FLAGS.keypoint_threshold, returnimg=True)

+ if save_res:

+ store_res.append([index, keypoint_res['bbox'], [keypoint_res['keypoint'][0], keypoint_res['keypoint'][1]]])

+

+ writer.write(im)

+ if camera_id != -1:

+ cv2.imshow('Mask Detection', im)

+ if cv2.waitKey(1) & 0xFF == ord('q'):

+ break

+ writer.release()

+ print('output_video saved to: {}'.format(out_path))

+ if save_res:

+ """

+ 1) store_res: a list of frame_data

+ 2) frame_data: [frameid, rects, [keypoints, scores]]

+ 3) rects: list of rect [xmin, ymin, xmax, ymax]

+ 4) keypoints: 17(joint numbers)*[x, y, conf], total 51 data in list

+ 5) scores: mean of all joint conf

+ """

+ with open("det_keypoint_unite_video_results.json", 'w') as wf:

+ json.dump(store_res, wf, indent=4)

+

+

+def main():

+ deploy_file = os.path.join(FLAGS.det_model_dir, 'infer_cfg.yml')

+ with open(deploy_file) as f:

+ yml_conf = yaml.safe_load(f)

+ arch = yml_conf['arch']

+ detector = Detector(FLAGS.det_model_dir,

+ device=FLAGS.device,

+ run_mode=FLAGS.run_mode,

+ trt_min_shape=FLAGS.trt_min_shape,

+ trt_max_shape=FLAGS.trt_max_shape,

+ trt_opt_shape=FLAGS.trt_opt_shape,

+ trt_calib_mode=FLAGS.trt_calib_mode,

+ cpu_threads=FLAGS.cpu_threads,

+ enable_mkldnn=FLAGS.enable_mkldnn,

+ threshold=FLAGS.det_threshold)

+

+ topdown_keypoint_detector = KeyPointDetector(FLAGS.keypoint_model_dir,

+ device=FLAGS.device,

+ run_mode=FLAGS.run_mode,

+ batch_size=FLAGS.keypoint_batch_size,

+ trt_min_shape=FLAGS.trt_min_shape,

+ trt_max_shape=FLAGS.trt_max_shape,

+ trt_opt_shape=FLAGS.trt_opt_shape,

+ trt_calib_mode=FLAGS.trt_calib_mode,

+ cpu_threads=FLAGS.cpu_threads,

+ enable_mkldnn=FLAGS.enable_mkldnn,

+ use_dark=FLAGS.use_dark)

+ keypoint_arch = topdown_keypoint_detector.pred_config.arch

+ assert KEYPOINT_SUPPORT_MODELS[

+ keypoint_arch] == 'keypoint_topdown', 'Detection-Keypoint unite inference only supports topdown models.'

+

+ # predict from video file or camera video stream

+ if FLAGS.video_file is not None or FLAGS.camera_id != -1:

+ topdown_unite_predict_video(detector, topdown_keypoint_detector, FLAGS.camera_id, FLAGS.keypoint_batch_size,

+ FLAGS.save_res)

+ else:

+ # predict from image

+ img_list = get_test_images(FLAGS.image_dir, FLAGS.image_file)

+ topdown_unite_predict(detector, topdown_keypoint_detector, img_list, FLAGS.keypoint_batch_size, FLAGS.save_res)

+ if not FLAGS.run_benchmark:

+ detector.det_times.info(average=True)

+ topdown_keypoint_detector.det_times.info(average=True)

+ else:

+ mode = FLAGS.run_mode

+ det_model_dir = FLAGS.det_model_dir

+ det_model_info = {'model_name': det_model_dir.strip('/').split('/')[-1], 'precision': mode.split('_')[-1]}

+ bench_log(detector, img_list, det_model_info, name='Det')

+ keypoint_model_dir = FLAGS.keypoint_model_dir

+ keypoint_model_info = {

+ 'model_name': keypoint_model_dir.strip('/').split('/')[-1],

+ 'precision': mode.split('_')[-1]

+ }

+ bench_log(topdown_keypoint_detector, img_list, keypoint_model_info, FLAGS.keypoint_batch_size, 'KeyPoint')

+

+

+if __name__ == '__main__':

+ paddle.enable_static()

+ parser = argsparser()

+ FLAGS = parser.parse_args()

+ print_arguments(FLAGS)

+ FLAGS.device = FLAGS.device.upper()

+ assert FLAGS.device in ['CPU', 'GPU', 'XPU'], "device should be CPU, GPU or XPU"

+

+ main()

diff --git a/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_utils.py b/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_utils.py

new file mode 100644

index 000000000..309c80814

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/det_keypoint_unite_utils.py

@@ -0,0 +1,86 @@

+# Copyright (c) 2021 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import argparse

+import ast

+

+

+def argsparser():

+ parser = argparse.ArgumentParser(description=__doc__)

+ parser.add_argument("--det_model_dir",

+ type=str,

+ default=None,

+ help=("Directory include:'model.pdiparams', 'model.pdmodel', "

+ "'infer_cfg.yml', created by tools/export_model.py."),

+ required=True)

+ parser.add_argument("--keypoint_model_dir",

+ type=str,

+ default=None,

+ help=("Directory include:'model.pdiparams', 'model.pdmodel', "

+ "'infer_cfg.yml', created by tools/export_model.py."),

+ required=True)

+ parser.add_argument("--image_file", type=str, default=None, help="Path of image file.")

+ parser.add_argument("--image_dir",

+ type=str,

+ default=None,

+ help="Dir of image file, `image_file` has a higher priority.")

+ parser.add_argument("--keypoint_batch_size",

+ type=int,

+ default=8,

+ help=("batch_size for keypoint inference. In detection-keypoint unit"

+ "inference, the batch size in detection is 1. Then collate det "

+ "result in batch for keypoint inference."))

+ parser.add_argument("--video_file",

+ type=str,

+ default=None,

+ help="Path of video file, `video_file` or `camera_id` has a highest priority.")

+ parser.add_argument("--camera_id", type=int, default=-1, help="device id of camera to predict.")

+ parser.add_argument("--det_threshold", type=float, default=0.5, help="Threshold of score.")

+ parser.add_argument("--keypoint_threshold", type=float, default=0.5, help="Threshold of score.")

+ parser.add_argument("--output_dir", type=str, default="output", help="Directory of output visualization files.")

+ parser.add_argument("--run_mode",

+ type=str,

+ default='paddle',

+ help="mode of running(paddle/trt_fp32/trt_fp16/trt_int8)")

+ parser.add_argument("--device",

+ type=str,

+ default='cpu',

+ help="Choose the device you want to run, it can be: CPU/GPU/XPU, default is CPU.")

+ parser.add_argument("--run_benchmark",

+ type=ast.literal_eval,

+ default=False,

+ help="Whether to predict a image_file repeatedly for benchmark")

+ parser.add_argument("--enable_mkldnn", type=ast.literal_eval, default=False, help="Whether use mkldnn with CPU.")

+ parser.add_argument("--cpu_threads", type=int, default=1, help="Num of threads with CPU.")

+ parser.add_argument("--trt_min_shape", type=int, default=1, help="min_shape for TensorRT.")

+ parser.add_argument("--trt_max_shape", type=int, default=1280, help="max_shape for TensorRT.")

+ parser.add_argument("--trt_opt_shape", type=int, default=640, help="opt_shape for TensorRT.")

+ parser.add_argument("--trt_calib_mode",

+ type=bool,

+ default=False,

+ help="If the model is produced by TRT offline quantitative "

+ "calibration, trt_calib_mode need to set True.")

+ parser.add_argument('--use_dark',

+ type=ast.literal_eval,

+ default=True,

+ help='whether to use darkpose to get better keypoint position predict ')

+ parser.add_argument('--save_res',

+ type=bool,

+ default=False,

+ help=("whether to save predict results to json file"

+ "1) store_res: a list of image_data"

+ "2) image_data: [imageid, rects, [keypoints, scores]]"

+ "3) rects: list of rect [xmin, ymin, xmax, ymax]"

+ "4) keypoints: 17(joint numbers)*[x, y, conf], total 51 data in list"

+ "5) scores: mean of all joint conf"))

+ return parser

diff --git a/modules/image/keypoint_detection/pp-tinypose/infer.py b/modules/image/keypoint_detection/pp-tinypose/infer.py

new file mode 100644

index 000000000..fe0764e97

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/infer.py

@@ -0,0 +1,694 @@

+# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import glob

+import json

+import math

+import os

+import sys

+from functools import reduce

+from pathlib import Path

+

+import cv2

+import numpy as np

+import paddle

+import yaml

+from benchmark_utils import PaddleInferBenchmark

+from keypoint_preprocess import EvalAffine

+from keypoint_preprocess import expand_crop

+from keypoint_preprocess import TopDownEvalAffine

+from paddle.inference import Config

+from paddle.inference import create_predictor

+from preprocess import decode_image

+from preprocess import LetterBoxResize

+from preprocess import NormalizeImage

+from preprocess import Pad

+from preprocess import PadStride

+from preprocess import Permute

+from preprocess import preprocess

+from preprocess import Resize

+from preprocess import WarpAffine

+from utils import argsparser

+from utils import get_current_memory_mb

+from utils import Timer

+from visualize import visualize_box

+

+# Global dictionary

+SUPPORT_MODELS = {

+ 'YOLO',

+ 'RCNN',

+ 'SSD',

+ 'Face',

+ 'FCOS',

+ 'SOLOv2',

+ 'TTFNet',

+ 'S2ANet',

+ 'JDE',

+ 'FairMOT',

+ 'DeepSORT',

+ 'GFL',

+ 'PicoDet',

+ 'CenterNet',

+ 'TOOD',

+ 'RetinaNet',

+ 'StrongBaseline',

+ 'STGCN',

+ 'YOLOX',

+}

+

+

+def bench_log(detector, img_list, model_info, batch_size=1, name=None):

+ mems = {

+ 'cpu_rss_mb': detector.cpu_mem / len(img_list),

+ 'gpu_rss_mb': detector.gpu_mem / len(img_list),

+ 'gpu_util': detector.gpu_util * 100 / len(img_list)

+ }

+ perf_info = detector.det_times.report(average=True)

+ data_info = {'batch_size': batch_size, 'shape': "dynamic_shape", 'data_num': perf_info['img_num']}

+ log = PaddleInferBenchmark(detector.config, model_info, data_info, perf_info, mems)

+ log(name)

+

+

+class Detector(object):

+ """

+ Args:

+ pred_config (object): config of model, defined by `Config(model_dir)`

+ model_dir (str): root path of model.pdiparams, model.pdmodel and infer_cfg.yml

+ device (str): Choose the device you want to run, it can be: CPU/GPU/XPU, default is CPU

+ run_mode (str): mode of running(paddle/trt_fp32/trt_fp16)

+ batch_size (int): size of pre batch in inference

+ trt_min_shape (int): min shape for dynamic shape in trt

+ trt_max_shape (int): max shape for dynamic shape in trt

+ trt_opt_shape (int): opt shape for dynamic shape in trt

+ trt_calib_mode (bool): If the model is produced by TRT offline quantitative

+ calibration, trt_calib_mode need to set True

+ cpu_threads (int): cpu threads

+ enable_mkldnn (bool): whether to open MKLDNN

+ enable_mkldnn_bfloat16 (bool): whether to turn on mkldnn bfloat16

+ output_dir (str): The path of output

+ threshold (float): The threshold of score for visualization

+ delete_shuffle_pass (bool): whether to remove shuffle_channel_detect_pass in TensorRT.

+ Used by action model.

+ """

+

+ def __init__(self,

+ model_dir,

+ device='CPU',

+ run_mode='paddle',

+ batch_size=1,

+ trt_min_shape=1,

+ trt_max_shape=1280,

+ trt_opt_shape=640,

+ trt_calib_mode=False,

+ cpu_threads=1,

+ enable_mkldnn=False,

+ enable_mkldnn_bfloat16=False,

+ output_dir='output',

+ threshold=0.5,

+ delete_shuffle_pass=False):

+ self.pred_config = self.set_config(model_dir)

+ self.device = device

+ self.predictor, self.config = load_predictor(model_dir,

+ run_mode=run_mode,

+ batch_size=batch_size,

+ min_subgraph_size=self.pred_config.min_subgraph_size,

+ device=device,

+ use_dynamic_shape=self.pred_config.use_dynamic_shape,

+ trt_min_shape=trt_min_shape,

+ trt_max_shape=trt_max_shape,

+ trt_opt_shape=trt_opt_shape,

+ trt_calib_mode=trt_calib_mode,

+ cpu_threads=cpu_threads,

+ enable_mkldnn=enable_mkldnn,

+ enable_mkldnn_bfloat16=enable_mkldnn_bfloat16,

+ delete_shuffle_pass=delete_shuffle_pass)

+ self.det_times = Timer()

+ self.cpu_mem, self.gpu_mem, self.gpu_util = 0, 0, 0

+ self.batch_size = batch_size

+ self.output_dir = output_dir

+ self.threshold = threshold

+

+ def set_config(self, model_dir):

+ return PredictConfig(model_dir)

+

+ def preprocess(self, image_list):

+ preprocess_ops = []

+ for op_info in self.pred_config.preprocess_infos:

+ new_op_info = op_info.copy()

+ op_type = new_op_info.pop('type')

+ preprocess_ops.append(eval(op_type)(**new_op_info))

+

+ input_im_lst = []

+ input_im_info_lst = []

+ for im_path in image_list:

+ im, im_info = preprocess(im_path, preprocess_ops)

+ input_im_lst.append(im)

+ input_im_info_lst.append(im_info)

+ inputs = create_inputs(input_im_lst, input_im_info_lst)

+ input_names = self.predictor.get_input_names()

+ for i in range(len(input_names)):

+ input_tensor = self.predictor.get_input_handle(input_names[i])

+ input_tensor.copy_from_cpu(inputs[input_names[i]])

+

+ return inputs

+

+ def postprocess(self, inputs, result):

+ # postprocess output of predictor

+ np_boxes_num = result['boxes_num']

+ if np_boxes_num[0] <= 0:

+ print('[WARNNING] No object detected.')

+ result = {'boxes': np.zeros([0, 6]), 'boxes_num': [0]}

+ result = {k: v for k, v in result.items() if v is not None}

+ return result

+

+ def filter_box(self, result, threshold):

+ np_boxes_num = result['boxes_num']

+ boxes = result['boxes']

+ start_idx = 0

+ filter_boxes = []

+ filter_num = []

+ for i in range(len(np_boxes_num)):

+ boxes_num = np_boxes_num[i]

+ boxes_i = boxes[start_idx:start_idx + boxes_num, :]

+ idx = boxes_i[:, 1] > threshold

+ filter_boxes_i = boxes_i[idx, :]

+ filter_boxes.append(filter_boxes_i)

+ filter_num.append(filter_boxes_i.shape[0])

+ start_idx += boxes_num

+ boxes = np.concatenate(filter_boxes)

+ filter_num = np.array(filter_num)

+ filter_res = {'boxes': boxes, 'boxes_num': filter_num}

+ return filter_res

+

+ def predict(self, repeats=1):

+ '''

+ Args:

+ repeats (int): repeats number for prediction

+ Returns:

+ result (dict): include 'boxes': np.ndarray: shape:[N,6], N: number of box,

+ matix element:[class, score, x_min, y_min, x_max, y_max]

+ MaskRCNN's result include 'masks': np.ndarray:

+ shape: [N, im_h, im_w]

+ '''

+ # model prediction

+ np_boxes, np_masks = None, None

+ for i in range(repeats):

+ self.predictor.run()

+ output_names = self.predictor.get_output_names()

+ boxes_tensor = self.predictor.get_output_handle(output_names[0])

+ np_boxes = boxes_tensor.copy_to_cpu()

+ boxes_num = self.predictor.get_output_handle(output_names[1])

+ np_boxes_num = boxes_num.copy_to_cpu()

+ if self.pred_config.mask:

+ masks_tensor = self.predictor.get_output_handle(output_names[2])

+ np_masks = masks_tensor.copy_to_cpu()

+ result = dict(boxes=np_boxes, masks=np_masks, boxes_num=np_boxes_num)

+ return result

+

+ def merge_batch_result(self, batch_result):

+ if len(batch_result) == 1:

+ return batch_result[0]

+ res_key = batch_result[0].keys()

+ results = {k: [] for k in res_key}

+ for res in batch_result:

+ for k, v in res.items():

+ results[k].append(v)

+ for k, v in results.items():

+ if k != 'masks':

+ results[k] = np.concatenate(v)

+ return results

+

+ def get_timer(self):

+ return self.det_times

+

+ def predict_image(self, image_list, run_benchmark=False, repeats=1, visual=True, save_file=None):

+ batch_loop_cnt = math.ceil(float(len(image_list)) / self.batch_size)

+ results = []

+ for i in range(batch_loop_cnt):

+ start_index = i * self.batch_size

+ end_index = min((i + 1) * self.batch_size, len(image_list))

+ batch_image_list = image_list[start_index:end_index]

+ if run_benchmark:

+ # preprocess

+ inputs = self.preprocess(batch_image_list) # warmup

+ self.det_times.preprocess_time_s.start()

+ inputs = self.preprocess(batch_image_list)

+ self.det_times.preprocess_time_s.end()

+

+ # model prediction

+ result = self.predict(repeats=50) # warmup

+ self.det_times.inference_time_s.start()

+ result = self.predict(repeats=repeats)

+ self.det_times.inference_time_s.end(repeats=repeats)

+

+ # postprocess

+ result_warmup = self.postprocess(inputs, result) # warmup

+ self.det_times.postprocess_time_s.start()

+ result = self.postprocess(inputs, result)

+ self.det_times.postprocess_time_s.end()

+ self.det_times.img_num += len(batch_image_list)

+

+ cm, gm, gu = get_current_memory_mb()

+ self.cpu_mem += cm

+ self.gpu_mem += gm

+ self.gpu_util += gu

+ else:

+ # preprocess

+ self.det_times.preprocess_time_s.start()

+ inputs = self.preprocess(batch_image_list)

+ self.det_times.preprocess_time_s.end()

+

+ # model prediction

+ self.det_times.inference_time_s.start()

+ result = self.predict()

+ self.det_times.inference_time_s.end()

+

+ # postprocess

+ self.det_times.postprocess_time_s.start()

+ result = self.postprocess(inputs, result)

+ self.det_times.postprocess_time_s.end()

+ self.det_times.img_num += len(batch_image_list)

+

+ if visual:

+ visualize(batch_image_list,

+ result,

+ self.pred_config.labels,

+ output_dir=self.output_dir,

+ threshold=self.threshold)

+

+ results.append(result)

+ if visual:

+ print('Test iter {}'.format(i))

+

+ if save_file is not None:

+ Path(self.output_dir).mkdir(exist_ok=True)

+ self.format_coco_results(image_list, results, save_file=save_file)

+

+ results = self.merge_batch_result(results)

+ return results

+

+ def predict_video(self, video_file, camera_id):

+ video_out_name = 'output.mp4'

+ if camera_id != -1:

+ capture = cv2.VideoCapture(camera_id)

+ else:

+ capture = cv2.VideoCapture(video_file)

+ video_out_name = os.path.split(video_file)[-1]

+ # Get Video info : resolution, fps, frame count

+ width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))

+ height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))

+ fps = int(capture.get(cv2.CAP_PROP_FPS))

+ frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

+ print("fps: %d, frame_count: %d" % (fps, frame_count))

+

+ if not os.path.exists(self.output_dir):

+ os.makedirs(self.output_dir)

+ out_path = os.path.join(self.output_dir, video_out_name)

+ fourcc = cv2.VideoWriter_fourcc(*'mp4v')

+ writer = cv2.VideoWriter(out_path, fourcc, fps, (width, height))

+ index = 1

+ while (1):

+ ret, frame = capture.read()

+ if not ret:

+ break

+ print('detect frame: %d' % (index))

+ index += 1

+ results = self.predict_image([frame[:, :, ::-1]], visual=False)

+

+ im = visualize_box(frame, results, self.pred_config.labels, threshold=self.threshold)

+ im = np.array(im)

+ writer.write(im)

+ if camera_id != -1:

+ cv2.imshow('Mask Detection', im)

+ if cv2.waitKey(1) & 0xFF == ord('q'):

+ break

+ writer.release()

+

+ @staticmethod

+ def format_coco_results(image_list, results, save_file=None):

+ coco_results = []

+ image_id = 0

+

+ for result in results:

+ start_idx = 0

+ for box_num in result['boxes_num']:

+ idx_slice = slice(start_idx, start_idx + box_num)

+ start_idx += box_num

+

+ image_file = image_list[image_id]

+ image_id += 1

+

+ if 'boxes' in result:

+ boxes = result['boxes'][idx_slice, :]

+ per_result = [

+ {

+ 'image_file': image_file,

+ 'bbox': [box[2], box[3], box[4] - box[2], box[5] - box[3]], # xyxy -> xywh

+ 'score': box[1],

+ 'category_id': int(box[0]),

+ } for k, box in enumerate(boxes.tolist())

+ ]

+

+ elif 'segm' in result:

+ import pycocotools.mask as mask_util

+

+ scores = result['score'][idx_slice].tolist()

+ category_ids = result['label'][idx_slice].tolist()

+ segms = result['segm'][idx_slice, :]

+ rles = [

+ mask_util.encode(np.array(mask[:, :, np.newaxis], dtype=np.uint8, order='F'))[0]

+ for mask in segms

+ ]

+ for rle in rles:

+ rle['counts'] = rle['counts'].decode('utf-8')

+

+ per_result = [{

+ 'image_file': image_file,

+ 'segmentation': rle,

+ 'score': scores[k],

+ 'category_id': category_ids[k],

+ } for k, rle in enumerate(rles)]

+

+ else:

+ raise RuntimeError('')

+

+ # per_result = [item for item in per_result if item['score'] > threshold]

+ coco_results.extend(per_result)

+

+ if save_file:

+ with open(os.path.join(save_file), 'w') as f:

+ json.dump(coco_results, f)

+

+ return coco_results

+

+

+def create_inputs(imgs, im_info):

+ """generate input for different model type

+ Args:

+ imgs (list(numpy)): list of images (np.ndarray)

+ im_info (list(dict)): list of image info

+ Returns:

+ inputs (dict): input of model

+ """

+ inputs = {}

+

+ im_shape = []

+ scale_factor = []

+ if len(imgs) == 1:

+ inputs['image'] = np.array((imgs[0], )).astype('float32')

+ inputs['im_shape'] = np.array((im_info[0]['im_shape'], )).astype('float32')

+ inputs['scale_factor'] = np.array((im_info[0]['scale_factor'], )).astype('float32')

+ return inputs

+

+ for e in im_info:

+ im_shape.append(np.array((e['im_shape'], )).astype('float32'))

+ scale_factor.append(np.array((e['scale_factor'], )).astype('float32'))

+

+ inputs['im_shape'] = np.concatenate(im_shape, axis=0)

+ inputs['scale_factor'] = np.concatenate(scale_factor, axis=0)

+

+ imgs_shape = [[e.shape[1], e.shape[2]] for e in imgs]

+ max_shape_h = max([e[0] for e in imgs_shape])

+ max_shape_w = max([e[1] for e in imgs_shape])

+ padding_imgs = []

+ for img in imgs:

+ im_c, im_h, im_w = img.shape[:]

+ padding_im = np.zeros((im_c, max_shape_h, max_shape_w), dtype=np.float32)

+ padding_im[:, :im_h, :im_w] = img

+ padding_imgs.append(padding_im)

+ inputs['image'] = np.stack(padding_imgs, axis=0)

+ return inputs

+

+

+class PredictConfig():

+ """set config of preprocess, postprocess and visualize

+ Args:

+ model_dir (str): root path of model.yml

+ """

+

+ def __init__(self, model_dir):

+ # parsing Yaml config for Preprocess

+ deploy_file = os.path.join(model_dir, 'infer_cfg.yml')

+ with open(deploy_file) as f:

+ yml_conf = yaml.safe_load(f)

+ self.check_model(yml_conf)

+ self.arch = yml_conf['arch']

+ self.preprocess_infos = yml_conf['Preprocess']

+ self.min_subgraph_size = yml_conf['min_subgraph_size']

+ self.labels = yml_conf['label_list']

+ self.mask = False

+ self.use_dynamic_shape = yml_conf['use_dynamic_shape']

+ if 'mask' in yml_conf:

+ self.mask = yml_conf['mask']

+ self.tracker = None

+ if 'tracker' in yml_conf:

+ self.tracker = yml_conf['tracker']

+ if 'NMS' in yml_conf:

+ self.nms = yml_conf['NMS']

+ if 'fpn_stride' in yml_conf:

+ self.fpn_stride = yml_conf['fpn_stride']

+ if self.arch == 'RCNN' and yml_conf.get('export_onnx', False):

+ print('The RCNN export model is used for ONNX and it only supports batch_size = 1')

+ self.print_config()

+

+ def check_model(self, yml_conf):

+ """

+ Raises:

+ ValueError: loaded model not in supported model type

+ """

+ for support_model in SUPPORT_MODELS:

+ if support_model in yml_conf['arch']:

+ return True

+ raise ValueError("Unsupported arch: {}, expect {}".format(yml_conf['arch'], SUPPORT_MODELS))

+

+ def print_config(self):

+ print('----------- Model Configuration -----------')

+ print('%s: %s' % ('Model Arch', self.arch))

+ print('%s: ' % ('Transform Order'))

+ for op_info in self.preprocess_infos:

+ print('--%s: %s' % ('transform op', op_info['type']))

+ print('--------------------------------------------')

+

+

+def load_predictor(model_dir,

+ run_mode='paddle',

+ batch_size=1,

+ device='CPU',

+ min_subgraph_size=3,

+ use_dynamic_shape=False,

+ trt_min_shape=1,

+ trt_max_shape=1280,

+ trt_opt_shape=640,

+ trt_calib_mode=False,

+ cpu_threads=1,

+ enable_mkldnn=False,

+ enable_mkldnn_bfloat16=False,

+ delete_shuffle_pass=False):

+ """set AnalysisConfig, generate AnalysisPredictor

+ Args:

+ model_dir (str): root path of __model__ and __params__

+ device (str): Choose the device you want to run, it can be: CPU/GPU/XPU, default is CPU

+ run_mode (str): mode of running(paddle/trt_fp32/trt_fp16/trt_int8)

+ use_dynamic_shape (bool): use dynamic shape or not

+ trt_min_shape (int): min shape for dynamic shape in trt

+ trt_max_shape (int): max shape for dynamic shape in trt

+ trt_opt_shape (int): opt shape for dynamic shape in trt

+ trt_calib_mode (bool): If the model is produced by TRT offline quantitative

+ calibration, trt_calib_mode need to set True

+ delete_shuffle_pass (bool): whether to remove shuffle_channel_detect_pass in TensorRT.

+ Used by action model.

+ Returns:

+ predictor (PaddlePredictor): AnalysisPredictor

+ Raises:

+ ValueError: predict by TensorRT need device == 'GPU'.

+ """

+ if device != 'GPU' and run_mode != 'paddle':

+ raise ValueError("Predict by TensorRT mode: {}, expect device=='GPU', but device == {}".format(

+ run_mode, device))

+ config = Config(os.path.join(model_dir, 'model.pdmodel'), os.path.join(model_dir, 'model.pdiparams'))

+ if device == 'GPU':

+ # initial GPU memory(M), device ID

+ config.enable_use_gpu(200, 0)

+ # optimize graph and fuse op

+ config.switch_ir_optim(True)

+ elif device == 'XPU':

+ config.enable_lite_engine()

+ config.enable_xpu(10 * 1024 * 1024)

+ else:

+ config.disable_gpu()

+ config.set_cpu_math_library_num_threads(cpu_threads)

+ if enable_mkldnn:

+ try:

+ # cache 10 different shapes for mkldnn to avoid memory leak

+ config.set_mkldnn_cache_capacity(10)

+ config.enable_mkldnn()

+ if enable_mkldnn_bfloat16:

+ config.enable_mkldnn_bfloat16()

+ except Exception as e:

+ print("The current environment does not support `mkldnn`, so disable mkldnn.")

+ pass

+

+ precision_map = {

+ 'trt_int8': Config.Precision.Int8,

+ 'trt_fp32': Config.Precision.Float32,

+ 'trt_fp16': Config.Precision.Half

+ }

+ if run_mode in precision_map.keys():

+ config.enable_tensorrt_engine(workspace_size=(1 << 25) * batch_size,

+ max_batch_size=batch_size,

+ min_subgraph_size=min_subgraph_size,

+ precision_mode=precision_map[run_mode],

+ use_static=False,

+ use_calib_mode=trt_calib_mode)

+

+ if use_dynamic_shape:

+ min_input_shape = {'image': [batch_size, 3, trt_min_shape, trt_min_shape]}

+ max_input_shape = {'image': [batch_size, 3, trt_max_shape, trt_max_shape]}

+ opt_input_shape = {'image': [batch_size, 3, trt_opt_shape, trt_opt_shape]}

+ config.set_trt_dynamic_shape_info(min_input_shape, max_input_shape, opt_input_shape)

+ print('trt set dynamic shape done!')

+

+ # disable print log when predict

+ config.disable_glog_info()

+ # enable shared memory

+ config.enable_memory_optim()

+ # disable feed, fetch OP, needed by zero_copy_run

+ config.switch_use_feed_fetch_ops(False)

+ if delete_shuffle_pass:

+ config.delete_pass("shuffle_channel_detect_pass")

+ predictor = create_predictor(config)

+ return predictor, config

+

+

+def get_test_images(infer_dir, infer_img):

+ """

+ Get image path list in TEST mode

+ """

+ assert infer_img is not None or infer_dir is not None, \

+ "--image_file or --image_dir should be set"

+ assert infer_img is None or os.path.isfile(infer_img), \

+ "{} is not a file".format(infer_img)

+ assert infer_dir is None or os.path.isdir(infer_dir), \

+ "{} is not a directory".format(infer_dir)

+

+ # infer_img has a higher priority

+ if infer_img and os.path.isfile(infer_img):

+ return [infer_img]

+

+ images = set()

+ infer_dir = os.path.abspath(infer_dir)

+ assert os.path.isdir(infer_dir), \

+ "infer_dir {} is not a directory".format(infer_dir)

+ exts = ['jpg', 'jpeg', 'png', 'bmp']

+ exts += [ext.upper() for ext in exts]

+ for ext in exts:

+ images.update(glob.glob('{}/*.{}'.format(infer_dir, ext)))

+ images = list(images)

+

+ assert len(images) > 0, "no image found in {}".format(infer_dir)

+ print("Found {} inference images in total.".format(len(images)))

+

+ return images

+

+

+def visualize(image_list, result, labels, output_dir='output/', threshold=0.5):

+ # visualize the predict result

+ start_idx = 0

+ for idx, image_file in enumerate(image_list):

+ im_bboxes_num = result['boxes_num'][idx]

+ im_results = {}

+ if 'boxes' in result:

+ im_results['boxes'] = result['boxes'][start_idx:start_idx + im_bboxes_num, :]

+ if 'masks' in result:

+ im_results['masks'] = result['masks'][start_idx:start_idx + im_bboxes_num, :]

+ if 'segm' in result:

+ im_results['segm'] = result['segm'][start_idx:start_idx + im_bboxes_num, :]

+ if 'label' in result:

+ im_results['label'] = result['label'][start_idx:start_idx + im_bboxes_num]

+ if 'score' in result:

+ im_results['score'] = result['score'][start_idx:start_idx + im_bboxes_num]

+

+ start_idx += im_bboxes_num

+ im = visualize_box(image_file, im_results, labels, threshold=threshold)

+ img_name = os.path.split(image_file)[-1]

+ if not os.path.exists(output_dir):

+ os.makedirs(output_dir)

+ out_path = os.path.join(output_dir, img_name)

+ im.save(out_path, quality=95)

+ print("save result to: " + out_path)

+

+

+def print_arguments(args):

+ print('----------- Running Arguments -----------')

+ for arg, value in sorted(vars(args).items()):

+ print('%s: %s' % (arg, value))

+ print('------------------------------------------')

+

+

+def main():

+ deploy_file = os.path.join(FLAGS.model_dir, 'infer_cfg.yml')

+ with open(deploy_file) as f:

+ yml_conf = yaml.safe_load(f)

+ arch = yml_conf['arch']

+ detector_func = 'Detector'

+ if arch == 'SOLOv2':

+ detector_func = 'DetectorSOLOv2'

+ elif arch == 'PicoDet':

+ detector_func = 'DetectorPicoDet'

+

+ detector = eval(detector_func)(FLAGS.model_dir,

+ device=FLAGS.device,

+ run_mode=FLAGS.run_mode,

+ batch_size=FLAGS.batch_size,

+ trt_min_shape=FLAGS.trt_min_shape,

+ trt_max_shape=FLAGS.trt_max_shape,

+ trt_opt_shape=FLAGS.trt_opt_shape,

+ trt_calib_mode=FLAGS.trt_calib_mode,

+ cpu_threads=FLAGS.cpu_threads,

+ enable_mkldnn=FLAGS.enable_mkldnn,

+ enable_mkldnn_bfloat16=FLAGS.enable_mkldnn_bfloat16,

+ threshold=FLAGS.threshold,

+ output_dir=FLAGS.output_dir)

+

+ # predict from video file or camera video stream

+ if FLAGS.video_file is not None or FLAGS.camera_id != -1:

+ detector.predict_video(FLAGS.video_file, FLAGS.camera_id)

+ else:

+ # predict from image

+ if FLAGS.image_dir is None and FLAGS.image_file is not None:

+ assert FLAGS.batch_size == 1, "batch_size should be 1, when image_file is not None"

+ img_list = get_test_images(FLAGS.image_dir, FLAGS.image_file)

+ save_file = os.path.join(FLAGS.output_dir, 'results.json') if FLAGS.save_results else None

+ detector.predict_image(img_list, FLAGS.run_benchmark, repeats=100, save_file=save_file)

+ if not FLAGS.run_benchmark:

+ detector.det_times.info(average=True)

+ else:

+ mode = FLAGS.run_mode

+ model_dir = FLAGS.model_dir

+ model_info = {'model_name': model_dir.strip('/').split('/')[-1], 'precision': mode.split('_')[-1]}

+ bench_log(detector, img_list, model_info, name='DET')

+

+

+if __name__ == '__main__':

+ paddle.enable_static()

+ parser = argsparser()

+ FLAGS = parser.parse_args()

+ print_arguments(FLAGS)

+ FLAGS.device = FLAGS.device.upper()

+ assert FLAGS.device in ['CPU', 'GPU', 'XPU'], "device should be CPU, GPU or XPU"

+ assert not FLAGS.use_gpu, "use_gpu has been deprecated, please use --device"

+

+ assert not (FLAGS.enable_mkldnn == False and FLAGS.enable_mkldnn_bfloat16

+ == True), 'To enable mkldnn bfloat, please turn on both enable_mkldnn and enable_mkldnn_bfloat16'

+

+ main()

diff --git a/modules/image/keypoint_detection/pp-tinypose/keypoint_infer.py b/modules/image/keypoint_detection/pp-tinypose/keypoint_infer.py

new file mode 100644

index 000000000..e782ac1be

--- /dev/null

+++ b/modules/image/keypoint_detection/pp-tinypose/keypoint_infer.py

@@ -0,0 +1,381 @@

+# Copyright (c) 2020 PaddlePaddle Authors. All Rights Reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+import glob

+import math

+import os

+import sys

+import time

+from functools import reduce

+

+import cv2

+import numpy as np

+import paddle

+import yaml

+from PIL import Image

+# add deploy path of PadleDetection to sys.path

+parent_path = os.path.abspath(os.path.join(__file__, *(['..'])))

+sys.path.insert(0, parent_path)

+

+from preprocess import preprocess, NormalizeImage, Permute

+from keypoint_preprocess import EvalAffine, TopDownEvalAffine, expand_crop

+from keypoint_postprocess import HRNetPostProcess

+from visualize import visualize_pose

+from paddle.inference import Config

+from paddle.inference import create_predictor

+from utils import argsparser, Timer, get_current_memory_mb

+from benchmark_utils import PaddleInferBenchmark

+from infer import Detector, get_test_images, print_arguments

+

+# Global dictionary

+KEYPOINT_SUPPORT_MODELS = {'HigherHRNet': 'keypoint_bottomup', 'HRNet': 'keypoint_topdown'}

+

+

+class KeyPointDetector(Detector):

+ """

+ Args:

+ model_dir (str): root path of model.pdiparams, model.pdmodel and infer_cfg.yml

+ device (str): Choose the device you want to run, it can be: CPU/GPU/XPU, default is CPU

+ run_mode (str): mode of running(paddle/trt_fp32/trt_fp16)

+ batch_size (int): size of pre batch in inference

+ trt_min_shape (int): min shape for dynamic shape in trt

+ trt_max_shape (int): max shape for dynamic shape in trt

+ trt_opt_shape (int): opt shape for dynamic shape in trt

+ trt_calib_mode (bool): If the model is produced by TRT offline quantitative

+ calibration, trt_calib_mode need to set True

+ cpu_threads (int): cpu threads

+ enable_mkldnn (bool): whether to open MKLDNN

+ use_dark(bool): whether to use postprocess in DarkPose

+ """

+

+ def __init__(self,

+ model_dir,

+ device='CPU',

+ run_mode='paddle',

+ batch_size=1,

+ trt_min_shape=1,

+ trt_max_shape=1280,

+ trt_opt_shape=640,

+ trt_calib_mode=False,

+ cpu_threads=1,

+ enable_mkldnn=False,

+ output_dir='output',

+ threshold=0.5,

+ use_dark=True):

+ super(KeyPointDetector, self).__init__(

+ model_dir=model_dir,

+ device=device,

+ run_mode=run_mode,

+ batch_size=batch_size,

+ trt_min_shape=trt_min_shape,

+ trt_max_shape=trt_max_shape,

+ trt_opt_shape=trt_opt_shape,

+ trt_calib_mode=trt_calib_mode,

+ cpu_threads=cpu_threads,

+ enable_mkldnn=enable_mkldnn,

+ output_dir=output_dir,

+ threshold=threshold,

+ )

+ self.use_dark = use_dark

+

+ def set_config(self, model_dir):

+ return PredictConfig_KeyPoint(model_dir)

+

+ def get_person_from_rect(self, image, results):

+ # crop the person result from image

+ self.det_times.preprocess_time_s.start()

+ valid_rects = results['boxes']

+ rect_images = []

+ new_rects = []

+ org_rects = []

+ for rect in valid_rects:

+ rect_image, new_rect, org_rect = expand_crop(image, rect)

+ if rect_image is None or rect_image.size == 0:

+ continue

+ rect_images.append(rect_image)

+ new_rects.append(new_rect)

+ org_rects.append(org_rect)

+ self.det_times.preprocess_time_s.end()

+ return rect_images, new_rects, org_rects

+

+ def postprocess(self, inputs, result):

+ np_heatmap = result['heatmap']

+ np_masks = result['masks']

+ # postprocess output of predictor

+ if KEYPOINT_SUPPORT_MODELS[self.pred_config.arch] == 'keypoint_bottomup':

+ results = {}

+ h, w = inputs['im_shape'][0]

+ preds = [np_heatmap]

+ if np_masks is not None:

+ preds += np_masks

+ preds += [h, w]

+ keypoint_postprocess = HRNetPostProcess()

+ kpts, scores = keypoint_postprocess(*preds)

+ results['keypoint'] = kpts

+ results['score'] = scores

+ return results

+ elif KEYPOINT_SUPPORT_MODELS[self.pred_config.arch] == 'keypoint_topdown':

+ results = {}

+ imshape = inputs['im_shape'][:, ::-1]

+ center = np.round(imshape / 2.)

+ scale = imshape / 200.

+ keypoint_postprocess = HRNetPostProcess(use_dark=self.use_dark)

+ kpts, scores = keypoint_postprocess(np_heatmap, center, scale)

+ results['keypoint'] = kpts

+ results['score'] = scores

+ return results

+ else:

+ raise ValueError("Unsupported arch: {}, expect {}".format(self.pred_config.arch, KEYPOINT_SUPPORT_MODELS))

+

+ def predict(self, repeats=1):

+ '''

+ Args:

+ repeats (int): repeat number for prediction

+ Returns:

+ results (dict): include 'boxes': np.ndarray: shape:[N,6], N: number of box,

+ matix element:[class, score, x_min, y_min, x_max, y_max]

+ MaskRCNN's results include 'masks': np.ndarray:

+ shape: [N, im_h, im_w]

+ '''

+ # model prediction

+ np_heatmap, np_masks = None, None

+ for i in range(repeats):

+ self.predictor.run()

+ output_names = self.predictor.get_output_names()

+ heatmap_tensor = self.predictor.get_output_handle(output_names[0])

+ np_heatmap = heatmap_tensor.copy_to_cpu()

+ if self.pred_config.tagmap:

+ masks_tensor = self.predictor.get_output_handle(output_names[1])

+ heat_k = self.predictor.get_output_handle(output_names[2])

+ inds_k = self.predictor.get_output_handle(output_names[3])

+ np_masks = [masks_tensor.copy_to_cpu(), heat_k.copy_to_cpu(), inds_k.copy_to_cpu()]

+ result = dict(heatmap=np_heatmap, masks=np_masks)

+ return result

+

+ def predict_image(self, image_list, run_benchmark=False, repeats=1, visual=True):

+ results = []

+ batch_loop_cnt = math.ceil(float(len(image_list)) / self.batch_size)

+ for i in range(batch_loop_cnt):

+ start_index = i * self.batch_size

+ end_index = min((i + 1) * self.batch_size, len(image_list))

+ batch_image_list = image_list[start_index:end_index]

+ if run_benchmark:

+ # preprocess

+ inputs = self.preprocess(batch_image_list) # warmup

+ self.det_times.preprocess_time_s.start()

+ inputs = self.preprocess(batch_image_list)

+ self.det_times.preprocess_time_s.end()

+

+ # model prediction

+ result_warmup = self.predict(repeats=repeats) # warmup

+ self.det_times.inference_time_s.start()

+ result = self.predict(repeats=repeats)

+ self.det_times.inference_time_s.end(repeats=repeats)

+

+ # postprocess

+ result_warmup = self.postprocess(inputs, result) # warmup

+ self.det_times.postprocess_time_s.start()

+ result = self.postprocess(inputs, result)

+ self.det_times.postprocess_time_s.end()

+ self.det_times.img_num += len(batch_image_list)

+

+ cm, gm, gu = get_current_memory_mb()

+ self.cpu_mem += cm

+ self.gpu_mem += gm

+ self.gpu_util += gu

+

+ else:

+ # preprocess

+ self.det_times.preprocess_time_s.start()

+ inputs = self.preprocess(batch_image_list)

+ self.det_times.preprocess_time_s.end()

+

+ # model prediction

+ self.det_times.inference_time_s.start()

+ result = self.predict()

+ self.det_times.inference_time_s.end()

+

+ # postprocess

+ self.det_times.postprocess_time_s.start()

+ result = self.postprocess(inputs, result)

+ self.det_times.postprocess_time_s.end()

+ self.det_times.img_num += len(batch_image_list)

+

+ if visual:

+ if not os.path.exists(self.output_dir):

+ os.makedirs(self.output_dir)

+ visualize(batch_image_list, result, visual_thresh=self.threshold, save_dir=self.output_dir)

+

+ results.append(result)

+ if visual:

+ print('Test iter {}'.format(i))

+ results = self.merge_batch_result(results)

+ return results

+

+ def predict_video(self, video_file, camera_id):

+ video_name = 'output.mp4'

+ if camera_id != -1:

+ capture = cv2.VideoCapture(camera_id)

+ else:

+ capture = cv2.VideoCapture(video_file)

+ video_name = os.path.split(video_file)[-1]

+ # Get Video info : resolution, fps, frame count

+ width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))

+ height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))

+ fps = int(capture.get(cv2.CAP_PROP_FPS))

+ frame_count = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))

+ print("fps: %d, frame_count: %d" % (fps, frame_count))

+

+ if not os.path.exists(self.output_dir):

+ os.makedirs(self.output_dir)

+ out_path = os.path.join(self.output_dir, video_name)

+ fourcc = cv2.VideoWriter_fourcc(*'mp4v')

+ writer = cv2.VideoWriter(out_path, fourcc, fps, (width, height))

+ index = 1

+ while (1):

+ ret, frame = capture.read()

+ if not ret:

+ break

+ print('detect frame: %d' % (index))

+ index += 1

+ results = self.predict_image([frame[:, :, ::-1]], visual=False)

+ im_results = {}

+ im_results['keypoint'] = [results['keypoint'], results['score']]

+ im = visualize_pose(frame, im_results, visual_thresh=self.threshold, returnimg=True)

+ writer.write(im)

+ if camera_id != -1:

+ cv2.imshow('Mask Detection', im)

+ if cv2.waitKey(1) & 0xFF == ord('q'):

+ break

+ writer.release()

+

+

+def create_inputs(imgs, im_info):

+ """generate input for different model type

+ Args:

+ imgs (list(numpy)): list of image (np.ndarray)

+ im_info (list(dict)): list of image info

+ Returns:

+ inputs (dict): input of model

+ """

+ inputs = {}

+ inputs['image'] = np.stack(imgs, axis=0).astype('float32')

+ im_shape = []

+ for e in im_info:

+ im_shape.append(np.array((e['im_shape'])).astype('float32'))

+ inputs['im_shape'] = np.stack(im_shape, axis=0)

+ return inputs

+

+

+class PredictConfig_KeyPoint():

+ """set config of preprocess, postprocess and visualize

+ Args:

+ model_dir (str): root path of model.yml

+ """

+

+ def __init__(self, model_dir):

+ # parsing Yaml config for Preprocess

+ deploy_file = os.path.join(model_dir, 'infer_cfg.yml')

+ with open(deploy_file) as f:

+ yml_conf = yaml.safe_load(f)

+ self.check_model(yml_conf)

+ self.arch = yml_conf['arch']

+ self.archcls = KEYPOINT_SUPPORT_MODELS[yml_conf['arch']]

+ self.preprocess_infos = yml_conf['Preprocess']

+ self.min_subgraph_size = yml_conf['min_subgraph_size']

+ self.labels = yml_conf['label_list']

+ self.tagmap = False

+ self.use_dynamic_shape = yml_conf['use_dynamic_shape']

+ if 'keypoint_bottomup' == self.archcls:

+ self.tagmap = True

+ self.print_config()

+

+ def check_model(self, yml_conf):

+ """

+ Raises:

+ ValueError: loaded model not in supported model type

+ """

+ for support_model in KEYPOINT_SUPPORT_MODELS:

+ if support_model in yml_conf['arch']:

+ return True

+ raise ValueError("Unsupported arch: {}, expect {}".format(yml_conf['arch'], KEYPOINT_SUPPORT_MODELS))

+

+ def print_config(self):

+ print('----------- Model Configuration -----------')

+ print('%s: %s' % ('Model Arch', self.arch))

+ print('%s: ' % ('Transform Order'))

+ for op_info in self.preprocess_infos:

+ print('--%s: %s' % ('transform op', op_info['type']))

+ print('--------------------------------------------')

+

+

+def visualize(image_list, results, visual_thresh=0.6, save_dir='output'):

+ im_results = {}

+ for i, image_file in enumerate(image_list):

+ skeletons = results['keypoint']

+ scores = results['score']

+ skeleton = skeletons[i:i + 1]

+ score = scores[i:i + 1]

+ im_results['keypoint'] = [skeleton, score]

+ visualize_pose(image_file, im_results, visual_thresh=visual_thresh, save_dir=save_dir)

+

+

+def main():

+ detector = KeyPointDetector(FLAGS.model_dir,

+ device=FLAGS.device,

+ run_mode=FLAGS.run_mode,

+ batch_size=FLAGS.batch_size,

+ trt_min_shape=FLAGS.trt_min_shape,

+ trt_max_shape=FLAGS.trt_max_shape,

+ trt_opt_shape=FLAGS.trt_opt_shape,

+ trt_calib_mode=FLAGS.trt_calib_mode,

+ cpu_threads=FLAGS.cpu_threads,

+ enable_mkldnn=FLAGS.enable_mkldnn,

+ threshold=FLAGS.threshold,

+ output_dir=FLAGS.output_dir,