From ec707eff422ae7263bcf22ab72d03c13960523a8 Mon Sep 17 00:00:00 2001

From: Jack Huang <719668276@qq.com>

Date: Tue, 21 Sep 2021 23:59:32 +0800

Subject: [PATCH] Update to support TensorFlow V2 and modify 2_airl.py

---

2_airl.py | 148 ++++++++++++++++++++----------------------

3_load_airl.py | 19 +++---

README.md | 66 +++++++++----------

algo/discriminator.py | 8 +--

algo/generator.py | 17 ++---

5 files changed, 125 insertions(+), 133 deletions(-)

diff --git a/2_airl.py b/2_airl.py

index f3aace9..15d1a61 100644

--- a/2_airl.py

+++ b/2_airl.py

@@ -4,10 +4,11 @@

@Github: https://github.com/HuangJiaLian

@Date: 2019-10-10 19:27:08

@LastEditors: Jack Huang

-@LastEditTime: 2019-11-18 19:24:52

+@LastEditTime: 2021-08-24 19:24:52

'''

-import tensorflow as tf

+import tensorflow.compat.v1 as tf

+tf.disable_v2_behavior()

import numpy as np

import gym, os

import algo.generator as gen

@@ -42,9 +43,11 @@ def drawRewards(D, episode, path):

plt.clf()

def main():

- # Env

+ # Mountain car env setting

env = gym.make('MountainCar-v0')

ob_space = env.observation_space

+ action_space = env.action_space

+ print(ob_space, action_space)

# For Reinforcement Learning

Policy = gen.Policy_net('policy', env)

@@ -54,74 +57,70 @@ def main():

# For Inverse Reinforcement Learning

D = dis.Discriminator(env)

- # Load Experts Demonstration

+ # Load expert trajectories

expert_observations = np.genfromtxt('exp_traj/observations.csv')

next_expert_observations = np.genfromtxt('exp_traj/next_observations.csv')

expert_actions = np.genfromtxt('exp_traj/actions.csv', dtype=np.int32)

-

+ # Expert returns are only used for showing the mean score, not for training

expert_returns = np.genfromtxt('exp_traj/returns.csv')

mean_expert_return = np.mean(expert_returns)

- max_episode = 24000

+ max_episode = 10000

+ # The maximum step limit in one episode, to make sure the mountain car

+ # task is a finite Markov decision process (MDP).

max_steps = 200

saveReturnEvery = 100

num_expert_tra = 20

- # Logger used to record the training process

+ # Only used to record the training process

train_logger = log.logger(logger_name='AIRL_MCarV0_Training_Log',

- logger_path='./trainingLog/', col_names=['Episode', 'Actor(D)', 'Expert Mean(D)','Actor','Expert Mean'])

+ logger_path='./trainingLog/', col_names=['Episode', 'Actor(D)',

+ 'Expert Mean(D)','Actor','Expert Mean'])

- # Saver to save all the variables

+ # Model saver

model_save_path = './model/'

model_name = 'airl'

saver = tf.train.Saver(max_to_keep=int(max_episode/100))

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

- obs = env.reset()

- # do NOT use original rewards to update policy

for episode in range(max_episode):

if episode % 100 == 0:

print('Episode ', episode)

-

observations = []

actions = []

rewards = []

v_preds = []

- # Iterate over every step of this episode

obs = env.reset()

+ # Interact with the environment until reaching

+ # the terminal state or the maximum step.

for step in range(max_steps):

# if episode % 100 == 0:

# env.render()

+

obs = np.stack([obs]).astype(dtype=np.float32)

- act, v_pred = Policy.get_action(obs=obs, stochastic=True)

- act = np.asscalar(act)

- v_pred = np.asscalar(v_pred)

+ # act, v_pred = Policy.get_action(obs=obs, stochastic=True)

+ act, v_pred = Old_Policy.get_action(obs=obs, stochastic=True)

+

- # Interact with the environment

- next_obs, reward, done, info = env.step(act)

+ next_obs, reward, done, _ = env.step(act)

observations.append(obs)

actions.append(act)

- # The reward here is not used to update the network; it only records the true

- # performance.

+ # DO NOT use original rewards to update policy

rewards.append(reward)

v_preds.append(v_pred)

if done:

- v_preds_next = v_preds[1:] + [0] # next state of terminate state has 0 state value

+ # the state after a terminal state has a state value of 0

+ v_preds_next = v_preds[1:] + [0]

break

else:

obs = next_obs

- # Once the episode is done, the data can be used to train the networks

-

- # Prepare the data

- # The expert's data is already prepared

- # The generator's data

- # convert list to numpy array for feeding tf.placeholder

-

+ # Data preparation

+ # Data for generator: convert list to numpy array for feeding tf.placeholder

next_observations = observations[1:]

observations = observations[:-1]

actions = actions[:-1]

@@ -129,15 +128,18 @@ def main():

next_observations = np.reshape(next_observations, newshape=[-1] + list(ob_space.shape))

observations = np.reshape(observations, newshape=[-1] + list(ob_space.shape))

actions = np.array(actions).astype(dtype=np.int32)

- # Get the G's probabilities

- probabilities = get_probabilities(policy=Policy, observations=observations, actions=actions)

- # Get the experts' probabilities

- expert_probabilities = get_probabilities(policy=Policy, observations=expert_observations, actions=expert_actions)

+

+ # G's probabilities

+ probabilities = get_probabilities(policy=Policy, \

+ observations=observations, actions=actions)

+ # Experts' probabilities

+ expert_probabilities = get_probabilities(policy=Policy, \

+ observations=expert_observations, actions=expert_actions)

- # In numpy, log uses base e

log_probabilities = np.log(probabilities)

log_expert_probabilities = np.log(expert_probabilities)

+ # Prepare data for discriminator

if D.only_position:

observations_for_d = (observations[:,0]).reshape(-1,1)

next_observations_for_d = (next_observations[:,0]).reshape(-1,1)

@@ -146,103 +148,93 @@ def main():

log_probabilities_for_d = log_probabilities.reshape(-1,1)

log_expert_probabilities_for_d = log_expert_probabilities.reshape(-1,1)

- # Arrange the data

obs, obs_next, acts, path_probs = \

- observations_for_d, next_observations_for_d, actions, log_probabilities

+ observations_for_d, next_observations_for_d, \

+ actions.reshape(-1,1), log_probabilities.reshape(-1,1)

expert_obs, expert_obs_next, expert_acts, expert_probs = \

- expert_observations_for_d, next_expert_observations_for_d, expert_actions, log_expert_probabilities

+ expert_observations_for_d, next_expert_observations_for_d, \

+ expert_actions.reshape(-1,1), log_expert_probabilities.reshape(-1,1)

+

- acts = acts.reshape(-1,1)

- expert_acts = expert_acts.reshape(-1,1)

-

- path_probs = path_probs.reshape(-1,1)

- expert_probs = expert_probs.reshape(-1,1)

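- # Train discriminator to obtain the reward function

- # print('Train D')

- # The two classes of data are unequal in size here

- # This could probably be optimized

+ # The two classes of data are unequal in size here; this could probably be optimized?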

+ # Train discriminator

batch_size = 32

for i in range(1):

- # Sample a batch from the generator

+ # Sample generator

nobs_batch, obs_batch, act_batch, lprobs_batch = \

sample_batch(obs_next, obs, acts, path_probs, batch_size=batch_size)

-

- # Sample a batch from the expert

+ # Sample expert

nexpert_obs_batch, expert_obs_batch, expert_act_batch, expert_lprobs_batch = \

- sample_batch(expert_obs_next, expert_obs, expert_acts, expert_probs, batch_size=batch_size)

+ sample_batch(expert_obs_next, expert_obs, expert_acts, \

+ expert_probs, batch_size=batch_size)

- # First half: negative samples (0); second half: positive samples (1)

+ # Label generator samples as 0, indicating that the discriminator

+ # always considers the generator's behavior not good;

+ # label expert samples as 1, indicating that the discriminator

+ # always considers the expert's behavior excellent.

labels = np.zeros((batch_size*2, 1))

labels[batch_size:] = 1.0

- # Concatenate them and feed them to the neural network for training

obs_batch = np.concatenate([obs_batch, expert_obs_batch], axis=0)

nobs_batch = np.concatenate([nobs_batch, nexpert_obs_batch], axis=0)

- # If the reward depends only on state, the line below is unused

- act_batch = np.concatenate([act_batch, expert_act_batch], axis=0)

lprobs_batch = np.concatenate([lprobs_batch, expert_lprobs_batch], axis=0)

D.train(obs_t = obs_batch,

nobs_t = nobs_batch,

lprobs = lprobs_batch,

labels = labels)

+

if episode % 50 == 0:

drawRewards(D=D, episode=episode, path='./trainingLog/')

- # output of this discriminator is reward

+ # The output of this discriminator is reward

if D.score_discrim == False:

d_rewards = D.get_scores(obs_t=observations_for_d)

else:

- d_rewards = D.get_l_scores(obs_t=observations_for_d, nobs_t=next_observations_for_d, lprobs=log_probabilities_for_d)

+ d_rewards = D.get_l_scores(obs_t=observations_for_d, \

+ nobs_t=next_observations_for_d, lprobs=log_probabilities_for_d)

+

d_rewards = np.reshape(d_rewards, newshape=[-1]).astype(dtype=np.float32)

- d_actor_return = np.sum(d_rewards)

- # print(d_actor_return)

+ # Sum rewards to get the return: just for tracking returns over time.

+ d_actor_return = np.sum(d_rewards)

- # d_expert_return: Just For Tracking

if D.score_discrim == False:

expert_d_rewards = D.get_scores(obs_t=expert_observations_for_d)

else:

- expert_d_rewards = D.get_l_scores(obs_t=expert_observations_for_d, nobs_t= next_expert_observations_for_d,lprobs= log_expert_probabilities_for_d )

+ expert_d_rewards = D.get_l_scores(obs_t=expert_observations_for_d, \

+ nobs_t= next_expert_observations_for_d,lprobs= log_expert_probabilities_for_d )

expert_d_rewards = np.reshape(expert_d_rewards, newshape=[-1]).astype(dtype=np.float32)

d_expert_return = np.sum(expert_d_rewards)/num_expert_tra

- # print(d_expert_return)

-

- ######################

- # Start Logging #

- ######################

+

+ #** Start logging **#: only used to track information

train_logger.add_row_data([episode, d_actor_return, d_expert_return,

sum(rewards), mean_expert_return], saveFlag=True)

if episode % saveReturnEvery == 0:

train_logger.plotToFile(title='Return')

- ###################

- # End logging #

- ###################

+ #** End logging **#

gaes = PPO.get_gaes(rewards=d_rewards, v_preds=v_preds, v_preds_next=v_preds_next)

gaes = np.array(gaes).astype(dtype=np.float32)

# gaes = (gaes - gaes.mean()) / gaes.std()

v_preds_next = np.array(v_preds_next).astype(dtype=np.float32)

-

- # Train policy to obtain a better policy

inp = [observations, actions, gaes, d_rewards, v_preds_next]

- # if episode % 4 == 0:

- # PPO.assign_policy_parameters()

-

- PPO.assign_policy_parameters()

-

+ if episode % 4 == 0:

+ PPO.assign_policy_parameters()

+ # PPO.assign_policy_parameters()

for epoch in range(10):

- sample_indices = np.random.randint(low=0, high=observations.shape[0],

- size=32) # indices are in [low, high)

- sampled_inp = [np.take(a=a, indices=sample_indices, axis=0) for a in inp] # sample training data

+ sample_indices = np.random.randint(low=0, high=observations.shape[0],size=32)

+ # sample training data

+ sampled_inp = [np.take(a=a, indices=sample_indices, axis=0) for a in inp]

PPO.train(obs=sampled_inp[0],

actions=sampled_inp[1],

gaes=sampled_inp[2],

rewards=sampled_inp[3],

v_preds_next=sampled_inp[4])

- # Save the whole model

+

+ # Save model

if episode > 0 and episode % 100 == 0:

saver.save(sess, os.path.join(model_save_path, model_name), global_step=episode)

diff --git a/3_load_airl.py b/3_load_airl.py

index bdffd97..5d3c850 100644

--- a/3_load_airl.py

+++ b/3_load_airl.py

@@ -4,10 +4,11 @@

@Github: https://github.com/HuangJiaLian

@Date: 2019-10-10 19:27:08

@LastEditors: Jack Huang

-@LastEditTime: 2019-11-21 22:10:00

+@LastEditTime: 2021-08-24 22:10:00

'''

-import tensorflow as tf

+import tensorflow.compat.v1 as tf

+tf.disable_v2_behavior()

import numpy as np

import gym, os

import argparse

@@ -51,7 +52,7 @@ def main():

num_expert_tra = 20

# Saver to save all the variables

- model_save_path = './modelGAN/'

+ model_save_path = './model/'

model_name = 'airl'

saver = tf.train.Saver()

ckpt = tf.train.get_checkpoint_state(model_save_path)

@@ -95,15 +96,15 @@ def main():

# Iterate over every step of this episode

obs = env.reset()

for step in range(max_steps):

- # if episode % 100 == 0:

- # env.render()

+ if episode % 100 == 0:

+ env.render()

obs = np.stack([obs]).astype(dtype=np.float32)

# When testing, setting stochastic=False gives better performance

- act, v_pred = Policy.get_action(obs=obs, stochastic=True)

- # act, v_pred = Policy.get_action(obs=obs, stochastic=False)

- act = np.asscalar(act)

- v_pred = np.asscalar(v_pred)

+ # act, v_pred = Policy.get_action(obs=obs, stochastic=True)

+ act, v_pred = Policy.get_action(obs=obs, stochastic=False)

+ # act = act.item()

+ # v_pred = v_pred.item()

# Interact with the environment

next_obs, reward, done, info = env.step(act)

diff --git a/README.md b/README.md

index 980fd1d..dc1db45 100644

--- a/README.md

+++ b/README.md

@@ -1,7 +1,7 @@

# Adversarial Inverse Reinforcement Learning implementation for Mountain Car

## Abstract

-This project use [Adversarial Inverse Reinforcement Learning](https://arxiv.org/abs/1710.11248) (AIRL) to learn a optimal policy and a optimal reward function for a basic control problem--[Mountain-Car](https://github.com/openai/gym/wiki/MountainCar-v0). It's important to note that **the reward function that AIRL learned can be transferred to learn a optimal policy from zero, while [Generative Adversarial Imitation Learning](https://arxiv.org/abs/1606.03476) (GAIL) can't.** The [original implementation](https://github.com/justinjfu/inverse_rl) of AIRL was use **rllab**, which is not maintained anymore. In this work, OpenAI gym environment was used for simplicity.

+This project uses [Adversarial Inverse Reinforcement Learning](https://arxiv.org/abs/1710.11248) (AIRL) to learn an optimal policy and a reward function for a basic control problem, [Mountain-Car](https://github.com/openai/gym/wiki/MountainCar-v0). It's important to note that **the reward function that AIRL learns can be transferred to learn an optimal policy from scratch, while [Generative Adversarial Imitation Learning](https://arxiv.org/abs/1606.03476) (GAIL) can't.** The [original implementation](https://github.com/justinjfu/inverse_rl) of AIRL used **rllab**, which is no longer maintained. In this work, the OpenAI Gym environment is used for simplicity.

## Introduction

@@ -13,12 +13,12 @@ AIRL putted two forms of reward function:

It has been shown in the AIRL paper that the State-Only reward function is **more easily transferred** to an environment with different dynamics. For example, the State-Only reward function learned on the four-legged ant can be used to train a disabled two-legged ant, while the State-Action reward function can't.

Although the dynamics of the environment don't change in this work, I still used the State-Only reward function.

-In mountain car problem, the state(observation) of agent has the car's position and the car's velocity alone the track.

+In the mountain car problem, the state (observation) of the agent consists of the car's position and velocity along the track.

-For more simplicity, and to make the learned reward function can be easily explained, I **just use the car's position as the input of reward function**, and ignore the velocity. This **Partial-State** reward function even simpler than the State-Only reward function.

+To make the reward function as simple as possible, I **use only the car's position as the input of the reward function** and ignore the velocity. This **Partial-State** reward function is even simpler than the State-Only reward function.
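A minimal sketch of this Partial-State input, mirroring the slicing that `2_airl.py` applies when `D.only_position` is set (`observations[:,0].reshape(-1,1)`). The function name `position_only` and the sample values are illustrative assumptions, not part of the repository:

```python
import numpy as np

def position_only(observations):
    """Keep only the position column of MountainCar observations.

    Each observation is [position, velocity]; the result has shape (N, 1).
    """
    observations = np.asarray(observations, dtype=np.float32)
    return observations[:, 0].reshape(-1, 1)

# Illustrative observations (made-up values): [position, velocity]
obs = np.array([[-0.5, 0.0], [-0.4, 0.01], [0.3, 0.02]], dtype=np.float32)
positions = position_only(obs)
```

The column vector shape `(N, 1)` matches what the discriminator is fed in the training script.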

-When it comes to the training process, AIRL provide two ways to get score(the reward feed back to agent) from discriminator:

+When it comes to the training process, AIRL provides two ways to get the score (the reward fed back to the agent) from the discriminator:

1. Score is the learned reward function $g_{\theta}(s)$

2. Score is the result of $\log D - \log(1-D)$
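The second score can be sketched as follows, assuming the discriminator form from the AIRL paper, $D = \exp(f) / (\exp(f) + \pi(a|s))$, under which $\log D - \log(1-D)$ simplifies to $f - \log \pi(a|s)$. The names `airl_scores`, `f_values` and `log_pi` are hypothetical and are not taken from `algo/discriminator.py`:

```python
import numpy as np

def airl_scores(f_values, log_pi):
    """Return log D - log(1 - D) for D = exp(f) / (exp(f) + pi)."""
    f_values = np.asarray(f_values, dtype=np.float64)
    log_pi = np.asarray(log_pi, dtype=np.float64)
    # log(exp(f) + pi), computed in a numerically stable way
    log_z = np.logaddexp(f_values, log_pi)
    log_d = f_values - log_z            # log D
    log_one_minus_d = log_pi - log_z    # log(1 - D)
    return log_d - log_one_minus_d      # algebraically equal to f - log_pi

# Illustrative inputs (made-up values)
scores = airl_scores([0.5, -1.0], np.log([0.25, 0.5]))
```

Because the normalizer cancels, the score grows when the discriminator logit is high and the policy assigned low probability to the action it took.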

@@ -27,9 +27,9 @@ Both ways worked. From experiments, I found:

- The reward function becomes meaningless at the very end if training uses $g_{\theta}(s)$ as the score.

- The reward function $g_{\theta}(s)$ is much more stable if training uses $\log D - \log(1-D)$ as the score.

-**The purpose of inverse reinforcement learning (IRL) should be focused more on getting a robust and stable reward function, rather than just getting a optimal policy, which imitation learning (IL) does.**

+From my personal perspective, **the purpose of inverse reinforcement learning (IRL) should be focused more on getting a robust and stable reward function, rather than just getting an optimal policy, which is what imitation learning (IL) does.**

-From this point, I chose the $\log D - log(1-D)$ as the score of reinforcement learning (RL) agent. In this case, it should be noted that the learned reward function $g_{\theta}(s)$ is not used in the policy's training process. But using the learned reward function $g_{\theta}(s)$, we can train the optimal policy from zero.

+For this reason, I chose $\log D - \log(1-D)$ as the score of the reinforcement learning (RL) agent. In this case, I want to mention that the learned reward function $g_{\theta}(s)$ is not used in the policy's training process. Besides, as an example, by using the learned reward function $g_{\theta}(s)$, we can train an optimal policy from scratch.

## Experiments

@@ -57,18 +57,19 @@ I used 20, 50, 200 expert demonstrations to get different experimental results,

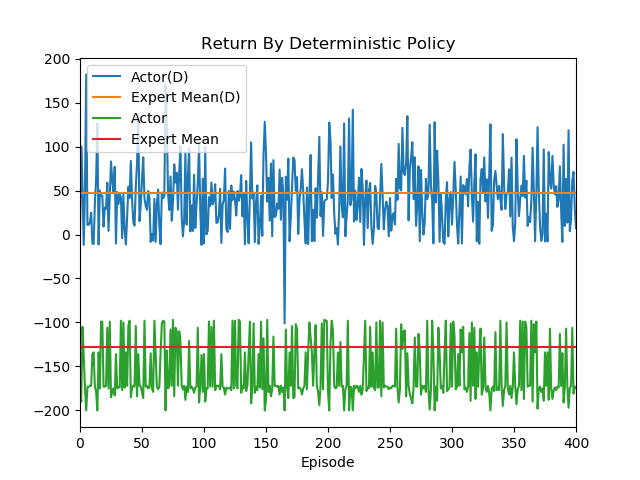

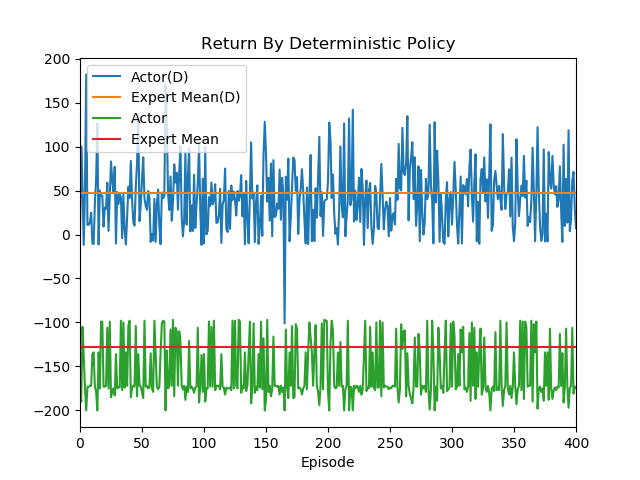

### AIRL train

- +

+

+

Score from discriminator:

- Yellow: The mean return of 20 expert demonstrations;

- Blue: The return of the trained policy's demonstration;

-The discriminator is trying to classify samples from experts and from generator, and the generator is trying it's best to generate samples similar to the experts, so that it can get high score from discriminator.

+The discriminator is trying to classify samples from the experts and from the generator (policy), and the generator is trying its best to generate samples similar to the experts', so that it can get a high score from the discriminator.

-It's clear that in the first 2500 episodes, the discriminator is learning very fast, it can tell expert samples and generator samples very easily.

+It's clear that in the first 2500 episodes the discriminator learns very fast: it can tell expert samples from generator samples very easily, as shown by the clear gap between Expert Mean(D) and Actor(D).

-With the learning process of generator keep going, about 2000 episodes later, the difference between expert return and generator return is getting smaller.

+As the generator keeps learning, after about 4000 episodes the difference between the expert and generator returns gets smaller.

Score from openAI:

- Red: The mean return of 20 expert demonstrations;

@@ -76,16 +77,16 @@ Score from openAI:

Note: OpenAI Gym gives each step a score of `-1`. If the car can't reach the destination after `200` steps, the episode ends with a total score (return) of `-200`. Only if the car reaches the destination within 200 steps will the return be greater than `-200`.
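The arithmetic in this note can be sketched as below. This is only an illustration of the `-1`-per-step rule with a 200-step cap; `episode_return` is a hypothetical helper, not a Gym API:

```python
def episode_return(steps_taken, max_steps=200, step_reward=-1.0):
    """Return of an episode that receives `step_reward` on every step.

    The episode is cut off at `max_steps`, so the return never drops
    below `step_reward * max_steps`.
    """
    steps = min(steps_taken, max_steps)
    return step_reward * steps

timeout_return = episode_return(200)   # the car never reached the goal
success_return = episode_return(120)   # the car reached the goal at step 120
```

Any return above `-200` therefore means the car reached the destination before the step limit.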

-Before 4000 episode, although the generator have learned some, but that was not enough to let the car reach to the destination, so that the green line was keeping at `-200`.

+Before episode 4000, although the generator had learned something, it was not enough to let the car reach the destination, so the green line stayed at `-200`.

-About 5000 episodes latter, the generator was good enough to let the car reach to the destination occasionally. At the same time, some parts of the blue line and the yellow line are overlapped, which indicating that sometimes the generator can perform as better as expert.

+Around episode 7000, the generator was good enough to let the car reach the destination occasionally. At the same time, some parts of the blue line and the yellow line overlap, which indicates that the generator can sometimes perform as well as the expert.

-Although the mean return of generator can't reach to the expert's. But in our case, we think it was good enough. Because our main purpose was a robust reward function.

+The mean return of the generator can't reach the expert's standard. One reason for this is that we use a stochastic policy, which allows some random actions. But in our case, we think it was good enough, because our main purpose was a robust reward function.
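The difference between a stochastic and a deterministic policy mentioned above can be sketched as follows. This is an illustration only: `select_action` is a hypothetical helper and does not reproduce `Policy.get_action` from `algo/generator.py`, and the probabilities are made up:

```python
import numpy as np

def select_action(action_probs, stochastic, rng):
    """Pick an action index from a discrete action distribution."""
    action_probs = np.asarray(action_probs, dtype=np.float64)
    if stochastic:
        # Sample from the distribution: less likely actions still occur.
        return int(rng.choice(len(action_probs), p=action_probs))
    # Deterministic: always take the most probable action.
    return int(np.argmax(action_probs))

rng = np.random.default_rng(0)
probs = [0.1, 0.2, 0.7]  # illustrative distribution over 3 actions
greedy_action = select_action(probs, stochastic=False, rng=rng)
sampled_actions = [select_action(probs, True, rng) for _ in range(1000)]
```

With sampling, the less probable actions are still taken some of the time, which is one way the agent's return can fall short of the expert's.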

### The learned reward function

The learned reward function $g_{\theta}(s)$ at the very end:

- +

+ To better explain the reward, the track of the

mountain car problem is as follows:

@@ -100,7 +101,7 @@ Besides, the shape of learned reward function doesn't change much as the trainin

### Use the learned reward function to train a policy

To test whether the learned reward function was robust and transferable, I fixed the reward function obtained at the very end of training and used it to train a new policy from scratch.

- +

+ After 7000 episodes, the generator's behavior was very close to the expert's.

@@ -108,45 +109,43 @@ After 7000 episode, the generator's behavior was very close to the expert.

### Conclusion

This work used a simplified version of AIRL to solve the OpenAI Mountain Car problem, and obtained a robust and transferable reward function.

-### Q&A

-- **Why reward function only depend on position make sense?**

-- **Reward function not depend on action is non-sense.**

-No, it make sense. For example, how to define a good golf track. Although the direction is really important, but I can design a reward function that only depend on the golf's position, if the golf in the position of good track, the reward will be higher than other position. That means that if you can pass that position, you should and must took the right action. The reward function is indirectly guiding reinforcement learning (RL) algorithm to get good policy. How to take action at a state is the work of policy, not reward function. So that reward function can only depend on state.

-

-- **The reward is not good, I can just draw a slop line (k>0, eg k=2) as reward function.**

+### Questions & Answers

+- *Why does a reward function that depends only on position make sense? A reward function that does not depend on action seems like nonsense.*

+ No, it does make sense. For example, consider how to define a good track for a golf ball. Although the direction is really important, I can design a reward function that depends only on the ball's position: if the ball is at a position on a good track, the reward is higher than at other positions. That means that if the ball passes through that position, you must have taken the right action. The reward function indirectly guides the reinforcement learning (RL) algorithm toward a good policy. How to take an action at a state is the job of the policy, not of the reward function.

+- *The reward is not good; I can just draw a sloped line (k>0, e.g. k=2) as the reward function.*

I can't guarantee that the learned reward function is the optimal reward function, but it really makes sense, and it worked: using this learned reward function, I can train a policy from scratch, which indicates that it is a working reward function. And I don't think a simple sloped line can be better than the learned reward function, because the agent has to go up the left hill to gain enough energy to climb the right hill. So the reward function should tell the agent that the left-hill states are not bad, which a sloped line (k>0) can't do.

-- **Although it is important that the car can climb the right side of the hill, but besides that we also want to know why this car can climb up to the right side. We want to know why. How can you explain the behind story if you just make reward function only depend on the car's position?**

+- *Although it is important that the car can climb the right side of the hill, we also want to know why the car can climb up the right side. How can you explain the story behind it if you make the reward function depend only on the car's position?*

I can't answer at this moment.

-- **If your reward has nothing to do with action, the agent don't know what is right action to take.**

-No, this is not right. Use the state-only reward function, the agent can learn a good policy, which will tell the agent what should be the best action. You should realize that the reward function is **indirectly** guide RL algorithm to learn a good policy.

+- *If the reward has nothing to do with action, the agent doesn't know what the right action to take is.*

+No, that's not the case. Using the state-only reward function, the agent can learn a good policy, which tells the agent what the best action is. You should realize that the reward function **indirectly** guides the RL algorithm's learning.

-- **What's RL algorithm learned?**

+- *What did the RL algorithm learn?*

The RL algorithm learned a good policy, which tells the agent what the best action $a$ is when it is in a state (observation) $s$. Specifically, the learned policy is a collection of action distributions, with a different distribution at each state (observation).

-- **You said you learned the expert's policy, but the agent's returns are bellow the expert's mean return. How could you said the agent learned the expert's policy?**

+- *You said you learned the expert's policy, but the agent's returns are below the expert's mean return. How can you say the agent learned the expert's policy?*

Although the agent's return can't reach the expert's, it's above `-200`, which means the car reaches the right hill within 200 steps. Besides, there is another reason why the agent's return can't reach the expert's mean return: **the agent used a stochastic policy**, which means that the action taken was sampled from the action distribution rather than directly chosen as the most probable one. See Appendix B for details.

-- **Why the agent goes down, if goes down will make the reward less?**

-You don't understand the basics of RL. The main purpose of RL algorithms is to maximize the expected **return--the reward sum along all trajectory**. So that, although it seems that going down makes the total reward less, but the behavior will get more reward and more return in the end of the episode.

+- *Why does the agent go down, if going down makes the reward smaller?*

+Well, this is one basic idea of RL. The main purpose of RL algorithms is to maximize the expected **return, i.e. the sum of rewards along the whole trajectory**. So although going down seems to reduce the reward at first, that behavior leads to more reward and a higher return by the end of the episode.

-- **The agent don't know the right side has higher reward, so the agent will stuck at left.**

-It's a bad question. I reject to answer.

+- *The agent doesn't know the right side has higher reward, so the agent will get stuck on the left.*

+The agent will explore and learn that the right side has higher reward.
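The earlier answer about maximizing the return rather than the immediate reward can be illustrated with a small sketch. The per-step rewards below are made-up numbers chosen only for illustration; they are not outputs of the learned reward function:

```python
def trajectory_return(rewards):
    """Undiscounted return: the sum of rewards along one trajectory."""
    return sum(rewards)

# Hypothetical state-only rewards along two 6-step trajectories.
# A: the car stays near the valley bottom the whole time.
rewards_stay = [-0.5, -0.5, -0.5, -0.5, -0.5, -0.5]
# B: the car first moves to lower-reward states, then climbs the right hill.
rewards_swing = [-0.8, -0.9, -0.5, 0.0, 0.5, 1.0]

return_stay = trajectory_return(rewards_stay)
return_swing = trajectory_return(rewards_swing)
```

Trajectory B starts with lower rewards than trajectory A but ends with the higher return, which is the quantity the RL algorithm maximizes.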

### Appendix A: Some snapshots of learned reward function over training episode

Episode 500:

-

After 7000 episode, the generator's behavior was very close to the expert.

@@ -108,45 +109,43 @@ After 7000 episode, the generator's behavior was very close to the expert.

### Conclusion

This work used a simplified version AIRL to solve OpenAI Mountain Car problem, and got a robust and transferable reward function.

-### Q&A

-- **Why reward function only depend on position make sense?**

-- **Reward function not depend on action is non-sense.**

-No, it make sense. For example, how to define a good golf track. Although the direction is really important, but I can design a reward function that only depend on the golf's position, if the golf in the position of good track, the reward will be higher than other position. That means that if you can pass that position, you should and must took the right action. The reward function is indirectly guiding reinforcement learning (RL) algorithm to get good policy. How to take action at a state is the work of policy, not reward function. So that reward function can only depend on state.

-

-- **The reward is not good, I can just draw a slop line (k>0, eg k=2) as reward function.**

+### Questions & Answers

+- *Why reward function only depend on position make sense? Reward function not depend on action seems non-sense.*

+ No, it does make sense. For example, how to define a good golf track. Although the direction is really important, but I can design a reward function that only depend on the golf's position, if the golf in the position of good track, the reward will be higher than other position. That means that if you can pass that position, you should and must took the right action. The reward function is indirectly guiding reinforcement learning (RL) algorithm to get good policy. How to take action at a state is the work of policy, not reward function.

+- *The reward is not good, I can just draw a slop line (k>0, eg k=2) as reward function.*

I can not bet the learned reward function is the optimal reward function, but it really make sense, and worked. Because using this learned reward function, I can train a policy from zero, which indicating this a worked reward function. And I don't think just a slop line can be better than the learned reward function, cause the agent has to up to the left hill to get enough energy to climb up to the right hill. So than the reward function should tell the agent that the state of left hill is no bad, which a slop (k>0) can't do this.

-- **Although it is important that the car can climb the right side of the hill, but besides that we also want to know why this car can climb up to the right side. We want to know why. How can you explain the behind story if you just make reward function only depend on the car's position?**

+- *Although it is important that the car can climb the right side of the hill, but besides that we also want to know why this car can climb up to the right side. How can you explain the behind story if you just make reward function only depend on the car's position?*

I can't answer at this moment.

-- **If your reward has nothing to do with action, the agent don't know what is right action to take.**

-No, this is not right. Use the state-only reward function, the agent can learn a good policy, which will tell the agent what should be the best action. You should realize that the reward function is **indirectly** guide RL algorithm to learn a good policy.

+- *If the reward has nothing to do with action, the agent don't know what is right action to take.*

+No, it's not the case. Use the state-only reward function, the agent can learn a good policy, which will tell the agent what should be the best action. You should realize that the reward function is **indirectly** guide for RL algorithm to learn.

-- **What's RL algorithm learned?**

+- *What's RL algorithm learned?*

RL algorithm learned a good policy, it can tell the agent what is the best action $a$, when it in one state (observation) $s$. Specifically, the learned policy is a lot of distribution of action. There are different distribution at different state(observation).

-- **You said you learned the expert's policy, but the agent's returns are bellow the expert's mean return. How could you said the agent learned the expert's policy?**

+- *You said you learned the expert's policy, but the agent's returns are bellow the expert's mean return. How could you said the agent learned the expert's policy?*

Although the agent's return can't reach to the expert's, but it's above `-200`, which means the car reach to the right hill within 200 steps. Beside, there are another reason why agent's return can't reach to the mean return of expert's. **The agent took stochastic policy**, which means that the action to take was sample from action distribution, rather than directly chose the most probable one. See Append.B for detail.

-- **Why the agent goes down, if goes down will make the reward less?**

-You don't understand the basics of RL. The main purpose of RL algorithms is to maximize the expected **return--the reward sum along all trajectory**. So that, although it seems that going down makes the total reward less, but the behavior will get more reward and more return in the end of the episode.

+- *Why the agent goes down, if goes down will make the reward less?*

+Well, this one basic idea of RL. The main purpose of RL algorithms is to maximize the expected **return--the reward sum along all trajectory**. So that, although it seems that going down makes the total reward less, but the behavior will get more reward and more return in the end of the episode.

-- **The agent don't know the right side has higher reward, so the agent will stuck at left.**

-It's a bad question. I reject to answer.

+- *The agent doesn't know the right side has higher reward, so the agent will get stuck on the left.*

+By trying different actions, the agent would learn that the right side has higher reward.

### Appendix A: Some snapshots of the learned reward function over training episodes

Episode 500:

-[image]
+[image]

Episode 1000:

-[image]
+[image]

Episode 2000:

-[image]
+[image]

Episode 4000:

@@ -170,4 +169,3 @@ Episode 23000:

-

diff --git a/algo/discriminator.py b/algo/discriminator.py

index 3f2bd46..96ed54e 100644

--- a/algo/discriminator.py

+++ b/algo/discriminator.py

@@ -1,13 +1,14 @@

'''

-@Description: Discriminator of the AIRL algorithm

+@Description: Discriminator of AIRL

@Author: Jack Huang

@Github: https://github.com/HuangJiaLian

@Date: 2019-10-11 19:18:07

@LastEditors: Jack Huang

-@LastEditTime: 2019-11-18 17:36:07

+@LastEditTime: 2021-08-24 17:36:07

'''

-import tensorflow as tf

+import tensorflow.compat.v1 as tf

+tf.disable_v2_behavior()

import numpy as np

class Discriminator:

@@ -99,6 +100,5 @@ def get_l_scores(self, obs_t, nobs_t, lprobs):

         return scores
-
     def get_trainable_variables(self):
         return tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, self.scope)

\ No newline at end of file

diff --git a/algo/generator.py b/algo/generator.py

index 2c47f89..7f74194 100644

--- a/algo/generator.py

+++ b/algo/generator.py

@@ -4,10 +4,11 @@

@Github: https://github.com/HuangJiaLian

@Date: 2019-10-11 19:17:36

@LastEditors: Jack Huang

-@LastEditTime: 2019-11-15 19:04:03

+@LastEditTime: 2021-08-24 19:04:03

'''

-import tensorflow as tf

+import tensorflow.compat.v1 as tf

+tf.disable_v2_behavior()

import copy

class Policy_net:

@@ -21,13 +22,12 @@ def __init__(self, name: str, env):

         with tf.variable_scope(name):
             self.obs = tf.placeholder(dtype=tf.float32, shape=[None] + list(ob_space.shape), name='obs')
-            # Actor
-            # Input 20 20 act_space.n act_space.n
+            # Actor (Policy): Given a state (or observation)
+            # obtain the distribution of actions
             with tf.variable_scope('policy_net'):
                 layer_1 = tf.layers.dense(inputs=self.obs, units=20, activation=tf.tanh)
                 layer_2 = tf.layers.dense(inputs=layer_1, units=20, activation=tf.tanh)
                 layer_3 = tf.layers.dense(inputs=layer_2, units=act_space.n, activation=tf.tanh)
-                # Output the action probabilities
                 self.act_probs = tf.layers.dense(inputs=layer_3, units=act_space.n, activation=tf.nn.softmax)
             # Critic

@@ -46,14 +46,15 @@ def __init__(self, name: str, env):

     def get_action(self, obs, stochastic=True):
         if stochastic:
-            return tf.get_default_session().run([self.act_stochastic, self.v_preds], feed_dict={self.obs: obs})
+            act, v_pred = tf.get_default_session().run([self.act_stochastic, self.v_preds], feed_dict={self.obs: obs})
+            return act.item(), v_pred.item()
         else:
-            return tf.get_default_session().run([self.act_deterministic, self.v_preds], feed_dict={self.obs: obs})
+            act, v_pred = tf.get_default_session().run([self.act_deterministic, self.v_preds], feed_dict={self.obs: obs})
+            return act.item(), v_pred.item()
     def get_trainable_variables(self):
         return tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, self.scope)
-    # Last Edit
     def get_distribution(self, obs):
         return tf.get_default_session().run(self.act_probs,feed_dict={self.obs: obs})

-
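
The reason for `.item()` in `get_action` can be sketched with NumPy alone. I assume here that `session.run` hands back size-1 NumPy arrays when a single observation is fed, which is what the change above appears to rely on; the arrays below are stand-ins, not real network outputs.

```python
import numpy as np

# Stand-ins for what session.run might return for a batch of one observation.
act = np.array([2], dtype=np.int64)            # sampled action, shape (1,)
v_pred = np.array([[0.37]], dtype=np.float32)  # value estimate, shape (1, 1)

act_scalar = act.item()    # plain Python int
v_scalar = v_pred.item()   # plain Python float
print(type(act_scalar).__name__, type(v_scalar).__name__)

# .item() only works on arrays holding exactly one element.
try:
    np.array([0, 1]).item()
    item_failed = False
except ValueError:
    item_failed = True
```

Returning plain Python scalars spares callers from unpacking one-element arrays themselves, at the cost of `get_action` no longer accepting a batch of several observations.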
